Data migration helps organizations improve data management and achieve their business goals. If you are planning a migration, you need a destination that can receive and store the data reliably. Google BigQuery is one such data warehouse, enabling you to store, manage, and analyze large volumes of data.

However, when your data changes at the source, you need a way to keep your BigQuery dataset up to date. Webhooks act as a digital messenger between software applications: when an event occurs in one application, it notifies another. This makes webhooks a suitable trigger for starting a data transfer.

Using HTTP webhooks, you can load data into BigQuery as events occur and analyze it in near real time. This article illustrates two methods to load data from Webhook to BigQuery.

Introduction to Webhooks

Webhooks are an HTTP-based callback mechanism triggered when a change or update occurs in one application. That application then sends an HTTP request, typically a POST with a JSON payload, to a URL registered by the other system to report the change. By transferring data between the source and destination systems this way, webhooks enable synchronization and information sharing. Webhooks follow an automated, push-based model, so the receiving system does not need to check for updates manually. Developers can also customize them to fit their specific needs.

Prominent features of Webhooks include:

- Asynchronicity: Webhooks decouple the source from the destination. The source pushes a notification when an event happens, and the destination processes it independently, without waiting or polling. This enables near-real-time updates between the two systems, which are usually web pages or web applications.

- Easy Integration: Webhooks require minimal resources and infrastructure. The receiver only needs to expose an HTTP endpoint, so webhooks are easy to integrate with most web frameworks and libraries.

- Error Management: Many webhook providers build error handling into their delivery process. If the receiving endpoint returns an error or does not respond, the provider retries the delivery, often several times, which helps keep data flowing consistently.

- No Constant Connection: Unlike polling a traditional API, webhooks do not require a constant connection between applications. The source application sends information only when there is something to report, which keeps communication efficient.
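The retry behavior described under Error Management runs on the sender's side, and the exact policy (number of attempts, delays) varies by provider. As a rough sketch of the idea, assume a hypothetical `send` function that POSTs a payload and returns an HTTP status code. A sender might then retry with exponential backoff like this:

```python
import time
from typing import Callable


def deliver_with_retry(
    send: Callable[[dict], int],
    payload: dict,
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Try to deliver a webhook payload, retrying failures with exponential backoff.

    `send` is a hypothetical callable that POSTs the payload and returns the
    HTTP status code. Any 2xx status counts as a successful delivery.
    """
    for attempt in range(max_attempts):
        try:
            status = send(payload)
            if 200 <= status < 300:
                return True
        except OSError:
            # Network errors (connection refused, timeouts) are treated as retryable
            pass
        if attempt < max_attempts - 1:
            # Wait base_delay * 1, 2, 4, ... seconds between attempts
            sleep(base_delay * (2 ** attempt))
    return False


# Simulated receiver: fails twice, then accepts the delivery
responses = iter([503, 500, 200])
delays = []
ok = deliver_with_retry(lambda p: next(responses), {"event": "order.created"}, sleep=delays.append)
print(ok, delays)  # True [1.0, 2.0]
```

Injecting `sleep` keeps the sketch testable without real waiting. A production sender would usually also add random jitter and cap the maximum delay.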

BigQuery Overview

BigQuery is a serverless data warehouse provided by Google Cloud. It is a fully managed and cost-effective analytics tool designed to run complex queries on large datasets quickly. You can use SQL to query, transform, and load data in BigQuery.

Some key features of BigQuery are:

- Serverless Architecture: BigQuery runs on a serverless architecture, so you do not have to manage infrastructure. Google Cloud takes care of it in the backend, which lets you focus on analytics and decision-making.

- Integrated With Other Google Cloud Services: BigQuery integrates with other Google Cloud services, such as Cloud Dataprep, Google Cloud Storage, and Dataflow. This gives you access to a wide range of Google services for preparing, storing, and processing data.

- High Scalability: BigQuery scales to analyze datasets ranging from gigabytes to petabytes. It allocates compute resources automatically based on the size of each query, which helps you work with large datasets.

- Multi-Cloud Tool: With BigQuery Omni, you can analyze data stored in other clouds, such as AWS and Azure. This makes it easier for different organizational departments to share and analyze data without copying or moving it.

- Price Structure: BigQuery offers a pay-as-you-go model. With on-demand pricing, you pay for the data you store and for the data your queries process.
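Under on-demand pricing, query charges scale with the number of bytes each query scans. The following back-of-the-envelope estimator makes the pay-as-you-go model concrete. The per-TiB rate and the free allowance are inputs you should take from the current BigQuery pricing page for your region. The $6.25 and 1 TiB values in the example are assumptions for illustration only. The estimator also ignores billing details such as per-query minimum charges.

```python
TIB = 2 ** 40  # on-demand query pricing is quoted per tebibyte (TiB)


def estimate_query_cost(bytes_processed: int, price_per_tib: float, free_tib: float = 0.0) -> float:
    """Rough on-demand estimate: (TiB processed - free allowance) x price per TiB.

    price_per_tib and free_tib are inputs, not hard-coded rates: check current
    BigQuery pricing before relying on the result.
    """
    billable_tib = max(bytes_processed / TIB - free_tib, 0.0)
    return round(billable_tib * price_per_tib, 2)


# Example: 3 TiB scanned in a month, an assumed $6.25/TiB rate, 1 TiB free allowance
print(estimate_query_cost(3 * TIB, price_per_tib=6.25, free_tib=1.0))  # 12.5
```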

Best Ways to Load Data to BigQuery — Webhook to BigQuery Integration

You can load data from Webhook to BigQuery by using one of the two methods below:

Method 1: Using Estuary Flow to Load Data From Webhook to BigQuery

Method 2: Using Custom Code to Load Data From Webhook to BigQuery

Method 1: Using Estuary Flow to Load Data From Webhook to BigQuery

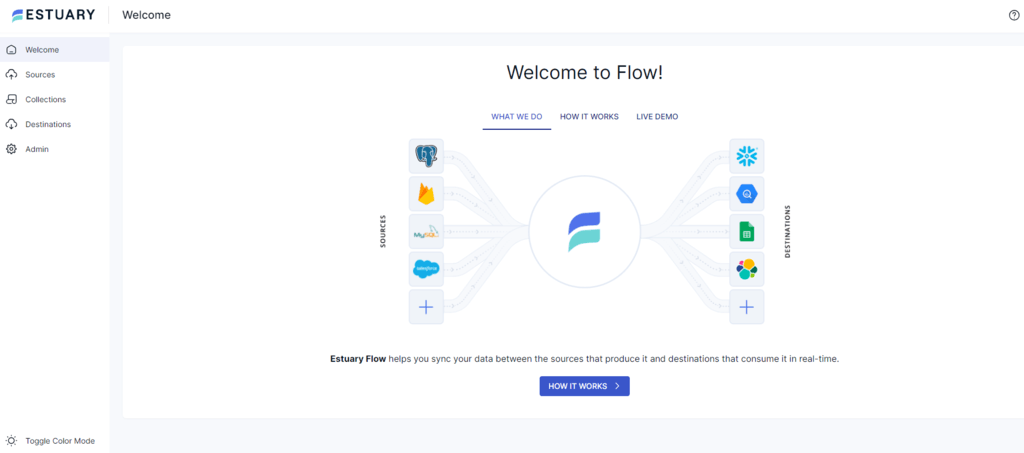

Estuary Flow is a data integration platform designed to simplify the often complex process of moving data between sources and destinations. Its user-friendly interface streamlines the workflow from source to destination, so users of all skill levels can configure data pipelines and automate data flows.

Let’s dive in to see how it works!

Step 1: Connect Webhook as a Source

- Sign up for or log in to your Estuary Flow account.

- Remember to check out the prerequisites for the HTTP Webhook and BigQuery connectors!

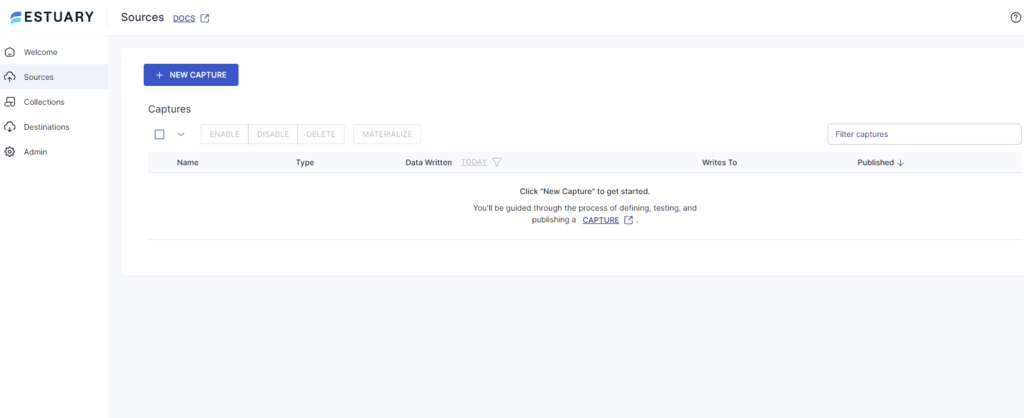

- On the main dashboard, click on the Sources tab on the left side.

- On the top left corner of the Sources page, click on the +NEW CAPTURE button.

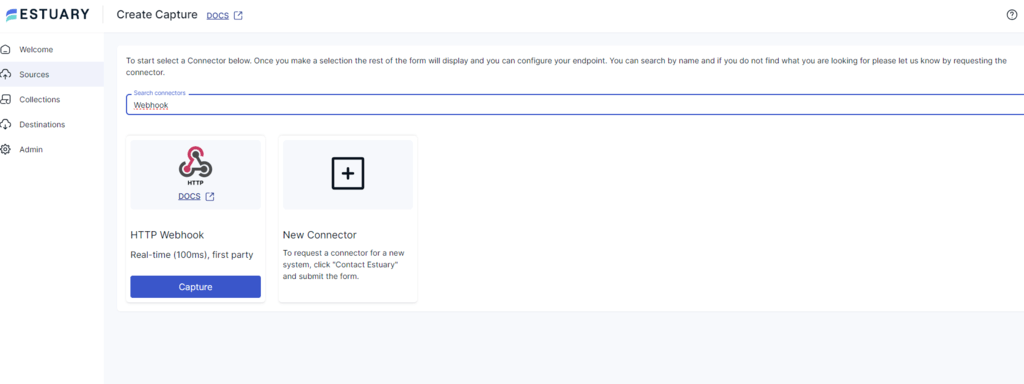

- Type Webhook in the Search Connectors box. You will see an HTTP Webhook connector with a Capture button at the bottom. Click on it.

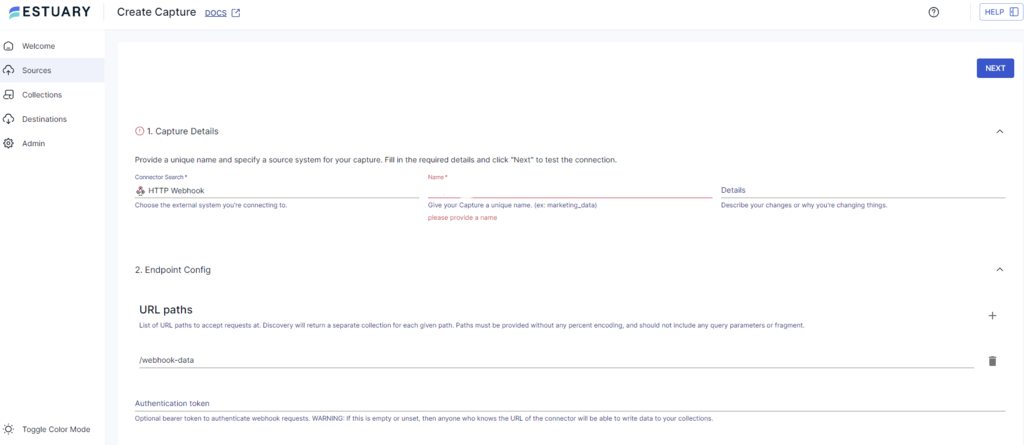

- On the Create Capture page, fill in the details for the Name, URL paths, and Authentication token fields. Click on Next. Then, click on Save and Publish.
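Once the capture is published, you can check it by sending it a test event yourself. The sketch below uses only the Python standard library. It assumes the connector expects the authentication token as a bearer token in the `Authorization` header; confirm this in the HTTP Webhook connector documentation. `WEBHOOK_URL` and `AUTH_TOKEN` are placeholders for the endpoint URL Flow provides for your capture and the token you entered. `build_test_request` is a hypothetical helper.

```python
import json
import urllib.request

# Placeholders: use your capture's real endpoint URL and the token
# you entered in the Authentication token field.
WEBHOOK_URL = "https://example.com/your-capture-endpoint"
AUTH_TOKEN = "your-auth-token"


def build_test_request(url: str, token: str, event: dict) -> urllib.request.Request:
    """Build a JSON POST request that sends the token as a bearer token (assumed scheme)."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


req = build_test_request(WEBHOOK_URL, AUTH_TOKEN, {"id": 1, "event": "test"})

# Uncomment to actually send the event once the URL and token are real:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(resp.status)
```

If the request succeeds, the event should appear in the capture's collection in Flow.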

Step 2: Connect BigQuery as a Destination

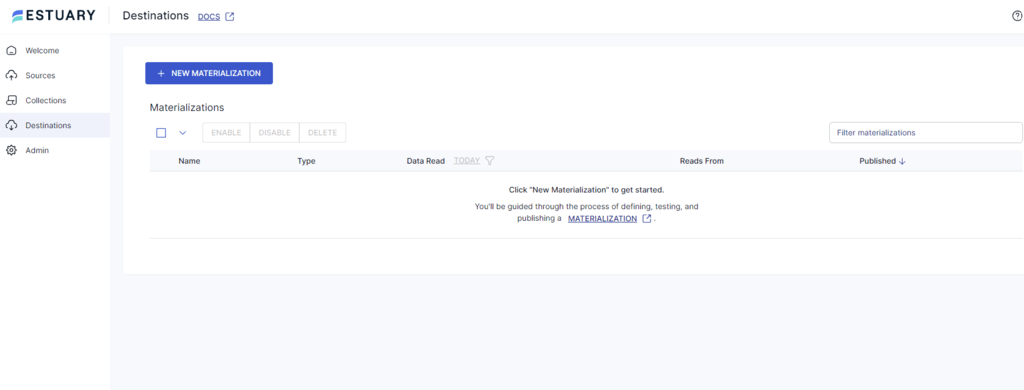

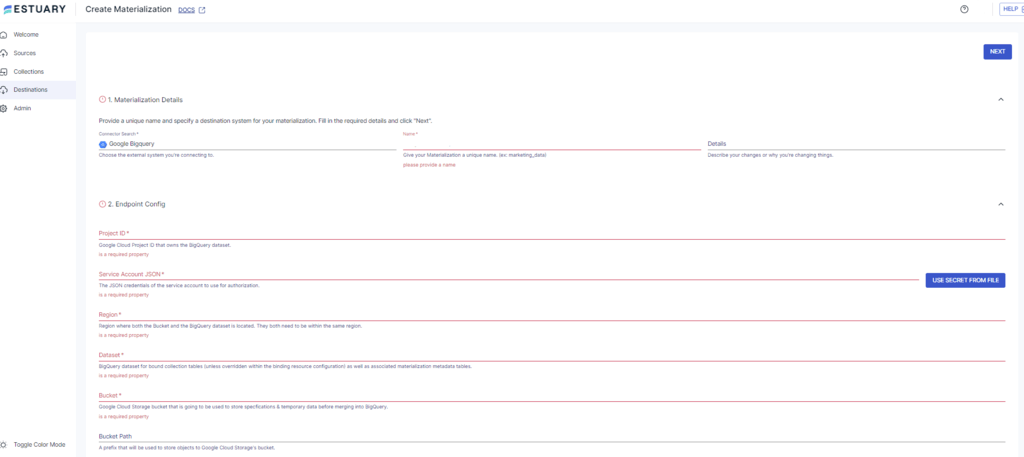

- To set up the destination, click on Destinations on the left side of the main dashboard.

- On the Destinations page, click on +NEW MATERIALIZATION.

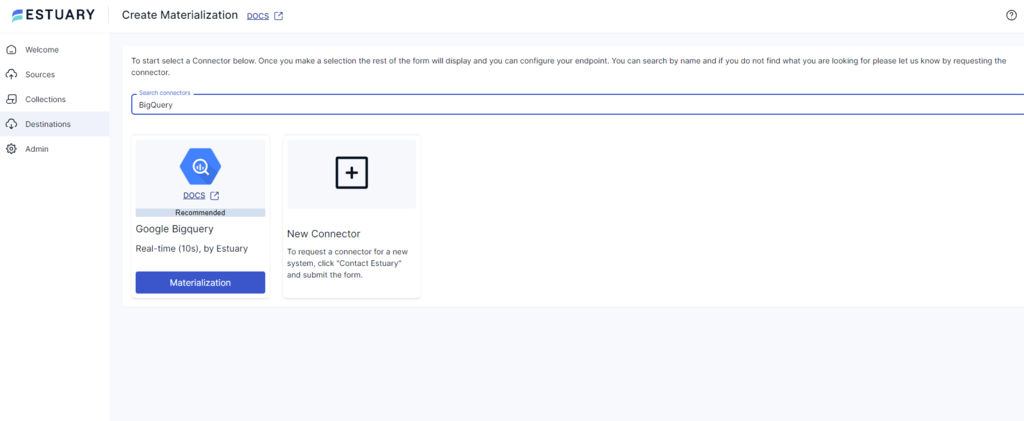

- In the Search Connectors box, type BigQuery. You will see the Google BigQuery connector with a Materialization button below it. Click on it.

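- On the Create Materialization page, enter details such as Name, Project ID, Service Account JSON, and Region. Click on Next. Make sure the collection from your webhook capture is selected as a source collection, then click on Save and Publish.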
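The Service Account JSON field expects the contents of a Google Cloud service account key file. Before pasting it in, you may want to check quickly that you copied the whole key. Below is a minimal standard-library sketch. The fields it checks are only a subset of the standard key format, and `check_service_account_json` is a hypothetical helper name. It cannot verify that the account has the BigQuery permissions the connector needs.

```python
import json

# A subset of the fields present in a Google Cloud service account key file
REQUIRED_KEYS = {"type", "project_id", "private_key", "client_email"}


def check_service_account_json(raw: str) -> list[str]:
    """Return problems found in a service account key; an empty list means it looks complete."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["expected a JSON object"]
    problems = [f"missing field: {name}" for name in sorted(REQUIRED_KEYS - key.keys())]
    # Only flag the type if it is present but wrong (a missing type is reported above)
    if key.get("type") not in (None, "service_account"):
        problems.append(f"unexpected type: {key['type']!r}")
    return problems


sample = '{"type": "service_account", "project_id": "my-project", "client_email": "svc@my-project.iam.gserviceaccount.com"}'
print(check_service_account_json(sample))  # ['missing field: private_key']
```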

As you can see, Estuary Flow makes it simple to set up a pipeline that loads webhook data into BigQuery. Let's look at some advantages of using Estuary Flow.

Advantages of Using Estuary Flow

- Simplified ETL: Estuary Flow simplifies the ETL process by offering a wide range of prebuilt connectors for data sources and destinations.

- Real-Time Data Streaming: Estuary Flow moves data continuously, so you always have access to the latest information. This saves time because you do not have to wait for batch processing cycles.

- Cost Reduction: Estuary Flow helps reduce business costs through its managed ETL and data integration services. You do not need to hire technical experts to spend hours building and maintaining custom integration code.

- Real-Time Monitoring: Estuary Flow gives you up-to-the-minute access to your data, so you can monitor and analyze it in real time. Spotting changes in key parameters quickly helps you extract valuable insights and make better decisions.

Have questions or need assistance? Join our Slack community or contact us today to get real-time support and guidance on integrating Webhooks with BigQuery using Estuary Flow!

Method 2: Using Custom Code to Load Data From Webhook to BigQuery

In this method, you will learn how to load data into BigQuery using Webhook with the help of custom code. The steps to achieve this integration are as follows:

Step 1: Install the Required Libraries

This method uses Python and Flask to receive webhook requests, and the Google Cloud client library for Python to interact with BigQuery. Begin by installing the necessary libraries.

```shell
pip install Flask google-cloud-bigquery
```

Step 2: Import Libraries and Set Up the Flask App

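The Flask web app serves as an HTTP server that receives incoming webhook requests. Create a Python file for your webhook receiver. Then make sure your Google Cloud credentials are available, for example by setting the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of a service account key file. Finally, set your BigQuery project, dataset, and table information.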

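```python
from flask import Flask, request, jsonify
from google.cloud import bigquery

app = Flask(__name__)

# Replace with your BigQuery project, dataset, and table details
project_id = "your-project-id"
dataset_id = "your-dataset-id"
table_id = "your-table-id"

# The client finds credentials via GOOGLE_APPLICATION_CREDENTIALS
# or your gcloud application-default login
client = bigquery.Client(project=project_id)

# Fully qualified table ID in "project.dataset.table" form
table_ref = f"{project_id}.{dataset_id}.{table_id}"

# Fetch the table (and its schema) once at startup; raises NotFound if it does not exist
table = client.get_table(table_ref)
```

Step 3: Create the BigQuery Handler and Webhook Endpoint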

Make sure the target table already exists in BigQuery with a schema that matches the incoming JSON. Then define the webhook endpoint that receives the JSON data and inserts it into BigQuery.

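```python
@app.route("/webhook-endpoint", methods=["POST"])
def webhook_handler():
    # Assuming the incoming data is a single JSON object;
    # silent=True returns None instead of raising on a bad body
    data = request.get_json(silent=True)
    if data is None:
        return jsonify({"status": "error", "message": "Expected a JSON body"}), 400

    try:
        # Insert the data into BigQuery as one row;
        # insert_rows_json returns a list of per-row errors (empty on success)
        errors = client.insert_rows_json(table, [data])
    except Exception as exc:
        # API-level failures (permissions, network, missing table, ...)
        return jsonify({"status": "error", "message": str(exc)}), 500

    if not errors:
        return jsonify({"status": "Data loaded successfully into BigQuery."}), 200
    return jsonify({"status": "error", "errors": errors}), 500


if __name__ == "__main__":
    # Starts Flask's development server on port 5000
    app.run(port=5000)
```

Replace your-project-id, your-dataset-id, and your-table-id in the setup code with your BigQuery project, dataset, and table IDs.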
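Webhook providers that retry failed deliveries can send the same event more than once, and the endpoint above would then insert it twice. One option is to pass a deterministic ID per row through the `row_ids` argument of `insert_rows_json`, which BigQuery uses for best-effort deduplication of streaming inserts. The sketch below assumes that two identical payloads represent the same event. If your provider includes an event ID, hashing that alone is a better choice. `row_id_for` is a hypothetical helper name.

```python
import hashlib
import json


def row_id_for(payload: dict) -> str:
    """Derive a stable ID from the payload so a redelivered event gets the same ID."""
    # sort_keys makes the serialization independent of key order
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Two deliveries of the same event, with keys in a different order
first = row_id_for({"id": 42, "status": "paid"})
retry = row_id_for({"status": "paid", "id": 42})
print(first == retry)  # True

# In the handler, the ID would be passed alongside the row:
# errors = client.insert_rows_json(table, [data], row_ids=[row_id_for(data)])
```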

Step 4: Run Flask Application

Next, run the Flask application. Adjust the port and deployment settings if needed.

```shell
python your_flask_app.py
```

Replace your_flask_app.py with the name of your Python script. Flask starts a development server on port 5000 by default. To receive webhooks from an external service during testing, expose the app to the internet with a tunneling tool such as ngrok:

```shell
ngrok http 5000
```

ngrok provides a public forwarding URL. For testing, update the webhook configuration in your source application to send events to that URL followed by the endpoint path, /webhook-endpoint.

Through this manual method, you can migrate your data to BigQuery using Webhooks. However, it requires you to have a sound knowledge of programming languages.

Limitations of Using the Manual Method

Let us look at some of the limitations of the manual method:

- Scalability Issues: Handling large data volumes is difficult with custom code. The endpoint above makes one BigQuery insert call per webhook request and runs on Flask's development server. To scale it to heavy traffic, you must add a production server, batching, and monitoring yourself; otherwise, the process may break down under load.

- Error Handling: With custom code, you must build retries, logging, and alerting yourself, and debugging failures can be time-consuming. This can hold up troubleshooting and, eventually, critical decision-making.

- Dependency on Developers: The manual method makes you highly dependent on software developers and technical experts. Without skilled developers, it becomes difficult to manage and optimize integration code, especially for large datasets.
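Here is one example of the extra work these limitations imply. The custom endpoint makes one BigQuery API call per webhook request, and a common mitigation is to buffer rows and insert them in batches. The class below is a hypothetical, single-threaded sketch of that idea, not part of any library. The `flush_fn` callable stands in for a call such as `client.insert_rows_json(table, rows)`.

```python
from typing import Callable


class RowBatcher:
    """Buffer incoming rows and hand them to `flush_fn` in batches of `batch_size`."""

    def __init__(self, flush_fn: Callable[[list], None], batch_size: int = 500):
        self.flush_fn = flush_fn
        self.batch_size = batch_size
        self.rows: list = []

    def add(self, row: dict) -> None:
        self.rows.append(row)
        if len(self.rows) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Send whatever is buffered, then start a fresh batch
        if self.rows:
            batch, self.rows = self.rows, []
            self.flush_fn(batch)


batches = []
batcher = RowBatcher(batches.append, batch_size=2)
for i in range(5):
    batcher.add({"n": i})
batcher.flush()  # flush the leftover row, e.g. on a timer or at shutdown
print([len(b) for b in batches])  # [2, 2, 1]
```

Even this small step adds new concerns you would have to handle yourself. Buffered rows are lost if the process crashes before a flush. The endpoint may acknowledge an event before it reaches BigQuery. A multi-threaded server would also need locking around `rows`.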

Get Integrated

To wrap up, we've covered two methods for loading data into BigQuery via webhooks: using a no-code SaaS tool like Estuary Flow, and a manual method based on custom code. Both methods can migrate your data, but it's important to consider the time and effort the manual method requires.

Flow’s user-friendly interface, various in-built connectors, and streaming data capabilities make it a reliable and cost-effective tool to build robust data pipelines. What’s more, it only takes a few minutes to set up.

Empower your data integration journey with Estuary Flow. Sign up now to connect any two supported platforms in just a few clicks!

FAQs

When should you use Webhooks?

Webhooks are best suited for scenarios requiring real-time communication or automatic triggering of actions in response to events in another system. They are commonly used for sending instant notifications, synchronizing data between applications, or automating workflows based on specific events.

Is BigQuery similar to AWS?

BigQuery is not comparable to AWS as a whole, but it is similar to Amazon Redshift, AWS's cloud data warehouse. Like Google BigQuery, Redshift lets you use SQL queries to analyze large datasets.

Is BigQuery free to use?

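Not entirely. BigQuery offers a free sandbox environment that lets you explore its capabilities without providing credit card information. It also has a free tier that includes 10 GiB of storage and 1 TiB of query processing per month. Usage beyond that is billed.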
