Welcome to the comprehensive guide to Postgres Change Data Capture (CDC)! Whether you're a business leader, data engineer, or IT professional, this guide will provide you with the knowledge you need to make informed decisions about implementing CDC for your PostgreSQL databases.

This resource is designed to support a wide range of data-focused and data-adjacent professionals who meet two simple requirements:

- You’re part of an organization that uses the relational database PostgreSQL, or intends to migrate to it.

- You’re aware of the industry buzz around change data capture (CDC) and want to learn more.

PostgreSQL Change data capture (CDC): Who Should Read This Guide?

Business leaders: you need a no-nonsense foundation to make decisions. We cover the essentials of Postgres and CDC, plus the pros and cons of different approaches.

Data engineers and IT professionals: you intimately know the systems you work with, but figuring out the best changes to make can be time-consuming. Here you’ll find a succinct intro to a range of Postgres CDC methodologies.

Implementing a new data architecture or strategy requires understanding and buy-in from a variety of teams that may not work together day-to-day. Having a common starting point can make the collaborative process easier. That’s what this guide is for.

Why Choose PostgreSQL for Change Data Capture (CDC)?

PostgreSQL, also known as Postgres, is a popular open-source object-relational database. It’s completely free, but it’s also an extremely stable enterprise-class system backed by over 25 years of open-source development.

Other popular relational databases include Oracle, MySQL, SQLServer, and DB2. So why use Postgres specifically? Here are a few reasons.

- As mentioned above, it’s free — no matter what you choose to do with it.

- Postgres is known for being extensible and adaptable. It’s open-source, allowing developers to easily integrate it into new projects. It also uses standard SQL, which means it’s less likely to have conflicts with other systems. Plus, it supports user-defined data types.

- It’s highly fault-tolerant, secure, and ACID compliant, meaning transactions are guaranteed to be valid in the face of errors and downtime.

- It goes beyond standard SQL capabilities. Queries in both SQL and JSON are supported, as well as a wide variety of data types, even within the same table.

- Postgres is well-suited for CDC workflows.

One of Postgres’s most popular implementations is as the primary transactional database for an enterprise. That is, it acts as the quick, robust system of record that powers products and applications, and tracks user information.

For example, consider an online store. A PostgreSQL database would be used to store customer data, track inventory, and process sales. It’s well-suited to these workflows because it’s a row-based relational database.

Though Postgres excels at certain jobs, it’s highly unlikely for it to stand alone. Relational databases like Postgres are just one component of a modern data stack. They’re simply not optimal for all workflows.

The most obvious example of this is analytics.

Let’s return to our online store example. To succeed in e-commerce, you need data analysis to evaluate performance, market correctly, plan inventory, and more. While Postgres would handle the moment-to-moment operations, it’s poorly suited for these analytical workflows. That’s where a data warehouse comes in. Data warehouses are a complimentary type of data storage optimized for analytics, but poorly suited for transactional workflows.

To learn more about different types of data storage, see this post.

What is Postgres CDC and Its Implementation Benefits?

Postgres Change Data Capture (CDC) refers to the process of capturing and recording changes made in a PostgreSQL database in real-time or in batches, allowing for seamless data synchronization across systems.

In most data architectures, your Postgres database will be your most up-to-date data storage system. But you’ll almost certainly have other storage systems, like data warehouses, which in turn power analytical applications.

This presents a classic data integration problem. You need to get the latest data from Postgres to all your other systems in a way that’s:

- Timely, in a world that increasingly demands up-to-the-minute information

- Reliable and able to withstand errors and downtime

- Minimally harmful to performance, even as your data grows to terabyte scale

When implemented correctly, change data capture can meet all these requirements.

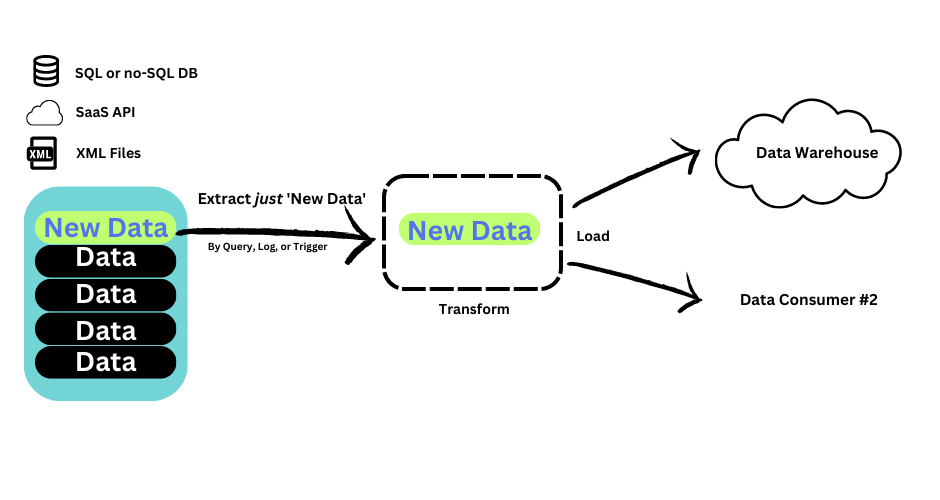

Change data capture is the process of recognizing a change in a source data system so that a downstream system can act on that change, usually by updating a target system to reflect new information.

Postgres is one of the most commonly requested source databases for CDC. Fortunately, it’s also well suited to it.

- For database administrators, simpler CDC methods are easy to set up using SQL alone.

- Advanced, real-time CDC methods are also attainable thanks to Postgres’ write-ahead log (WAL).

In the next section, we’ll cover these methods in more depth.

What Are the Different PostgreSQL Change Data Capture (CDC) Methods?

Most of the current change data capture buzz is around log-based, real-time CDC. In this type of CDC setup, data changes from Postgres are reflected in the targets within seconds or milliseconds. Real-time CDC is event-driven, scales better, and has been challenging — at least historically.

However, CDC has no temporal requirement by definition. This means that CDC methods can be batch or real-time.

Compared to real-time CDC, batch methods introduce latency and can have a negative performance impact on the database. However, they are easier to implement and may be sufficient for your needs.

In the following sections, we’ll describe three approaches that fall into both categories:

- CDC using queries (batch)

- CDC using triggers (batch)

- CDC using logs (real-time)

Each method for implementing Postgres CDC has its own advantages and trade-offs. Below is a comparison table to help you understand the key differences:

| Method | Latency | Performance Impact | Ease of Setup |

|---|---|---|---|

| CDC using Queries (Batch) | High | Low | Easy |

| CDC using Triggers (Batch) | Medium | Medium | Moderate |

| CDC using Write-Ahead Log (WAL) (Real-time) | Low | Low | Complex |

Queries for Postgres Change Data Capture

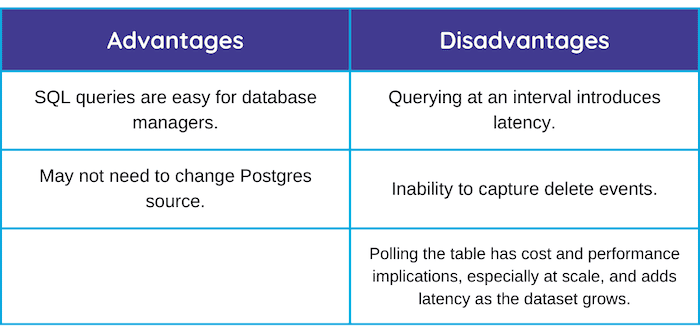

SQL queries are a simple method for batch CDC in Postgres that can be set up using your familiar Postgres client: either a GUI like pgAdmin, or the psql CLI.

For this to be possible, the source table’s schema needs to have a column with a timestamp or version number indicating when it was last updated. If it doesn’t, you’re better off using the trigger method described below.

Your SQL query will look something like:

plaintextSELECT * FROM my_table WHERE time_updated > time_last_query’;Because the query is run against existing records, this method can’t detect DELETEs.

Postgres doesn’t have a built-in task schedule, so you’ll have to use an external cron tool or other scheduler utility to run the query.

At this point, these selected changes need to be written somewhere, and ultimately make it to the target system, which presents another challenge. You’ll need to create a bespoke ETL pipeline or set up an event-based framework like Kafka Connect.

Triggers for Postgres Change Data Capture

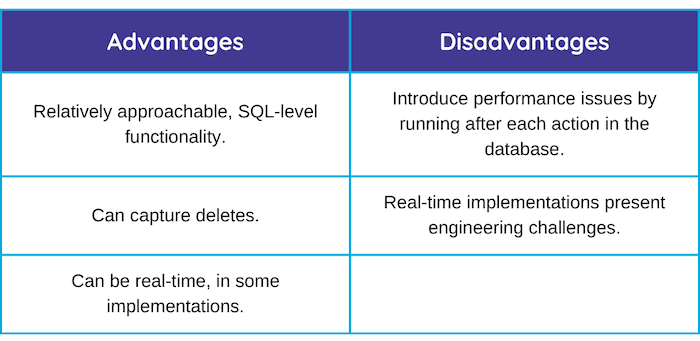

Postgres allows you to create triggers: functions that listen for insert, update, and delete operations. The trigger function can then write those new changes to a second table, which acts as a changelog.

The Postgres community has a generic trigger function that does exactly this. Its documentation can be found here.

To run this function, you’d use the query:

plaintextSELECT audit.audit_table('target_table_name');… where target_table_name is the table from which you want to capture changes.

At this point, you have a log of the changes, but it’s still inside Postgres. Once again, you have to move them to the destination system.

From there, you’ll face the same challenge as when using queries: either building or purchasing a pipeline solution to write the changes to the target system.

Triggers also have a significant performance impact on the database. A good practice is to run the trigger against a replica of the main table, which adds another layer of complexity.

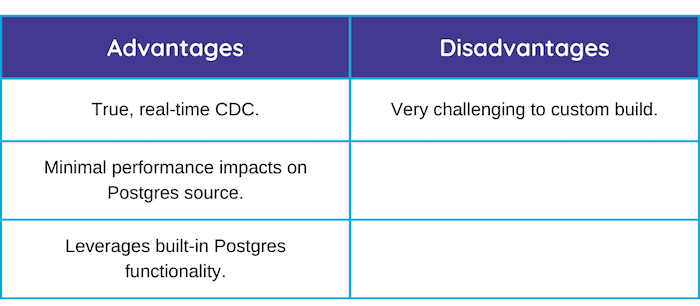

Real-time Postgres CDC Using the Write-Ahead Log (WAL)

Both queries and triggers are methods of tracking change events in Postgres that introduce latency and can bog down performance.

But there’s another way. You can capture changes in real-time by taking advantage of Postgres’ built-in features.

The write-ahead log (WAL) makes note of all changes that have occurred in the database. It’s a transaction log designed mostly for recovery purposes and as insurance for data integrity. Its characteristics make it ideal for event-based CDC — what many have come to think of as true CDC.

The WAL is an authoritative source of the data state. You can work with the settings and features that surround it to power real-time CDC.

(Hint: Refer to out the terminology section below for full definitions of Postgres terms used here.)

Steps for Setting Up Logical Replication for Postgres CDC

Step 1: Set 'wal_level' to Logical

- Ensure the WAL level is set to logical to capture the necessary detail for logical replication.

plaintextALTER SYSTEM SET wal_level = logical;

- Explanation: This command adjusts the WAL level in PostgreSQL to ensure the database captures the logical changes required for replication.

Step 2: Ensure the Database User Has Replication and Read Privileges

plaintextALTER ROLE your_user_name WITH REPLICATION;

GRANT pg_read_all_data TO your_user_name; -- Useful for Postgres 14+

- Explanation: The first command grants the necessary replication privileges to the user. The second command grants read access to all tables, which is important for logical replication, especially in PostgreSQL 14 and later.

Step 3: Ensure the Source Tables Have a Primary Key or Set REPLICA IDENTITY FULL

All tables involved in logical replication should ideally have a primary key. If a table lacks a primary key, you can set REPLICA IDENTITY to FULL, but be aware of the performance implications.

plaintextALTER TABLE your_table_name REPLICA IDENTITY FULL;

Step 4: Create a Publication for the Source Tables

plaintextCREATE PUBLICATION my_publication FOR TABLE my_table;

- Explanation: This creates a publication, which is a set of changes from the source tables that you want to replicate.

Step 5: Perform Logical Decoding

Logical decoding converts the WAL into a format that can be consumed by external systems, such as JSON, SQL, or Protobuf. For this, you might need to use or install a plugin like wal2json or pgoutput (which is built into Postgres 10+).

Example to load the wal2json plugin:

plaintextLOAD 'wal2json';

- Explanation: Plugins like wal2json or pgoutput help in decoding the WAL into a format that can be easily consumed by downstream systems.

Step 6: Set Up a Messaging Queue or Event Bus

Once logical decoding is in place, you can set up a messaging queue or event bus to relay the decoded event logs to the target system. This might involve setting up a Kafka topic, an event bus like RabbitMQ, or a custom implementation.

Example setup with Kafka might look like:

plaintextkafka-console-producer.sh --broker-list localhost:9092 --topic my_topic

- Explanation: This step involves routing the CDC data from the PostgreSQL source to a messaging system like Kafka, where it can be consumed by other services or databases.

Key Features and Terms for Postgres Change Data Capture

Real-time Postgres CDC requires you to leverage some of Postgres’s defining features and components. Here’s a quick reference:

Write-ahead log (WAL):

The write-ahead log, or WAL, is a transaction log used in Postgres to efficiently protect against failure and provide a backup when failure does occur.

There is a gap of time between when the database agrees to complete a transaction, and when the change is written to disk. To fill in that gap, all intended changes are written to the WAL immediately. If the database were to crash, it could use the WAL to pick up where it left off. The WAL is what makes Postgres ACID compliant.

The WAL also makes Postgres more efficient by eliminating the need to write to disk for each transaction.

The WAL is always present, but you have some control over it in your database. For example, the wal_level parameter controls the level of detail that this written there. When wal_level is set to logical, you can use it for logical decoding.

Logical decoding:

Logical decoding is the process of streaming database changes to external consumers in a format that other applications can use without having to be aware of the database state. They are reflected in basic SQL terms: as INSERTs, UPDATEs, and DELETEs.

This is done by reading the WAL.

Logical replication:

Logical replication is a Postgres feature built on logical decoding. Principally, its function is to allow replication across Postgres versions. In practical terms, it powers the flexible streaming output that CDC requires.

You can take advantage of logical replication with a custom connector, or a plugin such as wal2json, which translates changes from the WAL into JSON. You can also decode the output of Postgres’ default plugin, pgoutput.

Replication slot:

Each individual stream of changes created through logical replication is called a replication slot.

Replica identity:

This is a setting applied to tables that determines how much detail is written to the WAL for UPDATEs and DELETEs. Some CDC approaches recommend setting replica identity to FULL to ensure all necessary information is present in the WAL, but a safer approach is to ensure that all tables have a primary key.

Publication:

A publication represents the change set generated from one or more tables. It’s required for logical replication. It’s created with the create_publication command. In most cases, you’ll create one publication for all tables.

Why Consider Managed CDC Solutions?

Most of the real work happens at step six: these systems are notoriously hard to set up. Capturing the decoded change events into a Kafka topic, for example, is not a simple plug-and-play solution.

Fortunately, platforms and services are becoming available to make this method more approachable. These are more user-friendly systems built atop complex, unopinionated event buses.

The open-source platform Debezium paved the way to making log-based CDC approachable. Debezium uses Apache Kafka as an event bus, and offers out-of-the-box connectors to popular sources, including Postgres.

Many other Postgres CDC services are becoming available that make the user experience even simpler. Some leverage Debezium as their open-source foundation; others use a different architecture.

When you’re evaluating managed CDC services and open-source platforms, consider your individual needs, including:

- How much data engineering work you can take on.

- The size and shape of your source data.

- The type of destination system you need to move the data to.

Let’s take a closer look at one managed CDC service: Estuary Flow.

Estuary Flow for Real-Time PostgreSQL Change Data Capture

Estuary’s DataOps platform, Flow, includes a connector for Postgres CDC.

So, what does that exactly mean?

Flow is a centralized platform for building real-time data pipelines that connect a variety of source and destination data systems using open-source connectors. But unlike a typical ETL platform, Flow has an event-driven runtime, allowing true, real-time CDC.

When you use Flow’s Postgres source connector, Flow acts as a log-driven, real-time CDC pipeline.

After running some basic configuration on your database, as per the steps above, the Postgres connector essentially performs steps 5 and 6. It captures change events from the WAL and writes them to a Flow data collection. Cloud-storage-backed collection data is streamed through the Flow runtime, which is built on Gazette, an open-source streaming broker that was also built by Estuary’s engineering team.

Your data collections captured from Postgres are automatically backed by a data lake in cloud storage. From there, they can be streamed to one or more target systems using any available materialization — or destination — connector. This occurs with millisecond latency.

Key Features of Estuary Flow for Postgres CDC:

- High degree of customization using the intuitive web app or the CLI. Add additional data sources or multiple destinations to your CDC pipeline, and combine or separate streams of data without ever having to directly engineer the streaming broker.

- Ability to add transformations without an additional tool: aggregations, joins, and stateful stream transformations are all possible.

- Minimize performance impact of historical backfills. Backfilling data from Postgres tables is important for data integrity, even in event-driven CDC, but re-reading large tables can cause performance impacts or even errors like data duplication. Flow avoids having to re-read the whole Postgres table by writing a checkpoint of where it left off in that source table.

Looking for something else? Check out our CDC Guides for other systems:

Conclusion

PostgreSQL Change Data Capture (CDC) is a powerful method to ensure that your data stays synchronized across multiple systems in real-time, enhancing the reliability and efficiency of your data architecture. Whether you opt for query-based, trigger-based, or log-based methods, each has its unique benefits and trade-offs that can be tailored to your specific use case.

As businesses continue to demand real-time insights and seamless data integration, mastering CDC in PostgreSQL becomes increasingly crucial. By choosing the right CDC method, you not only improve data consistency but also position your organization to better handle the challenges of a dynamic data environment.

Ready to Implement PostgreSQL CDC? Explore Estuary Flow’s real-time, scalable CDC solution to effortlessly capture and stream changes from PostgreSQL to any destination system. Sign up today for a free trial and take the first step towards revolutionizing your data pipelines.

Additional Resources

Estuary resources:

- Quick Postgres CDC demo in Flow

- Blog series on CDC

- Flow documentation

- Flow and the Postgres CDC connector code on GitHub

- Get started with Flow today

Other resources:

- PostgreSQL documentation for topics mentioned: triggers, WAL, logical decoding.

- Custom Postgres CDC use-cases: Devoted Health, Netflix

Author

Popular Articles