Streaming data integration is a crucial process if you want to base your decisions on data coming from multiple sources in real time. It unifies the data from all sources into a single stream, which makes it easier for you to understand your company’s performance, predict outcomes, and make better decisions.

From powering real-time reporting tools and updating customer-facing apps to supplying up-to-date data for machine learning algorithms, real-time data integration has diverse uses across industries. But it’s not always easy to implement. It can be challenging to choose a flexible and scalable integration approach or to handle complex data types and formats.

This guide will help you understand streaming data integration and how you can use it. We’ll talk about how it compares to data ingestion and help you figure out when to use each one. We’ll also guide you on how you can set up your own data integration pipeline and explore ready-made solutions available.

By the time you are done reading this 15-minute guide, you will have a clear roadmap for integrating streaming data into your business.

Understanding Data Integration For Driving Business Transformation

Data integration is the process of bringing together data from different sources into one place. It requires a flexible architecture – one that can adapt to various data sources, formats, and sizes. But it generally involves 3 main steps:

- Collection: This is the first step where data is gathered from various sources. These sources can be databases, log files, IoT devices, or other real-time data streams.

- Transformation: Once the data is collected, it needs to be transformed into a format that can be easily understood and used. This involves changing the data structure, cleaning up the data, or converting it into a standard format.

- Loading: The final step is to load the transformed data into a central system. This system is often a database or a data warehouse where the data can be accessed and analyzed.
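To make the three steps concrete, here is a minimal sketch in Python that uses only the standard library. The two inline sources, their field names, and the `orders` table are made up for illustration; a real pipeline would read from live systems instead.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs standing in for two different sources.
CSV_SOURCE = "order_id,amount\n1, 19.99 \n2,5.00\n"
JSON_SOURCE = '[{"id": 3, "total": "42.50"}]'


def collect():
    """Collection: gather raw rows from each source, tagged by origin."""
    for row in csv.DictReader(io.StringIO(CSV_SOURCE)):
        yield "csv", row
    for row in json.loads(JSON_SOURCE):
        yield "json", row


def transform(origin, row):
    """Transformation: map each source's fields onto one standard shape."""
    if origin == "csv":
        return {"order_id": int(row["order_id"]), "amount": float(row["amount"].strip())}
    return {"order_id": int(row["id"]), "amount": float(row["total"])}


def load(conn, records):
    """Loading: write the standardized records into a central table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (:order_id, :amount)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, [transform(origin, row) for origin, row in collect()])
rows = conn.execute("SELECT order_id, amount FROM orders ORDER BY order_id").fetchall()
```

Both sources end up in the same table with the same column names and types, which is the point of the transformation step.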

Why is Data Integration Important?

Here are some key reasons:

- Efficiency: Data integration automates the process of collecting and transforming data, which saves valuable time and resources.

- Real-time Insights: With streaming data integration, you can get insights in real time. This means you can react to changes quickly and make decisions based on the most current data.

- Complete View: By bringing together data from multiple sources, you get a more comprehensive understanding of your business.

- Unified View of Data: With integrated data, you can see patterns, trends, and insights that you might miss if the data were scattered across different systems. This helps in making better decisions.

- Scalability: Many data integration systems are designed to handle large amounts of data. As your business grows and your data volume increases, a good data integration system will scale with you.

Batch Integration vs. Real-Time Integration

Data integration can occur in 2 ways:

- Batch integration in which data is extracted, cleaned, transformed, and loaded in large batches.

- Real-time integration in which the data integration processes are applied to time-sensitive data in real time.
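The difference between the two modes can be sketched in a few lines of Python. This is a simplified illustration, not a production design: `process` is a placeholder for whatever cleaning and transformation your pipeline performs, and the event strings are invented.

```python
from itertools import islice


def process(record):
    """Stand-in for cleaning and transforming one record."""
    return record.strip().lower()


def batch_integrate(source, batch_size):
    """Batch: accumulate records, then process and emit them as a group."""
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield [process(r) for r in batch]


def realtime_integrate(source):
    """Real-time: process and emit each record as soon as it arrives."""
    for record in source:
        yield process(record)


events = [" Login", "PURCHASE ", "logout", " Login "]
batches = list(batch_integrate(events, batch_size=3))
stream = list(realtime_integrate(events))
```

The processing logic is identical in both cases. What changes is when results become available: after a full batch has accumulated, or per record.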

Comparing Data Integration & Data Ingestion: Unraveling The Differences

Data Integration vs. Data Ingestion: Key Differences

Data integration and data ingestion are both involved in transferring data, yet they’re not quite the same. To grasp these differences, we first need to understand what data ingestion is.

What is Data Ingestion?

Data ingestion is the process of collecting raw data from various sources and moving it into centralized locations such as databases, data warehouses, or data lakes. Note that data ingestion does not involve any modifications or transformations to the data. It simply moves the data from point A to point B.

Data ingestion can occur in 3 ways:

- Batch data ingestion: In this, data is collected and transferred periodically in large batches.

- Streaming data ingestion: This is the real-time collection and transfer of data and is perfect for time-sensitive data.

- Hybrid data ingestion: This is a mix of batch and streaming methods, accommodating both kinds of data ingestion.

4 Key Differences Between Data Integration & Data Ingestion

Now let’s see how data integration and data ingestion differ from each other.

- Complexity: Data ingestion pipelines aren’t as complex as data integration pipelines, which involve additional steps like data cleaning, managing metadata, and governance.

- Data Quality: Data ingestion does not automatically ensure data quality. On the other hand, data integration enhances data quality through transformations like merging and filtering.

- Data Transformation: Data ingestion involves moving raw data without any transformations. In contrast, data integration involves transforming the data into a consistent format.

- Expertise Required: Data ingestion is a simpler process and doesn’t require extensive domain knowledge or experience. However, data integration demands experienced data engineers who can script the extraction and transformation of data from various sources.

Learn more: Data Ingestion vs Data Integration

Choosing the Right Approach for Your Needs

The choice between data ingestion and data integration depends on your specific needs. Consider the following before making a decision:

- Use data integration when you need to ensure data quality and want your data in an analysis-ready form. It’s ideal when you need to merge, filter, or transform your data before loading it into the central system.

- Use data ingestion when you need to quickly move large volumes of raw data from various sources to a central repository. It’s ideal when you don’t require any transformations or modifications to the data.

Streaming Data Integration: Step-By-Step Guide

Setting up robust, efficient, and reliable streaming data pipelines can be challenging. To help, we will walk you through 2 different approaches to creating a streaming data integration pipeline: using a pre-built DataOps solution like Estuary Flow, and building the pipeline from scratch.

The Easy Way: Using Estuary Flow (with Tutorial)

Let’s look at the easy way first. This is where you use Estuary Flow’s pre-built solution for data integration. It is designed to make data integration much simpler and takes care of the hard parts for you so you can focus on getting the best out of your data.

Estuary Flow is a real-time Extract, Transform, Load (ETL) tool that can scale to match your growing data demands. Here are the concrete features it offers:

- Built-In Testing: Estuary Flow comes with built-in schema validation and unit testing features that ensure your data is as accurate as possible.

- Scalability: Regardless of your company’s size, Estuary Flow can scale to meet your needs. It can power active workloads at 7GB/s CDC from any size database.

- Reliability & Control: Estuary Flow has a proven, fault-tolerant architecture and provides built-in, customizable schema controls for safeguarding your data products.

- Data Transformation: Estuary Flow can modify and process data in real time using streaming SQL and TypeScript transformations. This makes it one of the fastest data integration platforms available.

- Lots of Connectors: Flow provides built-in database connectors, real-time SaaS integration, and support for the Airbyte protocol for batch-based connectors. It also allows you to easily add connectors through its open protocol.

- Real-Time Data Capture: Estuary Flow captures new data instantly from all kinds of data sources such as cloud storage, databases, and Software as a Service (SaaS) apps. It lets you move and transform your data quickly, without any scheduling.

Step-By-Step Guide To Data Integration Using Estuary Flow

Here’s how you can use Flow to handle real-time data integration from different sources and then send it to new data storage:

Getting Data From Different Sources

Flow can get data from different places and each of these needs its own Flow capture. To make a new capture:

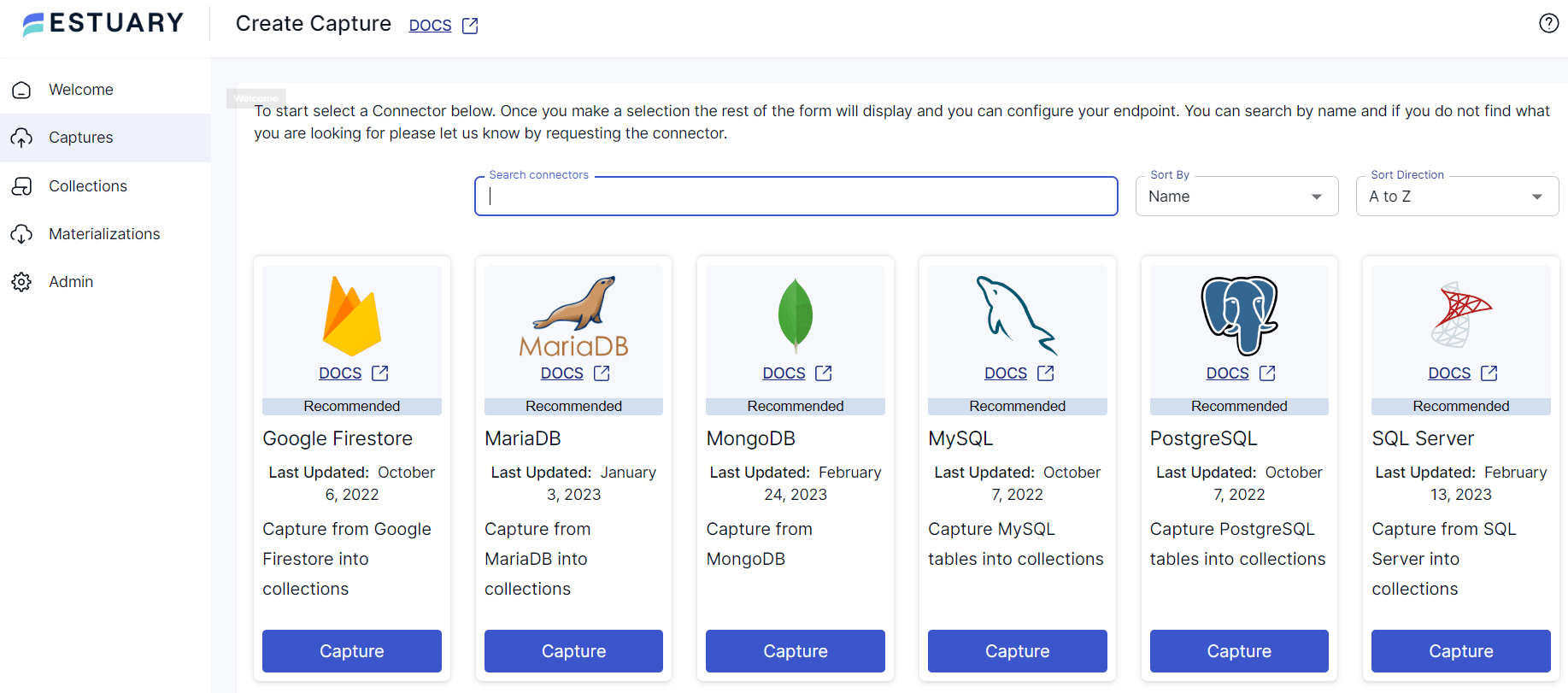

- Open the Flow web app and go to the ‘Captures’ tab. Then, click on ‘New capture’.

- From the list of connectors, pick the one for your data source.

- Fill in the details it asks for. This could be things like server details or login info, depending on where you’re getting the data from.

- Name your capture and click ‘Next’.

- In the Collection Selector, pick the collections you want to capture.

- When everything looks good, click ‘Save and publish’.

TIP: If Flow doesn’t have a connector for where you’re getting data from, you can request a new connector.

Transforming Captured Data

Flow can make changes to your data as it comes in from different places. This is done through Derivations. Here are some of the things you can do:

- Filtering: You can create a derivation that selectively filters documents from the source collections based on certain conditions. For example, you might only be interested in transactions above a certain value or events that occurred within a specific time range.

- Aggregation: You can even summarize your data. You can count things, add them up, or find the smallest or biggest values, and you can do this with all your data, or just parts of it.

- Joining: You can join multiple source collections together in a single derivation. This is similar to a SQL join and can be used to combine data that share a common key.

- Time Window Operations: Flow supports operations over time-based windows of data. For example, you can calculate a moving average or identify trends over a specified time window.

- Complex Business Logic: You can implement custom business logic in a derivation using SQL or TypeScript. This could involve computations using values from different fields or documents, conditional logic, or other complex transformations.
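To illustrate what filtering and time-window aggregation mean in practice, here is a conceptual sketch in plain Python. It is not Flow’s derivation syntax (Flow derivations are written in SQL or TypeScript), and the document fields `account`, `amount`, and `ts` are assumptions chosen for the example.

```python
from collections import defaultdict

# Hypothetical transaction documents; field names are assumptions.
transactions = [
    {"account": "a", "amount": 120.0, "ts": 5},
    {"account": "b", "amount": 15.0, "ts": 12},
    {"account": "a", "amount": 80.0, "ts": 42},
    {"account": "a", "amount": 200.0, "ts": 65},
]


def filter_large(docs, minimum):
    """Filtering: keep only documents at or above a threshold."""
    return (d for d in docs if d["amount"] >= minimum)


def windowed_totals(docs, window_seconds):
    """Aggregation over tumbling time windows, keyed by account."""
    totals = defaultdict(float)
    for d in docs:
        # Round the timestamp down to the start of its window.
        window_start = (d["ts"] // window_seconds) * window_seconds
        totals[(d["account"], window_start)] += d["amount"]
    return dict(totals)


totals = windowed_totals(filter_large(transactions, minimum=50.0), window_seconds=60)
```

Here the small transaction for account `b` is filtered out, and the remaining amounts are summed per account within each 60-second window.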

Here’s how to make a new derivation:

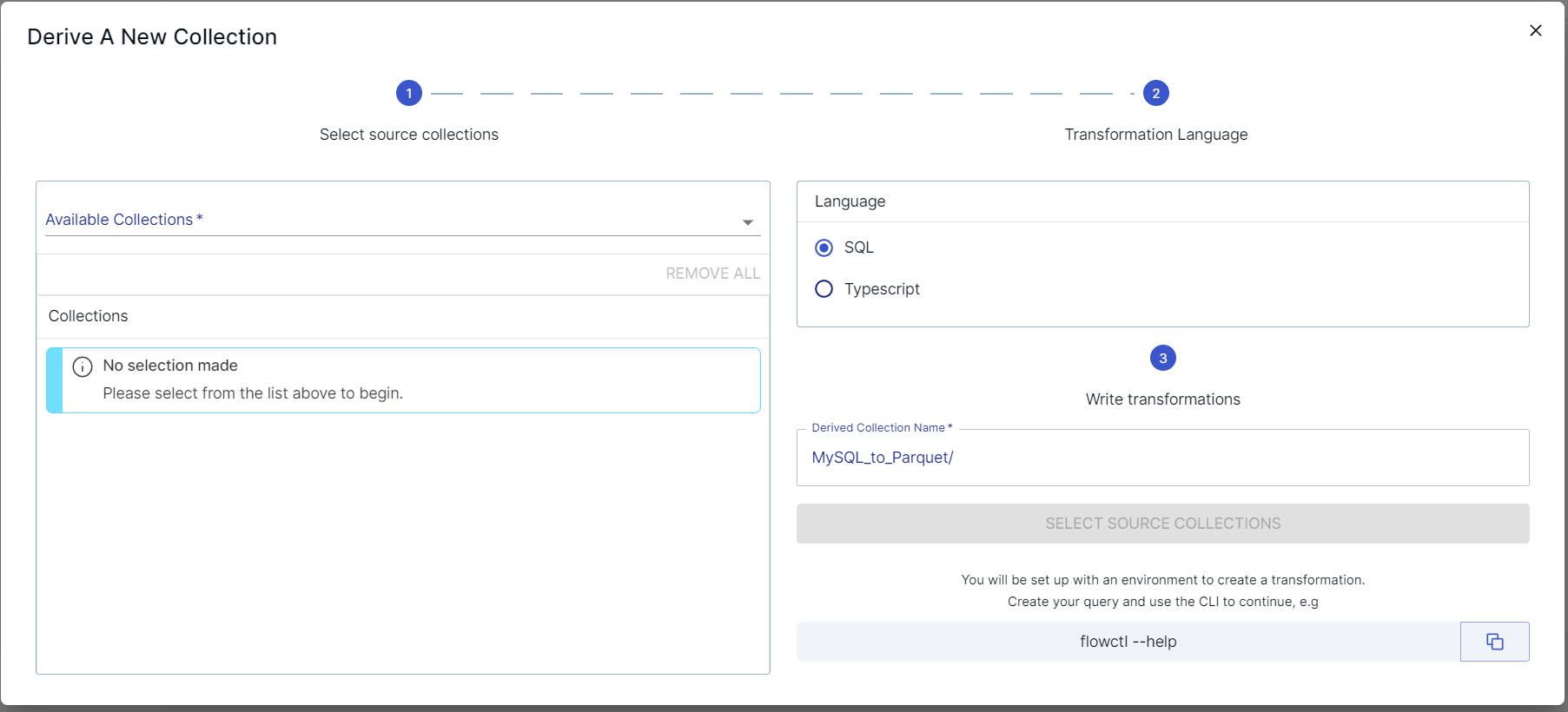

- Go to the ‘Collections’ tab and click on ‘New derived collection’.

- Choose the source collections for your transformation.

- Give a name to your derived collection.

- Write the transformation logic in SQL or TypeScript. This is the code that does the actual data transformation.

- When you’re done, click ‘Save and publish’.

Sending Data To Its Destination

Once your data is captured and transformed, it’s time to send it to its final destination. To do this:

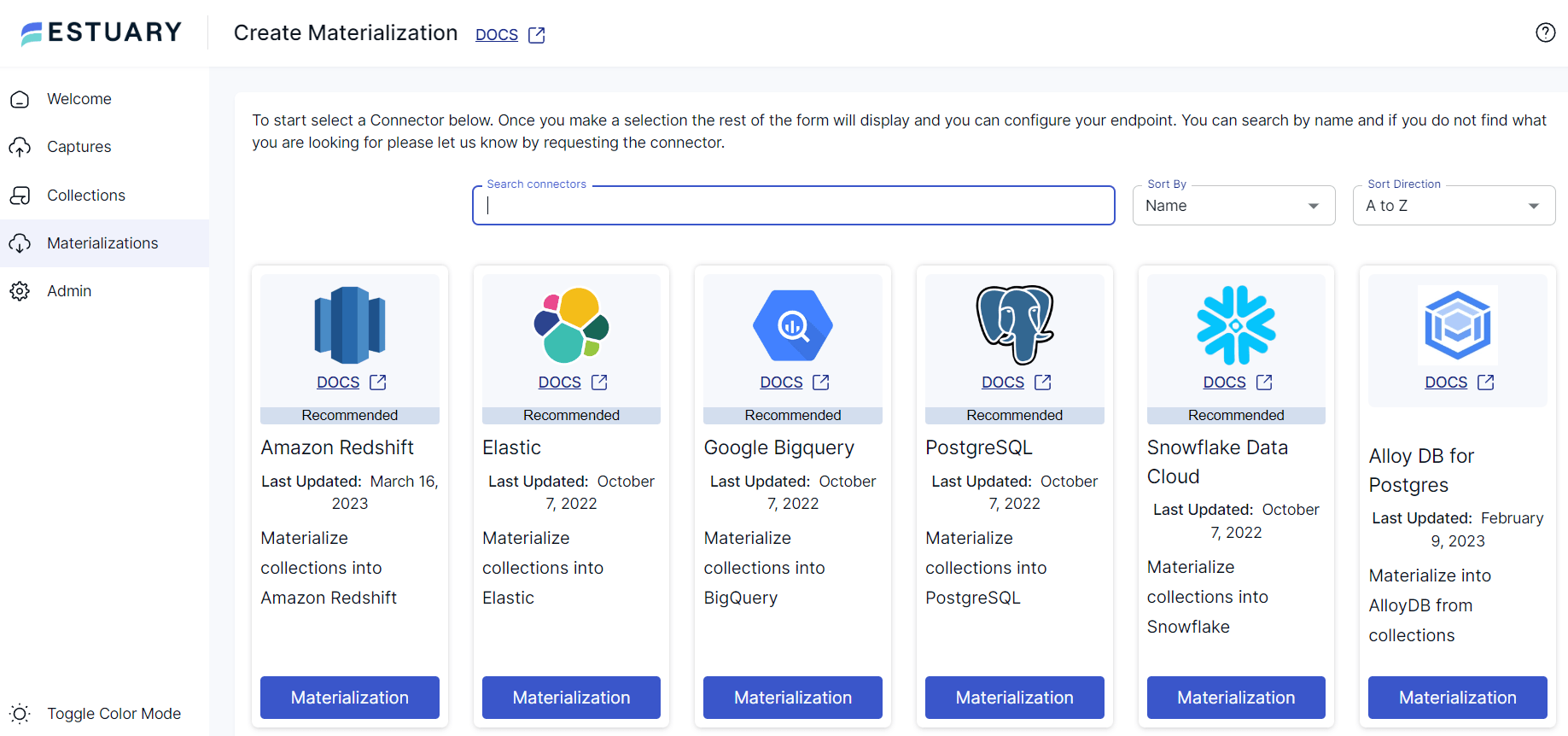

- Go to the ‘Materializations’ tab and click on ‘New materialization’.

- Pick the connector you want to use.

- Fill in the details it asks for. This will usually be things like account info and database name and location.

- Name your materialization and click ‘Next’.

- The Collection Selector will show the collections you've captured and transformed.

- When everything is set up right, click ‘Save and publish’. You’ll get a notification when everything is done.

Other Data Integration Tools to Consider

Let’s take a look at some other leading data integration tools.

Striim

Striim is a dynamic data management tool specializing in real-time data movement and transformation. Here is what Striim has to offer:

- Data Pipeline Monitoring: With Striim, you can visualize your pipeline health, data latency, and table-level metrics through REST APIs.

- Analytics Acceleration: Striim ensures seamless integration with popular analytics platforms like Google BigQuery, Azure Synapse, Snowflake, and Databricks.

- Streaming SQL & Stream Processing: Built on a distributed, streaming SQL platform, Striim can run continuous queries and transformations on stream data and join it with historical caches.

- Intelligent Schema Evolution: Striim captures schema changes and lets you decide how each consumer propagates the change, with an option to halt and alert when manual resolution is needed.

- Choice of Deployment: Striim allows for flexible deployment. You can choose Striim Cloud, a fully managed SaaS on AWS, Azure, and Google Cloud, or the Striim Platform, which is self-managed and available either on-prem or in AWS, Azure, and Google Cloud.

Talend

Talend Data Fabric is a comprehensive data management solution that thousands of organizations rely on. It enables end-to-end data handling, from integration to delivery, and connects to over 1,000 different data sources and destinations.

Here’s a simple breakdown of Talend’s key features:

- Easy to Use: Talend’s simple drag-and-drop interface lets you quickly build and use real-time data pipelines. It’s up to 10x faster than writing code.

- Data Quality: Talend keeps your data clean by checking it as it moves through your systems. This helps you spot and fix issues early and ensures that your data is always ready to use.

- Works with Big Data: Talend can manage large data sets and integrate well with data analytics platforms like Spark. It’s also compatible with leading cloud service providers, data warehouses, and analytics platforms.

- Flexible and Secure: Talend can be deployed on-prem, in the cloud, in a multi-cloud setting, or a hybrid-cloud setup. It allows you to access your data wherever it’s stored, even behind secure firewalls or in secure cloud environments.

Integrate.io

Integrate.io is a dynamic and user-friendly data integration platform built to streamline your data processes, creating efficient and reliable data flows for automated workflows. Here’s what it brings to your data management toolkit:

- Robust API: The powerful API provided by Integrate.io allows for the creation of custom data flows and integrations.

- Easy no-code UI: It provides a simple drag-and-drop builder capable of making real-time data integration solutions.

- Loads of connectors: Integrate.io has over 140 pre-built connectors to help you get data from lots of places without needing to write any code.

- Two-way data flow: It can pull data from different places and organize it for you. It can also take data from your target system and send it back to the source.

The Hard Way: Building Your Own Pipeline (with Tutorial)

Now let’s talk about doing it the hard way, which is to code your own data integration solution from scratch. For this, you have to be an expert in different technologies, pay close attention to every detail, and be ready to fix any problems that come up. You’ll be in control of everything, but it’s a lot of work and can get pretty complicated.

With this in mind, here are the steps you will need to follow:

Step 1: Identify Your Data Source

The first step in a data integration project is to identify your data sources. This could be databases, web APIs, legacy systems, or any other source of data. The choice of data sources will depend on the specific requirements of your project.

Step 2: Set Up API Access

If your data source is a web API, you’ll need to set up API access. This typically involves registering for an API key, which will be used to authenticate your requests to the API. Be sure to read the API’s documentation to understand how to make requests and what data you can access.
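As a rough sketch, an authenticated request could be prepared like this with Python’s standard library. The base URL, the `/v1/events` path, the `EXAMPLE_API_KEY` variable name, and the Bearer-token scheme are all assumptions; your API’s documentation will tell you which header or query parameter it actually expects.

```python
import os
import urllib.parse
import urllib.request


def build_request(base_url, path, params, api_key):
    """Build an authenticated GET request without sending it."""
    query = urllib.parse.urlencode(params)
    url = f"{base_url.rstrip('/')}/{path.lstrip('/')}?{query}"
    return urllib.request.Request(
        url,
        # Assumed auth scheme: many APIs use a Bearer token, but not all.
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
        method="GET",
    )


# Read the key from the environment rather than hard-coding it in the script.
api_key = os.environ.get("EXAMPLE_API_KEY", "demo-key")
request = build_request("https://api.example.com/", "/v1/events", {"limit": 100}, api_key)
```

Keeping the key in an environment variable means it stays out of source control and can differ between environments.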

Step 3: Data Retrieval

At this stage, you’ll write a script or use a tool to fetch the data from your source. This could involve making API requests, reading from a database, or loading files from a file system.
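One possible shape for a retrieval script is shown below. It assumes a paginated JSON API whose responses carry an `items` list and a `next` link; both field names are hypothetical. The demo swaps the HTTP call for an in-memory dictionary so it runs without network access.

```python
import json
import urllib.request


def fetch_page(url):
    """Fetch one page of JSON over HTTP (not called in the demo below)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


def iter_records(fetch, first_url):
    """Follow 'next' links until the source reports no further pages."""
    url = first_url
    while url:
        page = fetch(url)
        yield from page["items"]
        url = page.get("next")


# Stand-in for a real API so the sketch runs offline.
fake_pages = {
    "page1": {"items": [{"id": 1}, {"id": 2}], "next": "page2"},
    "page2": {"items": [{"id": 3}], "next": None},
}
records = list(iter_records(fake_pages.__getitem__, "page1"))
```

Against a real endpoint, you would pass `fetch_page` as the `fetch` argument. Passing the fetch function in also makes the pagination logic easy to test.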

Step 4: Data Transformation

Once you have the raw data, you’ll likely need to transform it into a structured format that’s easier to work with. This could involve parsing the data, cleaning it, and converting it into a format like JSON or CSV.

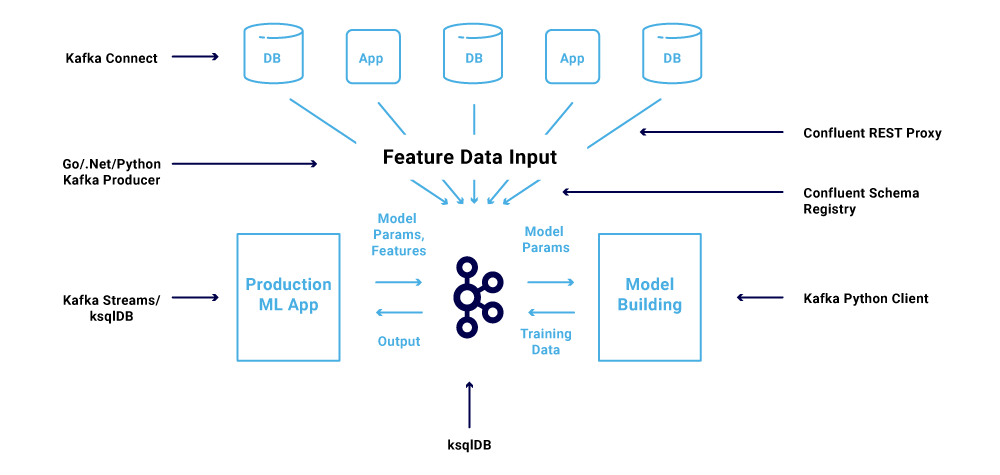
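A transformation step might look something like the sketch below. The pipe-delimited input format and the field names are invented for the example. The idea is simply to parse each raw line, drop malformed ones, normalize values, and emit JSON.

```python
import json
from datetime import datetime, timezone


def transform(raw):
    """Parse one raw line, clean it, and return a JSON string (or None to skip)."""
    parts = [p.strip() for p in raw.split("|")]
    if len(parts) != 3 or not parts[0]:
        return None  # malformed line: wrong field count or missing user id
    user_id, event, epoch = parts
    try:
        ts = datetime.fromtimestamp(int(epoch), tz=timezone.utc)
    except (ValueError, OverflowError, OSError):
        return None  # timestamp is not a usable integer
    record = {"user_id": user_id, "event": event.lower(), "ts": ts.isoformat()}
    return json.dumps(record, sort_keys=True)


raw_lines = ["u1 | LOGIN | 0", "broken line", " | click | 5", "u2|Purchase|60"]
clean = [t for t in map(transform, raw_lines) if t is not None]
```

In a real pipeline you would probably route rejected lines to a log or a separate queue rather than silently dropping them, so that data quality problems stay visible.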

Step 5: Set Up A Streaming Platform

Next, you’ll need to set up a streaming platform. Apache Kafka is a popular choice for this. Kafka allows you to publish (write) and subscribe to (read) streams of records in real time. You’ll need to install Kafka and start a Kafka server.

Step 6: Create A Streaming Pipeline

Now you’re ready to create a streaming pipeline. This involves writing a producer that sends your structured data to a Kafka topic.
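Here is one way a producer could be sketched in Python. It assumes the third-party `kafka-python` package and a broker you have already started; the topic name `events` and the record shape are placeholders. The Kafka import is kept inside `kafka_send` so the rest of the sketch runs without a broker.

```python
import json


def serialize(record):
    """Encode a record as UTF-8 JSON bytes, the payload sent to Kafka."""
    return json.dumps(record, separators=(",", ":")).encode("utf-8")


def publish(records, send, topic):
    """Send each record to `topic` through the provided `send` callable."""
    count = 0
    for record in records:
        send(topic, serialize(record))
        count += 1
    return count


def kafka_send(bootstrap_servers):
    """Return (send, flush) callables backed by kafka-python; needs a running broker."""
    from kafka import KafkaProducer  # third-party: pip install kafka-python

    producer = KafkaProducer(bootstrap_servers=bootstrap_servers)

    def send(topic, payload):
        producer.send(topic, value=payload)

    return send, producer.flush


# Offline demo: collect messages in a list instead of contacting a broker.
sent = []
publish([{"id": 1}, {"id": 2}], lambda topic, payload: sent.append((topic, payload)), "events")
```

With a broker running locally, usage would look like `send, flush = kafka_send("localhost:9092")`, then `publish(records, send, "events")`, then `flush()`. The final `flush()` matters because the producer sends asynchronously, and messages may still be buffered when your script exits.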

Step 7: Data Storage

After the data has been sent to Kafka, it’s ready to be stored. You can store the data in a database, a data warehouse, a data lake, or any other storage system. The choice of storage system will depend on how you plan to use the data.

Step 8: Integrate Transformed Data Into The Storage System

Finally, you’ll need to write a consumer that reads the transformed data from Kafka and writes it to your storage system. This involves fetching the data from Kafka, possibly doing some final transformations, and then writing the data to the storage system.
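A consumer along these lines could be sketched as follows. SQLite stands in for your storage system, and the `events` table, the `id` field, and the consumer group name are assumptions. As with the producer, `kafka-python` is only imported inside the function that needs a live broker.

```python
import json
import sqlite3


def store_messages(messages, conn):
    """Decode each message payload and upsert it into the storage table."""
    conn.execute("CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, body TEXT)")
    stored = 0
    for payload in messages:
        record = json.loads(payload)
        # Upsert keyed on id so a redelivered message does not create a duplicate.
        conn.execute(
            "INSERT OR REPLACE INTO events (id, body) VALUES (?, ?)",
            (record["id"], json.dumps(record, sort_keys=True)),
        )
        conn.commit()  # commit per message so an endless stream is still persisted
        stored += 1
    return stored


def kafka_payloads(topic, bootstrap_servers, group_id):
    """Yield raw message values from Kafka via kafka-python (requires a broker)."""
    from kafka import KafkaConsumer  # third-party: pip install kafka-python

    consumer = KafkaConsumer(
        topic,
        bootstrap_servers=bootstrap_servers,
        group_id=group_id,
        auto_offset_reset="earliest",
    )
    for message in consumer:
        yield message.value


# Offline demo: a list of payloads stands in for the Kafka topic.
conn = sqlite3.connect(":memory:")
store_messages([b'{"id": 1, "event": "login"}', b'{"id": 1, "event": "login"}'], conn)
row_count = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
```

Against a real broker, you would call `store_messages(kafka_payloads("events", "localhost:9092", "storage-writer"), conn)`. Writing with an upsert is a deliberate choice here: messages can be delivered more than once after a restart, and keying on `id` keeps the stored data free of duplicates.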

Challenges and Considerations in Streaming Data Integration

- Data Quality - Ensuring the accuracy and consistency of streaming data is crucial.

- Latency - Minimizing the delay between data capture and analysis is important for real-time decision-making.

- Complexity & Expertise - Building and maintaining complex data integration pipelines can require specialized skills and expertise.
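Latency in particular is easy to measure if each event carries the time it was produced. The sketch below assumes a hypothetical `produced_at` field holding a Unix timestamp in seconds, and flags events that exceed a latency budget you choose.

```python
import time


def latency_seconds(event, now=None):
    """Seconds between when an event was produced and when it is processed."""
    now = time.time() if now is None else now
    return now - event["produced_at"]


def slow_events(events, budget_seconds, now=None):
    """Return events whose capture-to-processing delay exceeds the budget."""
    return [e for e in events if latency_seconds(e, now) > budget_seconds]


events = [{"id": 1, "produced_at": 100.0}, {"id": 2, "produced_at": 104.5}]
late = slow_events(events, budget_seconds=2.0, now=105.0)
```

Note that this comparison is only meaningful when the producing and consuming machines have reasonably synchronized clocks.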

Conclusion: Take Your Data Integration to the Next Level

Streaming data integration is tricky, but with the right tools it is achievable even if you’re starting from zero. If you’re good with code and want total control, you can build your own data pipeline. But remember, this method requires deep knowledge of data management and of many different technologies. It also takes time to troubleshoot and fine-tune your system.

If you like things more streamlined and easier to handle, ready-made tools like Estuary Flow are the best choice. Flow is easy to use and it helps you bring different kinds of data together in real time. It’s scalable if your business is growing and you want to stay quick and flexible. So sign up for Estuary Flow here and start developing amazing data integration solutions.
