SQL Server is a popular relational database management system (RDBMS) used to store and analyze structured data. However, as data volumes increase and business requirements change, SQL Server's limitations with scaling, support for changing data models, etc., become more apparent. These constraints can hinder your business's ability to quickly analyze large amounts of data and adjust to evolving requirements.

Migrating to a NoSQL database like MongoDB offers a potential solution. A key benefit of MongoDB is its enhanced scalability, allowing it to easily analyze large datasets, allowing businesses to make informed decisions and stay competitive.

Let’s explore the different methods of migrating data from SQL Server to MongoDB after a quick look at the features and functionalities of each platform.

If you're looking for the reverse migration, check out our comprehensive guide MongoDB to SQL Server.

SQL Server – The Source

Microsoft SQL Server is a comprehensive RDBMS developed by Microsoft designed to handle a wide range of data types and applications. It can support small, single-machine environments as well as large-scale applications with many concurrent users.

Among SQL Server's notable features is its integration with the .NET framework. This allows developers to use .NET languages like C# and VB.NET to write applications that interact with SQL Server, enhancing productivity and flexibility.

Here are some of the key features of SQL Server:

- Intelligent Query Processing: SQL Server includes intelligent query processing capabilities that adapt and optimize performance based on the characteristics of the data and queries. It includes features like batch mode memory grant feedback, interleaved execution, and batch mode adaptive joins, which dynamically adjust memory allocation and execution plans. These features help enhance query efficiency and reduce resource usage.

- Polybase: Polybase is a feature in SQL Server that allows querying and combining data from various sources, including Hadoop, Azure Blob Storage, and other SQL Server instances, using standard T-SQL. This enables you to integrate and analyze data from different systems without the need for complex ETL processes.

- Temporal Tables: Temporal tables in SQL Server enable easy tracking and analysis of historical data changes. The tables automatically maintain a history of data modifications, allowing you to query and retrieve data as it existed at any point in time. This is useful for auditing, compliance, and trend analysis.

MongoDB – The Destination

MongoDB is a prominent open-source NoSQL database that offers flexibility, scalability, and high performance, making it ideal for modern applications. It is designed to store and handle vast amounts of unstructured or semi-structured data.

MongoDB employs a document-oriented model to store data in flexible, JSON-like documents called BSON (Binary JSON). This facilitates the storage of complex hierarchical structures within a single document, greatly reducing the need for joins and enabling faster data retrieval.

Here are some of the key features of MongoDB:

- Scalability: MongoDB can scale horizontally through a technique called sharding that distributes data across multiple servers, allowing the database to handle increased data volume and traffic loads seamlessly. This scalability makes MongoDB well-suited for applications that require high throughput and low latency.

- Replication: MongoDB ensures high availability through its built-in replication feature. A replica set in MongoDB consists of two or more copies of the data, allowing automatic failover and data redundancy. This ensures that in the event of a hardware failure or maintenance, the database service remains available, and data integrity is maintained.

- Indexing: MongoDB supports a wide variety of indexing options that enable fast data retrieval. These include primary and secondary indices, as well as special index types like text, geospatial, and hashed indexes. Proper indexing can considerably improve the performance of query operations by minimizing the number of documents the database scans during a query.

How to Transfer Data From SQL Server to MongoDB?

If you’re looking to load data from SQL Server to MongoDB, here are the methods you can use:

- The Automated Method: Using Estuary Flow for SQL Server to MongoDB Migration

- The Manual Approach: Migrating SQL Server to MongoDB using SSIS

The Automated Method: Using Estuary Flow for SQL Server to MongoDB Migration

Estuary Flow, a real-time Extract, Transform, Load (ETL) tool, assists in creating data pipelines that combine data from multiple sources and destinations of your choice. It is an impressive solution for migrating from SQL Server to MongoDB.

Prerequisites

Before you get started with Flow for your data pipeline creation, the following prerequisites have to be in place:

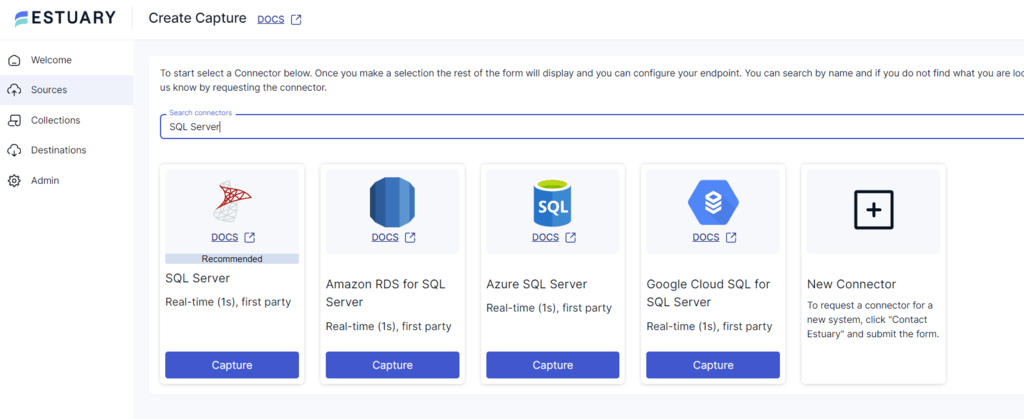

Step 1: Configure SQL Server as the Source

- Log in or register to Estuary Flow.

- After signing in, select the Sources tab from the left pane of the dashboard.

- On the Sources page, click + NEW CAPTURE.

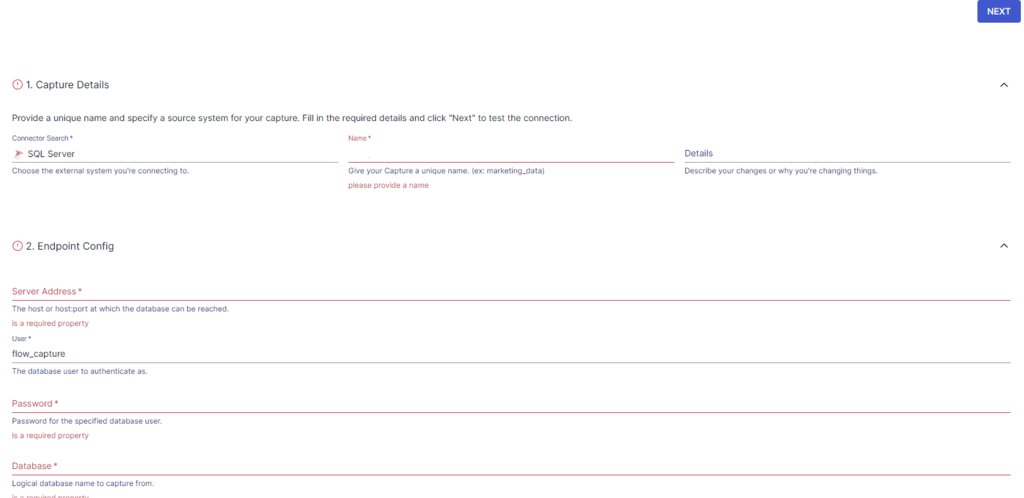

- Enter SQL Server in the Search connectors box. When you see the SQL Server connector in the search results, click its Capture button.

- On the Create Capture page, fill in the required details, including Name, Server Address, User, and more.

- To complete the process of configuring SQL Server as your source, select NEXT > SAVE AND PUBLISH. The CDC connector will continuously capture SQL Server updates into one or more Flow collections.

Step 2: Configure MongoDB as the Destination

- After a successful capture, a pop-up window containing the capture details appears. Click on the MATERIALIZE COLLECTIONS button in this window to continue configuring the destination end of the pipeline.

However, you can also navigate to the dashboard and click Destinations > + NEW MATERIALIZATION.

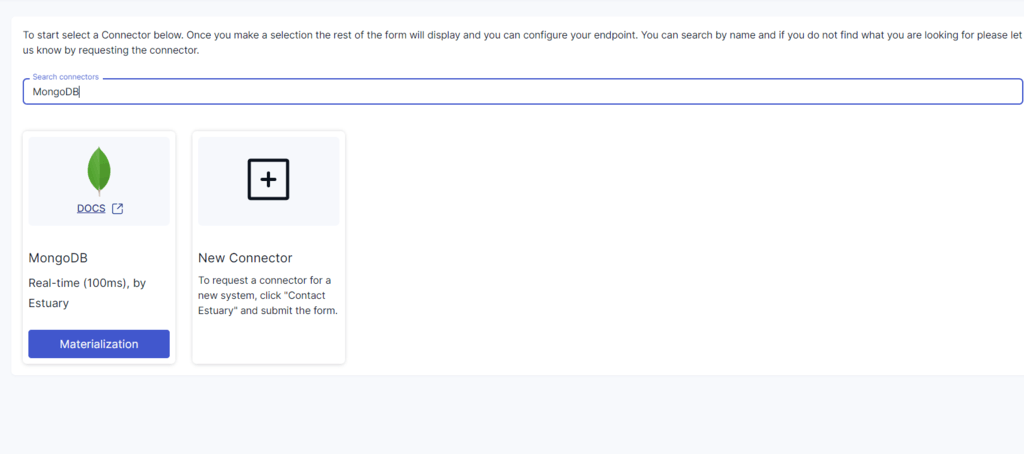

- Next, type MongoDB into the Search connectors box and click its Materialization button.

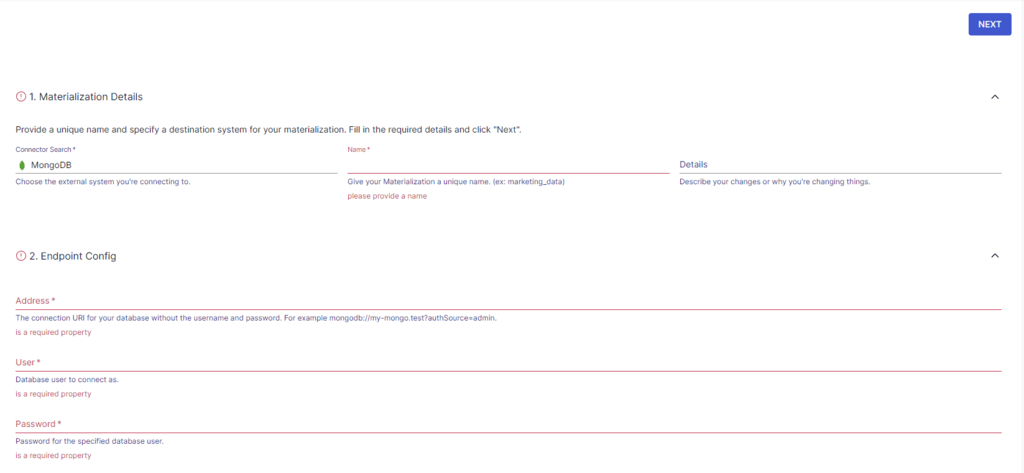

- On the Create Materialization page, enter the mandatory details, such as Name, Address, User, Password, and more. Then, click NEXT > SAVE AND PUBLISH.

- The connector will materialize Estuary Flow collections containing your SQL Server data to your MongoDB collections.

Benefits of Estuary Flow

- Scalability: Estuary Flow is designed to efficiently and easily handle large volumes of data, scaling up to 7 GB/s. This makes it suitable for high-demand scenarios where vast amounts of streaming data must be processed quickly and reliably.

- Extensive Connectivity: With over 200 pre-built connectors, Flow facilitates easy integration with various data sources. This connectivity is crucial for organizations that rely on diverse data environments.

- Change Data Capture: Flow uses intricate log-based Change Data Capture (CDC) algorithms to collect detailed data changes. This aids in reducing delays and preserving data integrity.

- Processing Data in Real Time: Estuary Flow allows for seamless and continuous real-time data flow from SQL Server to MongoDB. This guarantees that downstream applications are always utilizing the most up-to-date data, which, in turn, facilitates real-time decision-making.

The Manual Approach: Migrating SQL Server to MongoDB Using SSIS

This section will explain how to migrate your data from an SQL Server database to MongoDB using SQL Server Integration Services (SSIS). It is a Microsoft SQL Server database component that supports data integration and transformation tasks.

We will use a sample dataset, the Employees table, from the AdventureWorks2017 database to demonstrate the migration process step by step.

Prerequisites

Before starting with the process, ensure the following prerequisites:

- Visual Studio with SQL Server Integration Services (SSIS) installed

- SQL Server with the AdventureWorks2017 database

- MongoDB is installed and running on your local machine

Step 1: Set up the SSIS Project

- Create a new Integration Services project in Visual Studio and add an OLE DB connection manager to establish a connection with SQL Server.

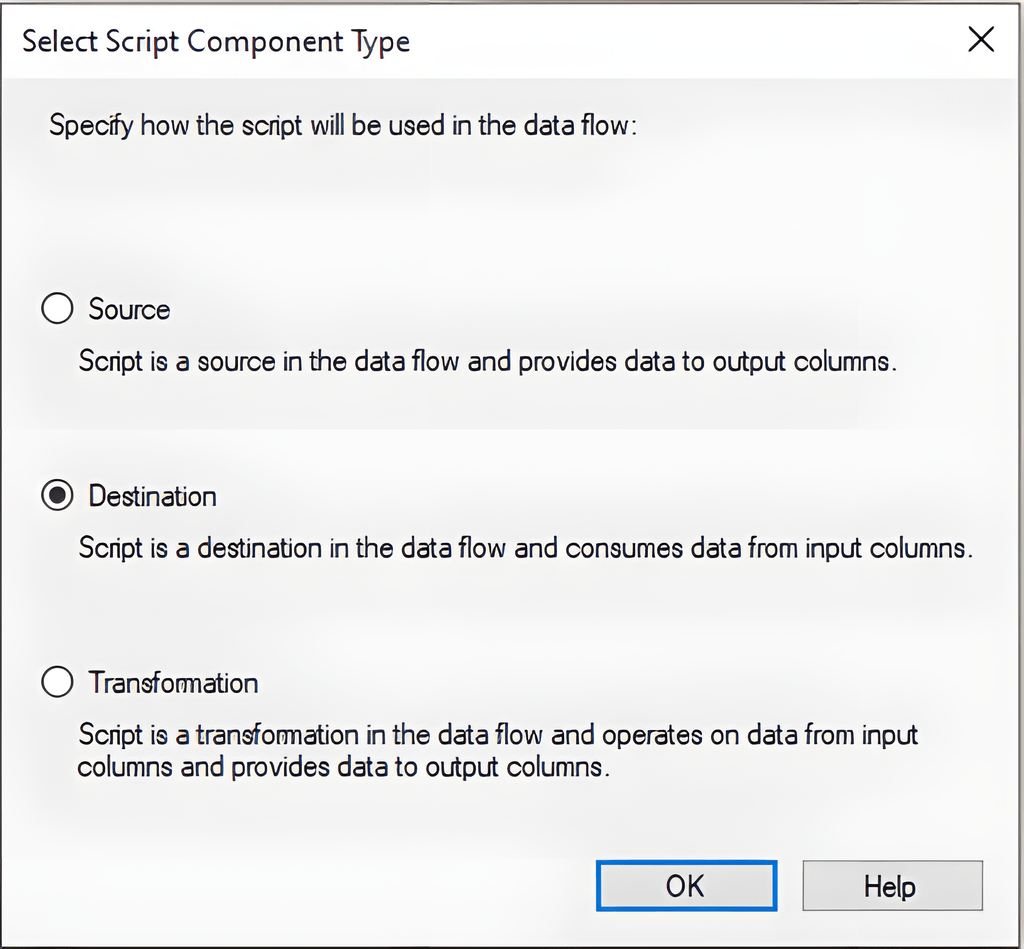

- Add an OLE DB source component to read the Employee tables from the AdventureWorks2017 database. Then, add a Script Component and configure it as a destination.

With the project and components set up, we can now proceed to the next step.

Step 2: Install MongoDB Driver

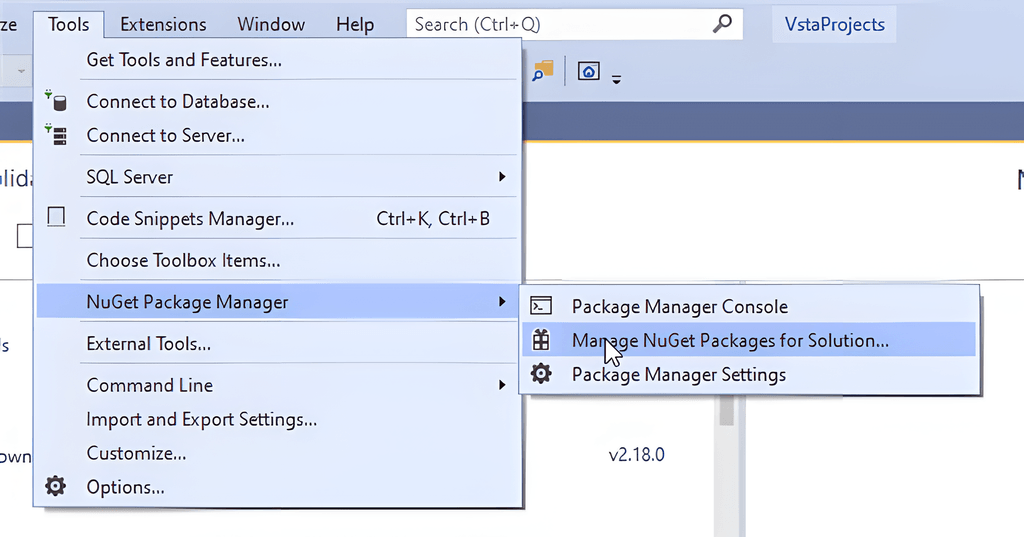

- In the Script Component editor, navigate Tools > NuGet Package Manager > Manage NuGet Packages for Solution...

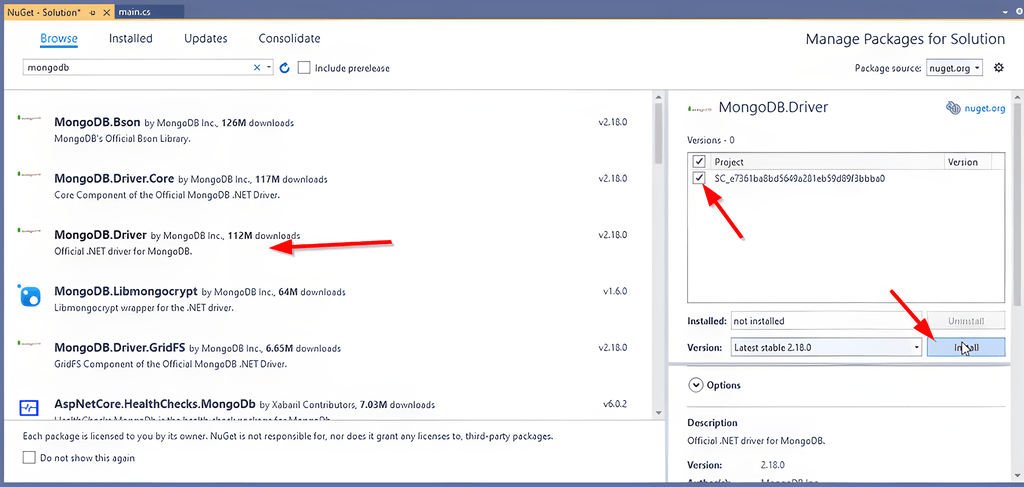

- In the NuGet Package Manager window, find the MongoDB.Driver package to install the necessary MongoDB libraries. Choose the current version of the script component project and click Install. This package includes all the essential libraries, such as MongoDB.Driver, MongoDB.Bson, and more.

Step 3: Write the Custom Script

- After the NuGet package is installed successfully, open the main.cs class in the C# editor.

- Use the following lines of code to add the MongoDB.Bson and MongoDB.Driver namespaces in the Namespaces region.

| using MongoDB.Bson; using MongoDB.Driver; |

- Within the ScriptMain class, define your MongoDB client, database, and collection objects as follows:

| MongoClient client; IMongoDatabase database; IMongoCollection<BsonDocument> collection; |

- In the PreExecute() method, establish the connection and specify the MongoDB database and collection. Replace the connection string with your MongoDB installation details.

| client = new MongoClient("mongodb://localhost:27017/?readPreference=primary&ssl=false"); //Make sure to change the connection string based on your MongoDB installation. database = client.GetDatabase("AdventureWorks"); collection = database.GetCollection<BsonDocument>("Employees"); |

- Enter the data insertion logic in the <Input Buffer name>_ProcessInputRow function. Serialize each data row into a BsonDocument object before inserting it into the MongoDB collection.

| var document = new BsonDocument { { "BusinessEntityID", Row.BusinessEntityID }, { "NationalIDNumber", Row.NationalIDNumber }, { "LoginID", Row.LoginID }, { "JobTitle", Row.JobTitle }, { "BirthDate", Row.BirthDate }, { "MaritalStatus", Row.MaritalStatus }, { "Gender", Row.Gender }, { "HireDate", Row.HireDate }, { "SalariedFlag", Row.SalariedFlag }, { "VacationHours", Row.VacationHours }, { "SickLeaveHours", Row.SickLeaveHours }, { "CurrentFlag", Row.CurrentFlag }, { "rowguid", Row.rowguid.ToString() }, { "ModifiedDate", Row.ModifiedDate } }; |

- Finally, use the collection.InsertOne() method to insert the data into the MongoDB collection.

| collection.InsertOne(document); |

Optional Step: Bulk Inserting Whole Document

If you need to insert all the data at once and not row-by-row, follow these additional steps.

- In the ScriptMain class, create a list of BsonDocument and instantiate the PreExecute() function.

| List<BsonDocument> documents; documents = new List<BsonDocument>(); |

- In the ProcessInputRow() function, instead of using collection.InsertOne(), add the serialized data row to the documents list.

| documents.Add(document); |

- Lastly, use the collection.InsertMany() method to insert all documents into the MongoDB collection.

| collection.InsertMany(documents); |

By following these steps, you will be able to migrate the data from the SQL Server database to MongoDB using a Script Component in SSIS.

Limitations of using SSIS for SQL Server to MongoDB Integration

- Lack of Real-time Integration: Manual methods such as SSIS and custom scripts lack real-time data loading capabilities, making them unsuitable for streaming data between SQL Server and MongoDB.

- Complexity: Setting up and configuring an effective SSIS package for SQL Server to MongoDB migration requires a good understanding of both database systems. Compared to ETL tools that mostly provide ready-to-use connectors, this demands increased technical skills.

The Takeaway

Migrating your data from SQL Server to MongoDB offers significant advantages in terms of scalability, flexibility, and performance, especially when dealing with evolving datasets. This allows you to handle large volumes of data without compromising performance or query speed.

The two methods discussed in this article offer different approaches to this data migration task. Estuary Flow simplifies this migration with its real-time capabilities, powerful transformations, and robust change data capture features.

On the other hand, SSIS provides a development-centric approach for SQL Server to MongoDB migration, and is suitable for one-time batch transfers. The method you choose depends on your specific project requirements and the need for real-time data synchronization.

Check out the amazing features of Estuary Flow to streamline your data integration workflows. Create an account or log in right away.

FAQs

- Why migrate from SQL Server to MongoDB?

MongoDB's scalability, flexible schema, and performance advantages make it ideal for handling large, dynamic datasets. Businesses needing to adapt quickly to changing requirements and handle massive volumes of data often benefit from this migration.

- What are the key differences between SQL Server and MongoDB?

SQL Server uses a relational model with tables, rows, and strict schemas. MongoDB uses a document-oriented model with JSON-like (BSON) documents, offering greater flexibility for evolving data structures.

- Do I need to rewrite my application code after migration?

Yes. Since MongoDB uses a different query language and data access patterns, your application code will need to be adjusted to interact with the new database

About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WS02, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.

Popular Articles