Moving data from Salesforce, a popular customer engagement platform, to Databricks, a data management and analytics platform, lets you analyze your CRM data at scale alongside the rest of your business data.

This data integration allows you to leverage Databricks’ robust, open analytics platform. Additionally, the extensive data source compatibility, enhanced productivity, and flexibility for various job sizes make Databricks an impressive destination.

How to Migrate Salesforce to Databricks: To migrate Salesforce data to Databricks, you can use either an automated real-time ETL tool like Estuary Flow or follow a manual process involving CSV export/import. The automated approach offers real-time updates, while the manual approach involves more control but requires extra effort. In this tutorial, we’ll walk you through both options in detail.

Salesforce: An Overview

Salesforce is a cloud-based customer relationship management (CRM) platform that helps businesses manage their sales, marketing, customer service, and other operations efficiently. It offers a set of tools and services designed to streamline and automate different aspects of customer management.

Databricks: An Overview

Databricks is a cloud-based platform designed to build, deploy, share, and maintain data analytics solutions at scale. Its workspace includes tools for data processing, report generation, visualizations, ML modeling, and performance tracking. With this collaborative, scalable, and secure workspace, Databricks enables organizations to harness the full potential of their data, accelerate innovation, and make data-driven decisions with confidence.

Some of the key features of Databricks are:

- Productivity and Collaboration: Databricks provides a shared workspace that fosters a collaborative environment for data scientists, engineers, and business analysts. Features like Databricks notebooks support multiple programming languages (Python, R, Scala), interactive data exploration, and visualization.

- Scalable Analytics: Databricks is a distributed computing platform that can process huge volumes of data in parallel. This capability ensures you can handle complex analytics workloads without performance degradation for evolving business needs.

- Flexibility: Built on Apache Spark and compatible with major cloud providers, including AWS, Azure, and GCP, Databricks offers impressive flexibility. This makes it suitable for various tasks, from small-scale development and testing to large-scale operations like Big Data processing.

Why Migrate Salesforce Data to Databricks?

Migrating data from Salesforce to Databricks can significantly enhance your data analytics capabilities. Data integration tools help ensure smooth data migration and real-time synchronization, allowing businesses to harness the full potential of their data.

Some key points to note are:

- Databricks provides advanced analytics and AI capabilities, allowing you to build real-time analytics models and derive actionable insights from your data, resulting in faster innovation.

- Unlike Salesforce, which primarily focuses on customer and CRM data, Databricks allows you to integrate data from various sources. This provides a more comprehensive view of your data for enhanced analytics and decision-making.

- Databricks offers a cost-effective pricing model, allowing you to optimize your data analytics expenditure.

Businesses migrating Salesforce data often choose different destinations depending on their use cases. While Databricks is great for big data analytics, moving Salesforce data to BigQuery or Snowflake is an equally robust cloud option for companies looking to optimize real-time data insights and scalability.

Let's explore two ways to seamlessly migrate your Salesforce data to Databricks, starting with the most efficient and automated approach.

Method 1: Using Estuary Flow to Load Data from Salesforce to Databricks

Estuary Flow is a low-code, real-time ETL solution that helps streamline data integration. Unlike a hand-built data pipeline, which involves intensive coding and maintenance, Estuary Flow offers a quick ETL setup process. It is a suitable choice when you need to move data from any number of sources into a destination.

You can learn more about capturing historical and real-time Salesforce data through Estuary in this detailed guide.

Here are some of the key features of Estuary Flow:

- No-code Configuration: Estuary Flow provides a choice of 200+ connectors to establish connections between diverse sources and destinations. Configuring these connectors doesn’t require writing a single line of code.

- Change Data Capture: A vital feature of Estuary Flow, CDC allows you to track and capture changes in the source data as they occur. Any update in the source system is immediately replicated to the target system. This real-time capability is essential if you require up-to-date data for decision-making.

- Scheduling and Automation: Estuary Flow provides workflow scheduling options, so you can run workflows at the time intervals defined in the workflow schedule. Its automation capabilities keep recurring ETL jobs and data updates running without manual intervention.
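
To make the change data capture idea from the list above more concrete, here is a minimal Python sketch of how a stream of change events might be applied, in order, to a target table keyed by record ID. The event shape (`op`, `id`, `fields`) and the function name `apply_changes` are hypothetical illustrations chosen for this example; they are not Estuary Flow's actual event format or API.

```python
from typing import Any

# Hypothetical change-event shape (an assumption for illustration):
# {"op": "upsert" | "delete", "id": "<record id>", "fields": {...}}
ChangeEvent = dict[str, Any]
Table = dict[str, dict[str, Any]]


def apply_changes(target: Table, events: list[ChangeEvent]) -> Table:
    """Apply change events in order to a target table keyed by record ID."""
    for event in events:
        record_id = event["id"]
        if event["op"] == "delete":
            # Remove the record; deleting a missing record is a no-op.
            target.pop(record_id, None)
        elif event["op"] == "upsert":
            # Merge the changed fields into the existing record, or create it.
            target.setdefault(record_id, {}).update(event["fields"])
        else:
            raise ValueError(f"unknown op: {event['op']!r}")
    return target


if __name__ == "__main__":
    table = {"001A": {"Name": "Acme", "Stage": "Prospecting"}}
    events = [
        {"op": "upsert", "id": "001A", "fields": {"Stage": "Closed Won"}},
        {"op": "upsert", "id": "001B", "fields": {"Name": "Globex"}},
    ]
    print(apply_changes(table, events))
```

The point of the sketch is that only the changed records travel from source to target, which is what lets a CDC pipeline stay current without re-exporting the full dataset.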

Here is a step-by-step guide to using Estuary Flow to migrate from Salesforce to Databricks.

Prerequisites

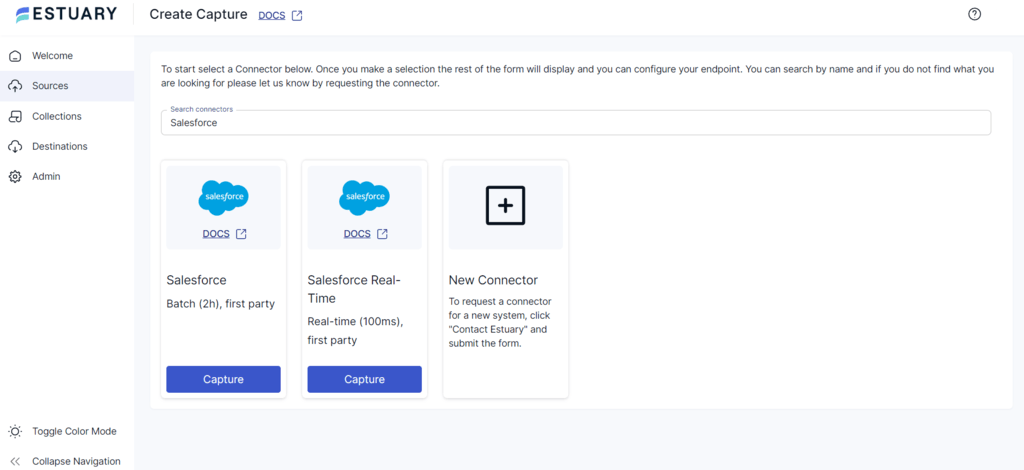

Step 1: Configure Salesforce as the Source

- Sign in to your Estuary Flow account to access the dashboard.

- To initiate the process of configuring Salesforce as the source, click the Sources option on the left navigation pane.

- Click the + NEW CAPTURE button.

- Search for the Salesforce connector using the Search connectors field on the Create Capture page.

- Click the Capture button of the Salesforce Real-Time connector in the search results.

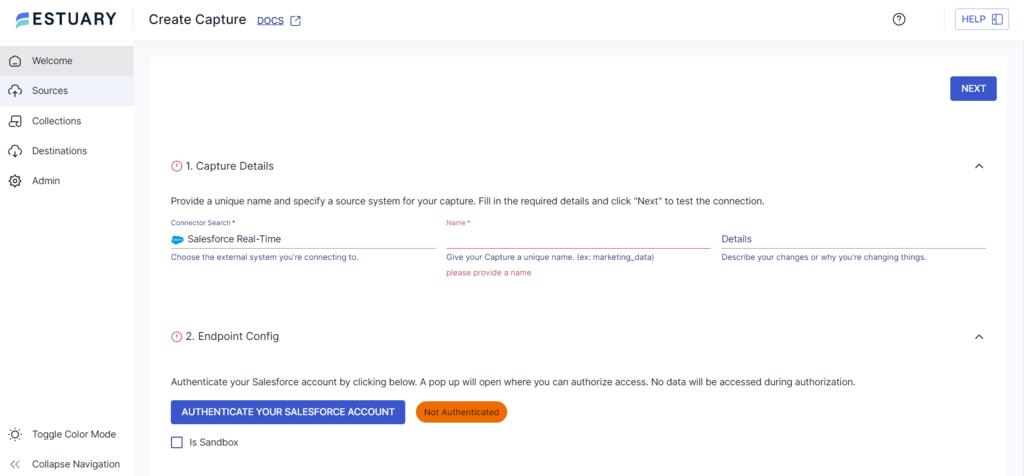

- On the connector configuration page, provide essential details, including a Name for your capture. In the Endpoint Config section, click AUTHENTICATE YOUR SALESFORCE ACCOUNT to authorize access to your Salesforce account.

- Finally, click the NEXT button in the top-right corner and then SAVE AND PUBLISH to complete the source configuration.

The connector uses the Salesforce PushTopic API to capture data from Salesforce objects into Flow collections in real-time.

Step 2: Configure Databricks as the Destination

- After a successful capture, a pop-up window with the details of the capture will appear. To set up Databricks as the destination for the integration pipeline, click on MATERIALIZE COLLECTIONS in the pop-up window.

Alternatively, to configure Databricks as the destination, click the Destinations option on the left navigation pane of the Estuary Flow dashboard.

- Click the + NEW MATERIALIZATION button on the Destinations page.

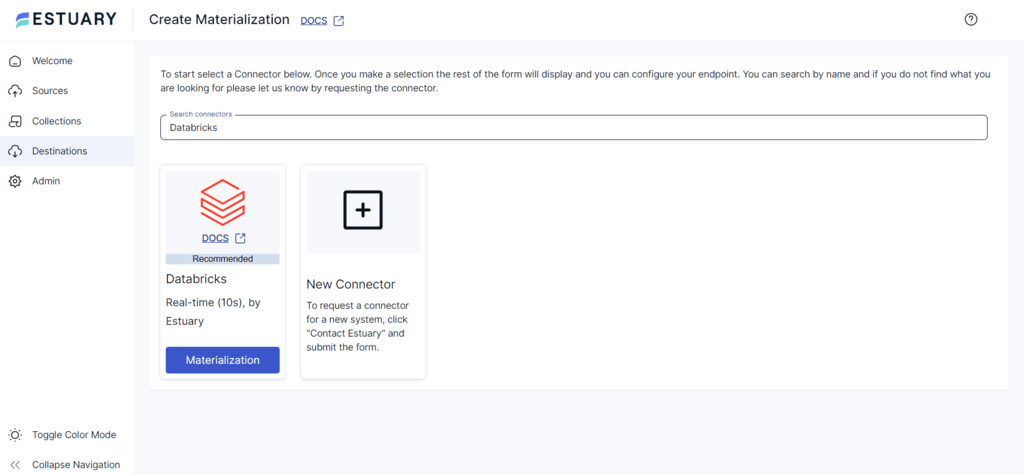

- On the Create Materialization page, use the Search connectors field to search for the Databricks connector. In the Search results, click the connector’s Materialization button.

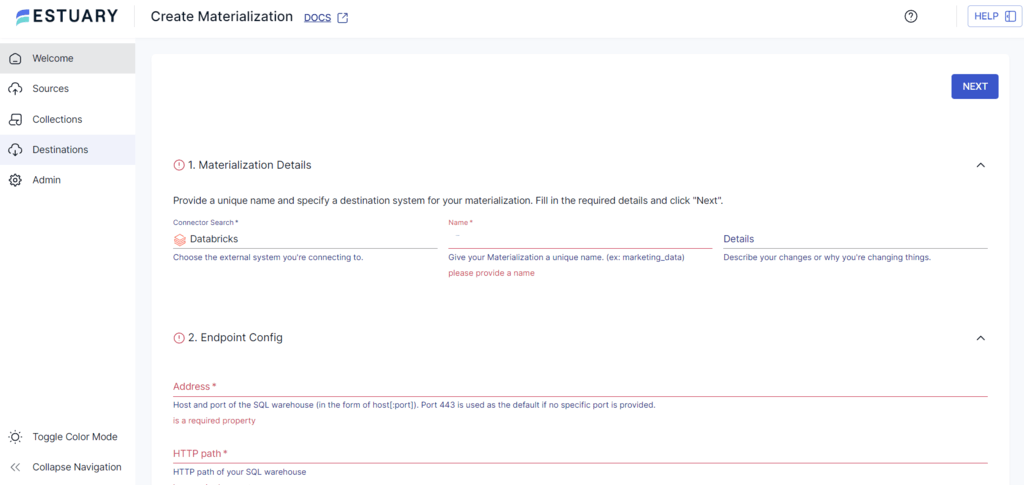

- On the Create Materialization page, fill in the necessary fields, such as Name, Address, HTTP path, and Catalog Name. For authentication, specify the Personal Access Token details.

- Although the collections added to your capture will be automatically included in your materialization, you can manually use the Source Collections section to add a capture to your materialization.

Click the SOURCE FROM CAPTURE button under the Source collections section to link a capture to your materialization.

- Then, click NEXT > SAVE AND PUBLISH to materialize your Salesforce data in Flow collections into tables in your Databricks warehouse.

Ready to streamline your real-time data integration and unlock the full potential of your Salesforce data? Try Estuary Flow for free today and experience seamless Salesforce data migration to Databricks!

Method 2: Using CSV Export/Import to Move Data from Salesforce to Databricks

This method involves extracting data from Salesforce in CSV format and loading it into Databricks. Let’s look at the details of the steps involved in this method:

Step 1: Extract Salesforce Data as CSV

You can export data from Salesforce manually or using an automated schedule. By default, the data is exported in CSV format. There are two different methods to export Salesforce data:

- Data Export Service: This in-browser service allows you to export Salesforce data regularly (weekly or monthly) to a secure location outside of Salesforce. It also enables you to schedule automatic data backups, ensuring you have access to historical records and can recover data in the event of data loss, corruption, or accidental deletion.

- Data Loader: Once installed, Data Loader automatically connects to your logged-in Salesforce account and allows you to import, export, update, and delete large volumes of Salesforce data. It provides a user-friendly interface and supports bulk data operations, making it an essential tool for administrators and users who need to manage Salesforce data effectively.

Follow these steps to export Salesforce data using the Data Export Service:

- Log in to Salesforce, open Setup, and type Data Export in the Quick Find box. Then select Data Export and choose either Export Now or Schedule Export.

- The Export Now option only exports the data if sufficient time has elapsed since the last export.

- Schedule Export allows you to set up the export process to run at weekly or monthly intervals.

- Select the encoding based on the export file and the appropriate options to include images, docs, and attachments in the export.

- Choose the Include all data option to select all data types for export.

- Click Save to save a scheduled export, or Start Export to begin exporting immediately. Once the export is ready, you will receive an email with a link to download a zip archive of CSV files.

- The zip files get deleted 48 hours after the email is sent, so click the Data Export button to download the zip file promptly.
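
Once the archive is downloaded, the CSV files need to be unpacked before they can be uploaded. The following Python sketch extracts every CSV from an export archive and counts the data rows in each file, which gives you a baseline to compare against after loading. The function name `summarize_export`, the paths in the usage note, and the default `utf-8-sig` encoding are assumptions for illustration; use whichever encoding you selected for the export.

```python
import csv
import io
import zipfile
from pathlib import Path


def summarize_export(
    zip_path: str, extract_to: str, encoding: str = "utf-8-sig"
) -> dict[str, int]:
    """Extract each CSV in the archive and return {file name: data row count}."""
    out_dir = Path(extract_to)
    out_dir.mkdir(parents=True, exist_ok=True)
    row_counts: dict[str, int] = {}
    with zipfile.ZipFile(zip_path) as archive:
        for name in archive.namelist():
            if not name.lower().endswith(".csv"):
                continue  # skip attachments and other non-CSV members
            archive.extract(name, out_dir)
            with archive.open(name) as raw:
                text = io.TextIOWrapper(raw, encoding=encoding, newline="")
                reader = csv.reader(text)
                next(reader, None)  # skip the header row
                row_counts[name] = sum(1 for _ in reader)
    return row_counts
```

For example, `summarize_export("salesforce_export.zip", "export_csvs")` (both paths hypothetical) would unpack the CSVs into `export_csvs` and return a row count per file.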

Step 2: Load CSV to Databricks

To load a CSV file into Databricks, follow these steps:

- Log in to your Databricks account to access the dashboard and locate the sidebar menu.

- Select the Data option from the sidebar menu and click the Create Table button.

- Browse and upload the CSV files from your local directory.

- Once uploaded, click the Create Table with UI button to access your data and create a data table.

- You can now read and make changes to the CSV data within Databricks.
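
Before uploading, it can help to check the header row of each CSV, because column names that contain spaces or punctuation may be rejected or altered when the table is created, depending on your table settings. The sketch below is one possible way to normalize headers in Python; the function name `sanitize_headers` and the rule of replacing everything except letters, digits, and underscores are assumptions for this example, not a Databricks requirement.

```python
import re


def sanitize_headers(headers: list[str]) -> list[str]:
    """Return table-friendly column names, numbering any duplicates."""
    seen: dict[str, int] = {}
    cleaned: list[str] = []
    for header in headers:
        # Collapse each run of characters other than letters, digits,
        # and underscores into a single underscore.
        name = re.sub(r"[^0-9A-Za-z_]+", "_", header.strip()).strip("_")
        name = name or "column"  # fall back for empty headers
        count = seen.get(name, 0)
        seen[name] = count + 1
        # The first occurrence keeps its name; later ones get a numeric suffix.
        cleaned.append(name if count == 0 else f"{name}_{count + 1}")
    return cleaned


if __name__ == "__main__":
    print(sanitize_headers(["Id", "Account Name", "Amount (USD)"]))
```

Standard Salesforce API field names such as `Id` or `My_Field__c` pass through unchanged, so this mainly matters for exports whose headers use display labels or relationship paths.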

Challenges of Using CSV Export/Import for a Salesforce Databricks Integration

- Effort-intensive: Manual data export/import requires significant human effort to extract data from Salesforce and upload it to Databricks. Apart from slowing down the migration, it also increases the likelihood of errors and data loss.

- Lack of Real-time Capabilities: The CSV export/import method lacks real-time capabilities because each step requires manual effort. Any updates or modifications made to the source data after migration must be synchronized manually.

- Limited Transformations: The manual CSV export/import method can pose challenges when handling complex data transformations, such as aggregation, normalization, ML feature engineering, and complex SQL queries. Advanced data migration tools are better suited for handling these kinds of operations.
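
To illustrate the synchronization problem described above, here is a minimal Python sketch that compares two CSV exports of the same object and reports which records were added, removed, or changed between them. With the manual method, a reconciliation like this has to be repeated after every export. The function name `diff_exports` is hypothetical, and the sketch assumes each row carries a unique `Id` column.

```python
import csv
import io


def diff_exports(old_csv: str, new_csv: str, key: str = "Id") -> dict[str, list[str]]:
    """Compare two CSV exports (as text) and list added, removed, and changed keys."""

    def load(text: str) -> dict[str, dict[str, str]]:
        # Index every row by its key column for direct comparison.
        return {row[key]: row for row in csv.DictReader(io.StringIO(text))}

    old, new = load(old_csv), load(new_csv)
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


if __name__ == "__main__":
    last_week = "Id,Name,Stage\n001A,Acme,Prospecting\n001B,Globex,Negotiation\n"
    this_week = "Id,Name,Stage\n001A,Acme,Closed Won\n001C,Initech,Prospecting\n"
    print(diff_exports(last_week, this_week))
```

Because the whole dataset is re-exported and re-compared each time, the cost of this approach grows with the size of the data rather than with the number of changes.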

Conclusion

Migrating data from Salesforce to Databricks offers significant benefits, including real-time insights, effortless machine learning, data security, and scalability. To migrate from Salesforce to Databricks, you can use Estuary Flow or the manual CSV export/import method.

Although it works, the manual CSV export/import method lacks real-time data integration capabilities and is error-prone. Opting for Estuary Flow for Salesforce to Databricks ETL can reduce the need for repetitive and resource-intensive tasks. With its set of 200+ pre-built connectors, you can connect diverse data sources and destinations without intensive effort.

Are you looking to instantly transfer data between diverse platforms? Register for your free Estuary account to get started with effortless integration.

FAQs

- What are some use cases for connecting Salesforce to Databricks?

Some common use cases for connecting Salesforce to Databricks include sales performance analysis, customer segmentation, lead scoring, churn prediction, and marketing campaign optimization.

- Can I perform a real-time analysis of Salesforce data in Databricks?

Yes. You can perform real-time analysis of Salesforce data in Databricks by setting up a streaming pipeline using tools like Estuary Flow, Apache Spark, or Apache Kafka.

- How can I connect Salesforce to Databricks?

There are several ways to connect Salesforce to Databricks, such as using the Salesforce APIs directly or using third-party integration tools like Estuary Flow.

About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
