NetSuite is a leading enterprise resource planning (ERP) system provider, offering a comprehensive suite of tools to help you manage various business operations. However, despite its extensive features, NetSuite’s capabilities might not meet every organization’s needs for large-scale data processing and advanced analytics.

If you prioritize data-driven decision-making, Databricks is a strong choice. Migrating data from NetSuite to Databricks offers several benefits, including faster data processing, real-time insights, and access to sophisticated analytical techniques. These benefits can help your business innovate and gain a competitive edge.

In this article, you will explore how to effectively connect NetSuite to Databricks.

Overview of NetSuite

NetSuite ERP is a comprehensive cloud business management solution that provides visibility into real-time financial and operational performance.

As an integrated suite of applications, NetSuite is handy for managing accounting, order processing, inventory management, production, supply chain, and warehouse operations. Automating such critical operations enables your business to gain greater control over operations and run more efficiently.

Overview of Databricks

Databricks is a cloud-based platform built on the robust Apache Spark engine. It is designed to handle massive datasets and complex data processing tasks. Databricks provides a centralized workspace that facilitates collaboration among business analysts, developers, and scientists, enabling them to efficiently develop and deploy data-driven applications.

With integrated support for ML frameworks such as TensorFlow, PyTorch, and Scikit-learn, Databricks helps you track experiments and share and deploy ML models.

A few key features of Databricks include:

- Databricks is highly scalable, allowing you to manage massive data volumes effortlessly. It also adapts to various data processing workloads with configurations that support batch processing, real-time streaming, and machine learning.

- Databricks provides robust security features designed to meet the requirements of enterprise-level deployments. These include support for compliance standards such as GDPR, HIPAA, and SOC 2.

- Databricks is available on major cloud providers, including AWS, Microsoft Azure, and GCP, allowing you to leverage cloud scalability and flexibility.

Why Integrate NetSuite with Databricks?

Some of the advantages of a Databricks NetSuite integration are listed below:

- Databricks enables real-time data processing for quick analysis. This is crucial for applications that require immediate decision-making, such as real-time customer interaction management.

- Databricks is a modern, single platform for all your analytics and AI use cases, offering a comprehensive environment for data analytics. This is particularly useful for extending NetSuite's analytical functions, allowing more complex and varied data analysis workflows.

- Databricks accelerates the development of AI and ML capabilities by leveraging collaborative, self-service tools and open-source technologies like MLflow and Apache Spark. This can help provide deeper insights and predictive analytics for your NetSuite data.

How to Connect NetSuite to Databricks: 2 Methods

Let’s look into two methods that can help integrate NetSuite with Databricks:

- Method 1: Using Estuary Flow for a NetSuite Databricks Connection

- Method 2: Using CSV Export/Import to Integrate NetSuite with Databricks

Method 1: Using Estuary Flow for a NetSuite Databricks Connection

Estuary Flow is an efficient real-time ETL solution that offers improved scalability, reliability, and integration capabilities. It allows you to transfer data from NetSuite to Databricks with just a few clicks and without in-depth technical expertise.

Some of the important features of Estuary Flow are listed below:

- Wide Range of Connectors: Estuary Flow provides over 200 pre-built connectors that integrate data from various sources into the destinations of your choice. Because these connectors require no code to configure, setting up a data pipeline is significantly simpler.

- Change Data Capture (CDC): Estuary Flow supports CDC for a seamless Databricks NetSuite integration. All updates to the NetSuite database will be immediately reflected in Databricks without requiring human intervention, helping achieve real-time analytics and decision-making.

- Scalability: Estuary Flow is designed for horizontal scaling to handle varying workloads and data volumes. It can run active workloads from any database at up to 7 GB/s.

Here are the steps to migrate from NetSuite to Databricks using Estuary Flow.

Prerequisites

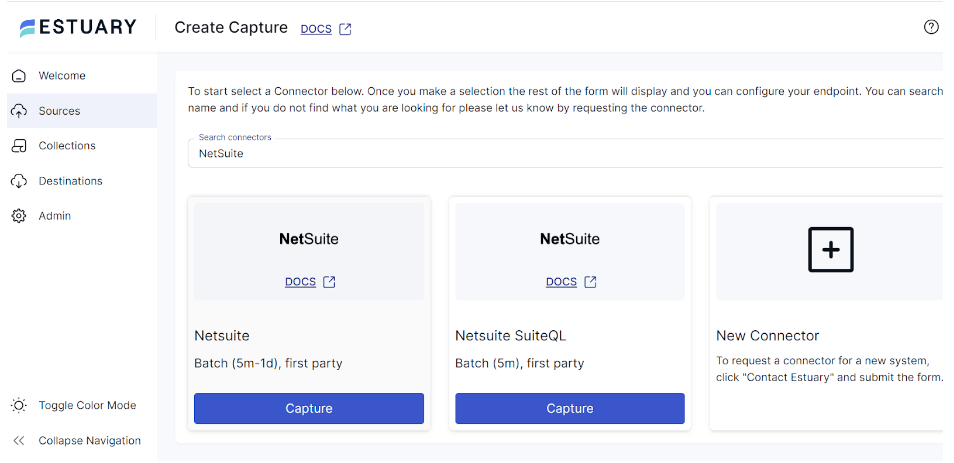

Step 1: Configure NetSuite as Your Source

- Log in to your Estuary account.

- Click Sources on the left-side pane of the dashboard.

- To start setting up the source end of the integration pipeline, click + NEW CAPTURE.

- In the Search connectors box, type NetSuite. Click the Capture button of the NetSuite connector when you see it in the search results.

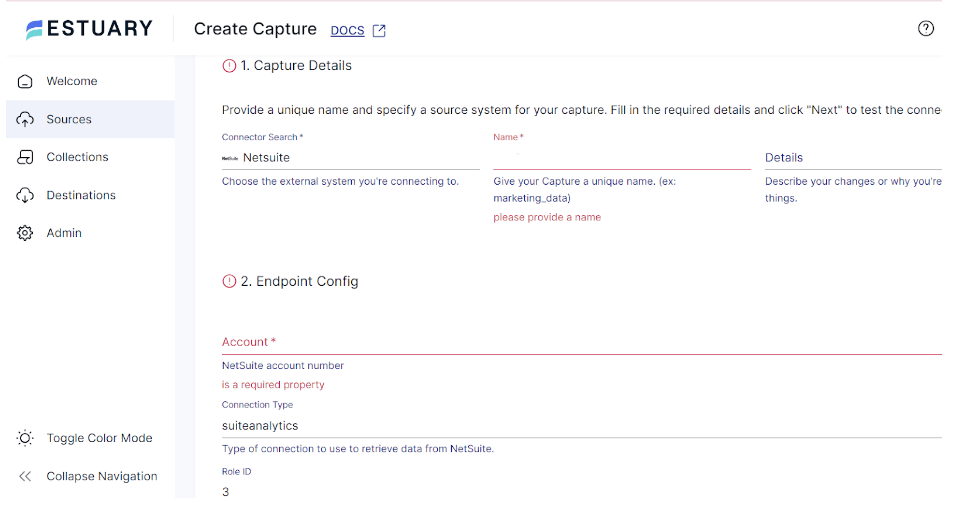

- On the NetSuite connector configuration page, provide a Name for your capture and other required information, such as the Account, Connection Type, and Role ID.

- Click NEXT > SAVE AND PUBLISH. The connector will capture data from NetSuite into Flow collections.

Step 2: Configure Databricks as Your Destination

- After configuring the source, the next step is to configure Databricks as the destination end of the pipeline. To do this, click MATERIALIZE COLLECTIONS in the pop-up window that appears after a successful capture.

Alternatively, navigate to the Estuary dashboard and click Destinations > + NEW MATERIALIZATION.

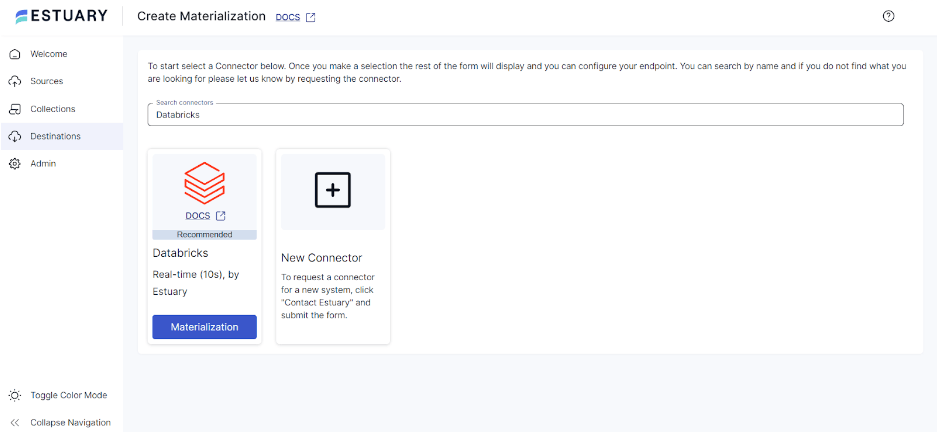

- On the Create Materialization page, type Databricks in the Search connectors box, and click the Materialization button of the connector when it appears in search results.

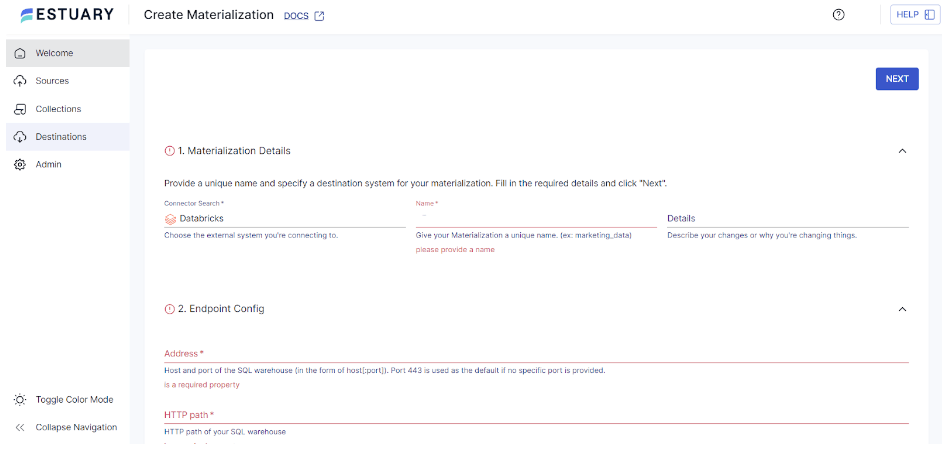

- On the Create Materialization page, enter the necessary information, such as the Address, HTTP path, and Catalog Name, in the appropriate fields.

- Collections added to your capture are automatically included in your materialization. If needed, you can also use the Source Collections section to add a capture to your materialization manually.

- Then, click NEXT > SAVE AND PUBLISH to materialize Flow collections of your NetSuite data into Databricks tables.

Method 2: Using CSV Export/Import to Integrate NetSuite with Databricks

This method involves extracting data from NetSuite as CSV and loading the extracted data into Databricks.

Step 1: Export NetSuite Data as CSV Files

To export NetSuite data as CSV files, follow the steps below:

- In your NetSuite account, navigate to Setup > Import/Export > Export Tasks > Full CSV Export.

- Click Submit. A progress bar that indicates the status of your export will appear.

- Upon completion of the export process, a File Download window will pop up.

- In the File Download window, select Save this file to disk, then click OK.

- A Save As dialog box opens with the File Name field highlighted.

- Enter the desired file name for your CSV files.

- Click Save to download your files.

- The files will be saved in ZIP format. Open the ZIP file to view the titles of the exported CSV files.

- Use a spreadsheet program or text editor to open and review the CSV file.
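
If you would rather review the export programmatically than in a spreadsheet, the sketch below lists every CSV file inside the downloaded ZIP and previews its header and first few rows. It uses only the Python standard library. The function name `preview_csv_export` and the file name `netsuite_export.zip` are hypothetical, and the UTF-8 encoding is an assumption about your export, so adjust it if your files use a different encoding.

```python
import csv
import io
import zipfile


def preview_csv_export(zip_path, max_rows=3):
    """Return {csv_name: (header, first_rows)} for every CSV inside the ZIP."""
    previews = {}
    with zipfile.ZipFile(zip_path) as archive:
        for name in archive.namelist():
            if not name.lower().endswith(".csv"):
                continue  # skip anything that is not a CSV file
            with archive.open(name) as raw:
                # utf-8-sig drops a byte-order mark if the export includes one
                text = io.TextIOWrapper(raw, encoding="utf-8-sig", newline="")
                reader = csv.reader(text)
                header = next(reader, [])
                # Take at most max_rows data rows for the preview
                rows = [row for _, row in zip(range(max_rows), reader)]
            previews[name] = (header, rows)
    return previews


# Example usage (hypothetical file name):
# for name, (header, rows) in preview_csv_export("netsuite_export.zip").items():
#     print(name, header, rows)
```

A quick preview like this can help you confirm column names and spot empty files before you upload anything to Databricks.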

Step 2: Import CSV Files into Databricks

- In the Databricks workspace, click the Data tab in the sidebar to open the Data View window.

- Click the Upload File button and select the CSV files you want to upload.

- By default, Databricks assigns a table name based on the file name or format. However, you can change the settings or add a unique table name as required.

- After a successful upload, select the Data tab in your Databricks workspace to confirm the files are correctly uploaded.
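
As an alternative to the upload UI, you can load a CSV file from a Databricks notebook with PySpark. The following is a minimal sketch, not an official procedure: it assumes the file has already been placed somewhere your workspace can read, and the path in the usage comment, the helper names `safe_name`, `table_name_from_file`, and `load_csv_to_table`, and the fallback table name are all hypothetical. In a Databricks notebook, the `spark` session is predefined, so the function takes it as an argument.

```python
import re


def safe_name(raw, fallback="col"):
    """Lowercase a name and replace unsupported characters with underscores."""
    cleaned = re.sub(r"[^0-9a-zA-Z_]+", "_", raw).strip("_").lower()
    return cleaned or fallback


def table_name_from_file(file_path):
    """Derive a table name such as 'sales_orders' from '.../Sales Orders.csv'."""
    file_name = file_path.rsplit("/", 1)[-1]
    stem = file_name.rsplit(".", 1)[0]
    return safe_name(stem, fallback="netsuite_export")


def load_csv_to_table(spark, csv_path, table_name=None):
    """Read one CSV file with Spark and save it as a table."""
    table_name = table_name or table_name_from_file(csv_path)
    df = (
        spark.read
        .option("header", True)       # first row holds the column names
        .option("inferSchema", True)  # let Spark guess the column types
        .csv(csv_path)
    )
    # Column names with spaces (e.g. "Internal ID") can be rejected when
    # saving a table, so rename them to safe identifiers first.
    df = df.toDF(*[safe_name(c) for c in df.columns])
    df.write.mode("overwrite").saveAsTable(table_name)
    return table_name


# Example usage in a notebook (hypothetical path):
# load_csv_to_table(spark, "/Volumes/main/default/netsuite/Customers.csv")
```

The `overwrite` mode replaces the table on every run, which mirrors the full re-import that this manual method requires.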

Limitations of Using CSV Files to Migrate from NetSuite to Databricks

Although migrating data using CSV files can be effective for a NetSuite-Databricks integration, there are some associated limitations, including:

- Resource-Intensive: This method requires manual effort to extract NetSuite data as CSV files and import those files into Databricks. It can be time-consuming and resource-intensive, particularly for frequent data transfers.

- Data Integrity Issues: Due to the manual efforts involved in this method, there’s an increased risk of data errors or inconsistencies, leading to reduced data integrity.

- Lacks Real-time Integration: For any updates, deletions, or additions to your NetSuite data, you must either manually update or re-import the entire dataset to reflect the changes in Databricks. As a result, this method isn’t suitable for applications requiring real-time data updates.
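
To make the last limitation concrete, the sketch below compares two CSV exports taken at different times and reports which records were inserted, updated, or deleted. This is the kind of bookkeeping you would have to do yourself to avoid re-importing everything. It is an illustration only: the function names are hypothetical, and it assumes each export has a unique key column (here called `Internal ID`, which may differ in your data).

```python
import csv
import io


def index_by_key(csv_text, key):
    """Map each row's key value to the full row (as a dict)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[key]: row for row in reader}


def diff_snapshots(old_csv, new_csv, key):
    """Classify rows as inserted, updated, or deleted between two exports."""
    old_rows = index_by_key(old_csv, key)
    new_rows = index_by_key(new_csv, key)
    inserted = sorted(k for k in new_rows if k not in old_rows)
    deleted = sorted(k for k in old_rows if k not in new_rows)
    updated = sorted(
        k for k in new_rows if k in old_rows and new_rows[k] != old_rows[k]
    )
    return {"inserted": inserted, "updated": updated, "deleted": deleted}


# Two small sample exports (made-up data for illustration)
yesterday = "Internal ID,Name\n1,Acme\n2,Globex\n3,Initech\n"
today = "Internal ID,Name\n1,Acme\n2,Globex Corp\n4,Umbrella\n"
changes = diff_snapshots(yesterday, today, key="Internal ID")
print(changes)
```

Even with a helper like this, you would still need to export both snapshots by hand and apply the changes in Databricks yourself, so the data is only as fresh as your most recent export.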

Conclusion

Connecting NetSuite to Databricks provides a valuable solution for harnessing the combined strength of integrated data management and advanced analytics. You can connect NetSuite to Databricks by using CSV file export/import or Estuary Flow.

While using the CSV method to migrate NetSuite data to Databricks may seem straightforward, it has drawbacks: it is resource-intensive, prone to data integrity issues, and lacks real-time integration. In contrast, Estuary Flow offers seamless NetSuite integration with Databricks, along with automation, improved data integrity, and real-time updates.

Looking for a solution for effortless integration between sources and destinations of your choice? Sign up for your Estuary account today! With no-code connectors, CDC capabilities, and robust transformation support, you won’t have to look any further.

FAQs

Can I use a VNet with Azure Databricks?

Yes, you can use an Azure Virtual Network (VNet) with Azure Databricks.

How does NetSuite pricing work?

NetSuite operates on a modular pricing structure; you only pay for what you actually use. Your license consists of three components: the core platform, optional modules, and the number of users. There’s also a one-time implementation fee for the initial setup.

Is real-time data integration possible between NetSuite and Databricks?

Yes, real-time data integration between NetSuite and Databricks is possible using Estuary Flow. Estuary Flow supports Change Data Capture (CDC), enabling immediate updates from NetSuite to Databricks for timely analytics and decision-making.

About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
