Want to power a project or app with Elasticsearch, but all your data lives in MySQL?

There are lots of ways to move data from MySQL to Elasticsearch, but not all of them are easy or perform optimally. Especially if you’re not an expert in both platforms, many tutorials you’ll find online will fall flat.

In this post, we’ll demonstrate an easy, no-code method for setting up a real-time MySQL to Elasticsearch pipeline. This method requires minimal expertise and can be set up in minutes using the Estuary Flow web app.

We’ll also offer an adaptation of Elastic’s native method for integrating MySQL data, which uses Logstash and the JDBC input plugin.

Let’s take a look at the platforms in question before we get started.

What Is MySQL?

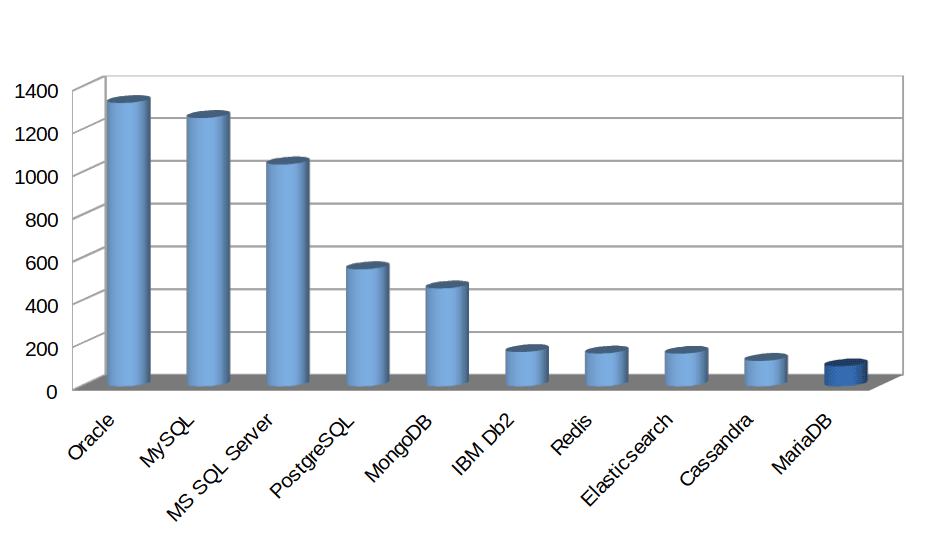

MySQL is one of the most popular open-source relational database management systems (RDBMS) used by enterprises of all sizes.

MySQL is based on SQL and is a cornerstone of many software stacks. It helps businesses build and maintain powerful data-driven B2B services and customer-facing web applications. MySQL is open-source and has a rich feature set with excellent reliability. It is also supported through regular updates by Oracle.

Uses Of MySQL And Reasons For Its Popularity

MySQL is most ideally suited for use cases that rely on simple, read-only query logic, but is generally an adaptable and easy-to-use database.

Here are some of the most important reasons why businesses choose MySQL over other database management systems.

MySQL Is Secure

Security is ultimately up to the user to implement, but MySQL offers all the tools you need, including SSL encryption, authentication plugins, and data masking. This is essential when personally identifiable information and financial details are stored in the database.

MySQL Is Scalable

MySQL’s scalability is unrivaled since it facilitates the management of deeply embedded apps using a smaller footprint. The database management platform can work in massive warehouses that stack terabytes of data. It is also flexible and allows the deployment from traditional apps and software to eCommerce platforms too.

MySQL Empowers System Admins

With its distinct storage-engine framework, system admins can configure the MySQL database server for flawless performance. Regardless of the queries received, MySQL is designed to meet even the most demanding applications while ensuring optimum speed.

What Is Elasticsearch?

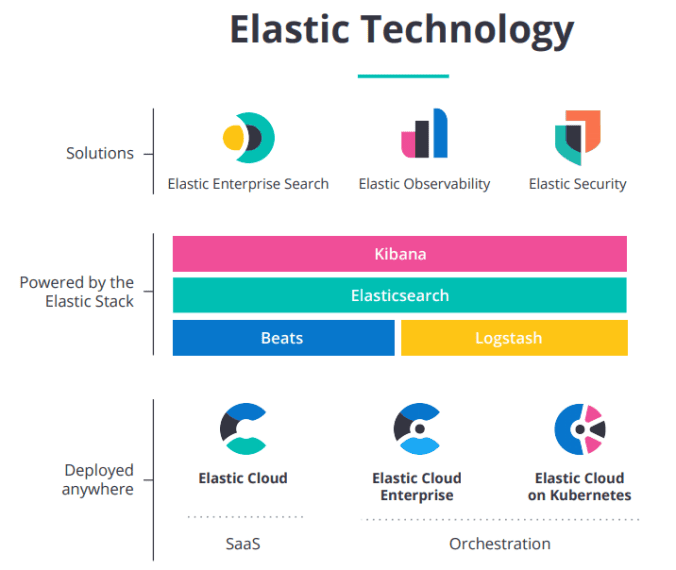

Elasticsearch (now known as Elastic) is an open-source and distributed analytics search engine for all types of data. The software can handle textual, numerical, structured, unstructured, and geospatial data. The platform is built on Apache Lucene and was first released in 2010.

Since then, the platform is known for its REST APIs, speed, and scalability. The entire Elastic Stack is a set of free and open tools for data ingestion, storage, analysis, enrichment, and visualization.

The entire system works by feeding data into Elasticsearch from a variety of data sources. These sources can include metrics, logs, and web apps. This data is then parsed, enriched, normalized, and indexed in the Elasticsearch platform.

From here, users can run complex queries against their data sets and create powerful visualizations using Kibana. They can also share dashboards, and manage the Elastic Stack. Logstash, Elasticsearch, and Kibana are referred to as the ELK Stack and work in tandem with the main platform.

Significant Uses Of Elasticsearch

Used by companies like Cisco, Citigroup, and GitHub, Elasticsearch indexes all types of data very quickly and is scalable. These qualities mean that Elasticsearch’s search engine can be used for a large variety of use cases:

- Full-text search

- Website search

- Security analytics

- Big data analytics

- Enterprise search

- Business Analytics

- Logging and log analytics

- App performance monitoring

- Geospatial data analysis and visualization

- Infrastructure metrics and container monitoring

Programming Languages Supported

Elasticsearch Data supports a variety of languages. The platform and its official clients are available for:

- Go

- Perl

- PHP

- Java

- Ruby

- Python

- .NET (C#)

- JSON Docs

- JavaScript (Node.js)

Should You Use Elasticsearch Over MySQL?

In very simple terms, both MySQL and Elastic can store data and allow data to be queried. But they are far from interchangeable.

MySQL is ideally suited to provide the source of truth for all your transactional workflows. Elastic is more specialized: it’s designed with search in mind. It has stronger indexing and handles high-volume real-time operations better.

We don’t recommend entirely moving away from MySQL in favor of Elasticsearch. Businesses should always opt to use the two platforms in tandem since the two have their unique characteristics that can lend to better data handling, querying, and management.

It’s a good idea to keep MySQL as a record-keeping system and perform all CRUD (Create, Read, Update, Delete) operations from your app against MySQL. Elasticsearch can then be used to move data from MySQL to your app.

This way if Elasticsearch goes down, you only lose the search feature but your primary data store is still intact. You can configure ElasticSearch as a cluster since it can handle a large number of rows due to its scalability.

Plus, a complete migration from MySQL will have you face many challenges. Some of the most substantial issues include:

- Struggling to deal with transactional data through Elasticsearch.

- Not all MySQL database tables map easily to Elasticsearch’s schema/document structures and parameters.

- Updates and deletions need to be carefully handled. Dealing with updates and deletes is very chaotic because the types in Elasticsearch are MySQL’s alternative to tables. The two do not format well.

- Elasticsearch isn’t great at dealing with complex data relationships. MySQL, on the other hand, can specify relationships in tables that are easy to update/delete based on foreign key relationships. However, in Elasticsearch, it is very difficult to relate two separate types (tables) and it requires a lot of strategizing and planning to link the two together.

The true implementation should involve a mix of the two platforms; MySQL and Elasticsearch. This way, MySQL can be treated as a primary data store and Elasticsearch can lend its use as a secondary data store. It will be especially useful if large data volumes are to be queried.

Still, many businesses may need to use one or the other and if you’re already deploying MySQL but require a platform to handle complex queries, Elasticsearch may be the one to choose.

How To Ingest And Extract Data From MySQL To Elasticsearch

Now that you’re reacquainted with the two platforms and their uses, let’s outline the main processes required to ingest and extract data from MySQL to Elastic.

If you use a data integration platform such as Estuary Flow, the process is simple and well laid out. With the help of the relevant documentation and their straightforward connector guides, you can easily use the configuration file to integrate and parse your data in Elasticsearch.

You can also use a Logstash pipeline for a more DIY approach.

Method 1: Integrate MySQL and Elasticsearch with Estuary Flow

"Flow is 1000x times better than LogStash or Elastic Enterprise Data Ingestion Tool (for moving data from MySQL to Elastic)." - Pompato

In this method, we’ll connect your MySQL database to Elasticsearch using an Estuary Flow data pipeline. You’ll create it using Flow’s no-code web app. Because the data integrations are powered by change data capture, all events in MySQL will be reflected in Elastic within milliseconds.

Before you begin, head to the web app and sign up for a free trial if you don’t have an account.

Create a Capture From MySQL

- Go to the create a new capture page of the Estuary web app and select the MySQL connector.

- Configure your MySQL database to meet the prerequisites.

- Add a unique name for the capture. Provide the MySQL server address, database username (this should be “flow_capture” if you followed the prerequisite steps), and a password.

- Click the Next button. Flow lists all the tables in your database, which it will convert into Flow data collections. You can remove any tables you don’t want to capture.

- Click Save and Publish.

Materialize data to Elasticsearch

- Click the Materialize collections button to continue.

- Choose the Elasticsearch connector.

- Add a unique name for the materialization.

- Provide the Elastic endpoint in the format

https://CLUSTER_ID.REGION.CLOUD_PLATFORM.DOMAIN:PORT, username, and password. - Scroll down to view the Collection Selector. Each data collection you just captured from MySQL will be mapped to a separate index in Elasticsearch. Provide a name for each.

- Optional: for finer-grain control of how fields are mapped to Elastic field types, use field overrides.

- Click Next.

- Click Save and Publish.

All historical data from your MySQL database will be copied to Elasticsearch indices. Any new data that appears in MySQL will also be copied to Elastic in real-time.

For more help with this method, see the Estuary Flow documentation on:

Method 2: Integrate MySQL and Elasticsearch with Elastic

Alternatively, you can use Elastic’s documented process using Logstash, which we’ve adapted below.

Note that this method is more challenging to implement, and won’t capture MySQL DELETE events. Though it’s an event-based integration, it’s not log-based change data capture; it instead relies on timestamps. Learn more about types of MySQL CDC here.

Gather Credentials

To specify the details of the connection, you’ll need your Cloud ID. Go to the Kibana main menu and select “Management” > “Integrations” > “View Deployment Details”.

To authenticate, you’ll use your Elasticsearch API key.

Get Logstash and the MySQL JDBC driver

- Download and install Logstash.

- Download and unpack the JDBC driver and take note of the driver’s location for later use.

Add Timestamps to MySQL Tables

For each table you plan to move to Elasticsearch, you’ll need a timestamp column with the last updated time.

This example adds a column called modification_time to the table my_table in the database my_db.

plaintextUSE my_db;

ALTER TABLE my_table

ADD modification_time TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP;Set up a Logstash pipeline with the JDBC input plugin

Next, you’ll need to set up a pipeline to ingest data from MySQL via the JDBC plugin

- Create a new file called

jdbc.confin<localpath>/logstash-7.12.0.

Paste the code below into the file to generate the Logstash pipeline, being sure to substitute your credentials and configuration details.

plaintextinput {

jdbc {

jdbc_driver_library => "<driverpath>/mysql-connector-java-<versionNumber>.jar"

jdbc_driver_class => "com.mysql.jdbc.Driver"

jdbc_connection_string => "jdbc:mysql://<MySQL host>:3306/es_db"

jdbc_user => "<myusername>"

jdbc_password => "<mypassword>"

jdbc_paging_enabled => true

tracking_column => "unix_ts_in_secs"

use_column_value => true

tracking_column_type => "numeric"

schedule => "*/5 * * * * *"

statement => "SELECT *, UNIX_TIMESTAMP(modification_time) AS unix_ts_in_secs FROM es_table WHERE (UNIX_TIMESTAMP(modification_time) > :sql_last_value AND modification_time < NOW()) ORDER BY modification_time ASC"

}

}

filter {

mutate {

copy => { "id" => "[@metadata][_id]"}

remove_field => ["id", "@version", "unix_ts_in_secs"]

}

}

output {

elasticsearch {

index => "rdbms_idx"

ilm_enabled => false

cloud_id => "<DeploymentName>:<ID>"

cloud_auth => "elastic:<Password>"

ssl => true

api_key => "<myAPIid:myAPIkey>"

}

}With the new configuration saved, launch Logstash:

plaintextbin/logstash -f jdbc.confLogstash will start to move data from Postgres to Elasticsearch.

Conclusion

While MySQL is an excellent cornerstone for transactional workloads, Elasticsearch provides unparalleled capabilities when it comes to search functionality. The two are a great combination to power your app or project, as long as they agree about the current state of your data.

That’s why it’s important to connect MySQL to Elasticsearch in a way that:

- Syncs the two platforms with no delay.

- Is relatively easy and quick to accomplish.

- Accurately captures all types of data changes.

Elastic provides a customizable DIY option, but if you’re looking for a quicker method backed by CDC and real-time streaming, we recommend Estuary Flow.

Beyond MySQL and Elastic, Flow supports a variety of other data sources and destinations.

To start building your first pipeline, you can start your free trial or contact us for help with enterprise-level deployments.

Related Articles From Estuary

Author

Popular Articles