In today’s fast-paced and competitive business environment, organizations constantly seek ways to improve their decision-making processes. To succeed, they need to make use of the data they generate.

However, since the data is generated at an extraordinary pace, it’s an ongoing challenge to guarantee that data is accurate, high-quality, and nearly real-time. Hence, we need scalable data warehousing platforms and powerful analytical engines… and robust data pipelines to connect them.

Today’s guide will explore two such platforms; MongoDB and BigQuery. MongoDB is a popular NoSQL database that provides a flexible and scalable way to store unstructured data. BigQuery, on the other hand, is a cloud-based data warehousing solution that can perform complex analytics and generate insights.

MongoDB and BigQuery serve different purposes. To fully harness the power of data, organizations need to move data seamlessly between them.

This article will provide two step-by-step guides to capturing data from MongoDB and moving it to BigQuery. However, before we start, let’s look at these platforms.

What is MongoDB?

MongoDB is an open-source NoSQL database. NoSQL means the data aren’t stored in tables, schemas, columns, and rows with a fixed structure, as seen in relational databases. Instead, MongoDB uses collections and is document-oriented with a JavaScript Object Notation (JSON)-like document structure. Values here are stored in the form of key-value pairs.

MongoDB is popular for its scalability, speed, high performance, and ability to handle data modeling and data management of massive datasets. It also supports many programming languages like Python, Java, and Swift.

Features of MongoDB

Some key features of MongoDB include:

- Its auto replication feature and high availability. Thus, MongoDB can handle heavy traffic and a large amount of data.

- Its document-oriented structure makes it a flexible and scalable solution for various data types.

- The sharding technique and load balancing allow it to horizontally partition data across multiple machines or servers.

- Powerful indexing and querying capabilities for advanced data analysis.

- GridFS: This file system allows you to store and retrieve large files directly from the database.

- It supports multiple storage engines and programming languages, so integrating various systems and tools is easy.

What is BigQuery?

BigQuery is Google’s fully-managed, cloud-native enterprise data warehousing platform with analytics capabilities. It allows users to run SQL queries fast through its highly-optimized fast query engine and infrastructure’s processing power. BigQuery also has machine-learning capabilities and can collect data from various sources.

This platform is highly scalable and was designed on Google’s Dremel technology. Thus, you can quickly and seamlessly increase your data storage and processing capacity as your needs grow.

Features of BigQuery

Some fantastic features of the fast and serverless data warehouse are:

- Its data processing architecture has a built-in query engine that ensures large query runs in seconds.

- It supports queries using Structured Query Language (SQL) and has a Relational Database Management System (RDMS) structure.

- Real-time analytics with seamless scalability.

- You can manage infrastructure across multiple clouds (multi-cloud capability)

- It has powerful data analytics, machine learning, and artificial intelligence capabilities.

- BigQuery GIS offers Geospatial analytics to provide location intelligence.

- Database administration tasks are minimized and made simple.

- Ability to connect with multiple data sources and integration with Google Cloud Platform (GCP).

Data Integration between MongoDB and BigQuery

Let’s lay out how you can move data from MongoDB to BigQuery now that we have covered the uses of both platforms and what they bring to your data stack.

There are various ways of achieving data integration between both platforms. For starters, we can capture data from MongoDB to BigQuery using the MongoDB to BigQuery (batch) Google CloudDataFlow template. This Dataflow lets you quickly load your data inside BigQuery for further analysis. Alternatively, you can use third-party data integration platforms such as Estuary Flow, a much simpler method.

However, regardless of your choice, with relevant documentation and research, you can easily capture and integrate both platforms.

Method 1: Capture Data from MongoDB to BigQuery Using the BigQuery Dataflow Template

Generally, Google Cloud Dataflow works as a unified, serverless stream and bath processing system. The MongoDB to BigQuery Dataflow template is no different. This batch pipeline allows you to read documents from MongoDB and writes them to BigQuery as specified by your userOption parameter. This helps take away any operational overhead so you can focus on migrating your data from MongoDB to BigQuery.

Here are the steps involved.

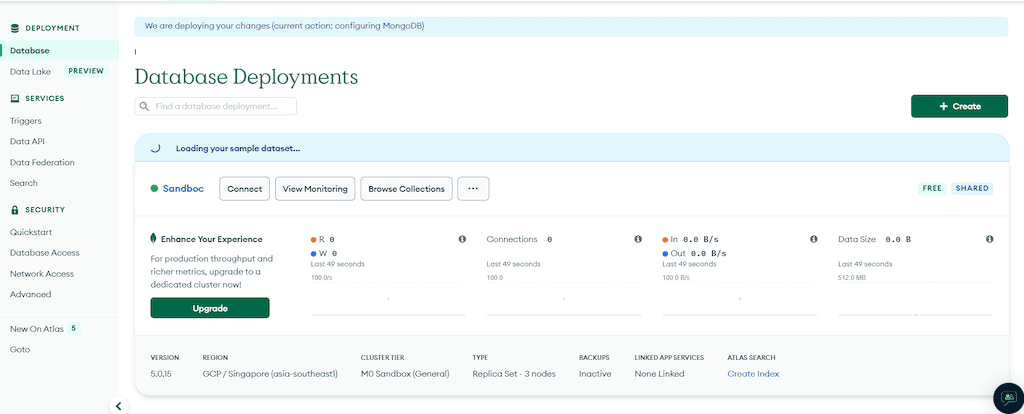

Set up MongoDB

- Create an account on MongoDB and choose Google Cloud as your provider. You will use Google Cloud to create a BigQuery data warehouse.

- Create and name your cluster. For my cluster, I went with Sandboc as the name.

- Hover over to the database page.

- I’ll use the MongoDB-provided sample dataset to keep this tutorial simple and easy to follow. To use it, simply click on Create and load sample dataset.

- Next, we head to Database Access under Security to create a Database user. You input a username and password and select a “built-in” role. We will select Read and Write to any database because we want to modify the database. Once done, click on Add user.

Create the BigQuery Dataset

- We need to create a BigQuery dataset table for our data. To do this, log in to your Google Cloud Console. From the welcome page, search for BigQuery and click on Run a query in BigQuery.

- Once you’re at your Google Cloud project, create a Dataset. Pick a descriptive name for your Dataset ID.

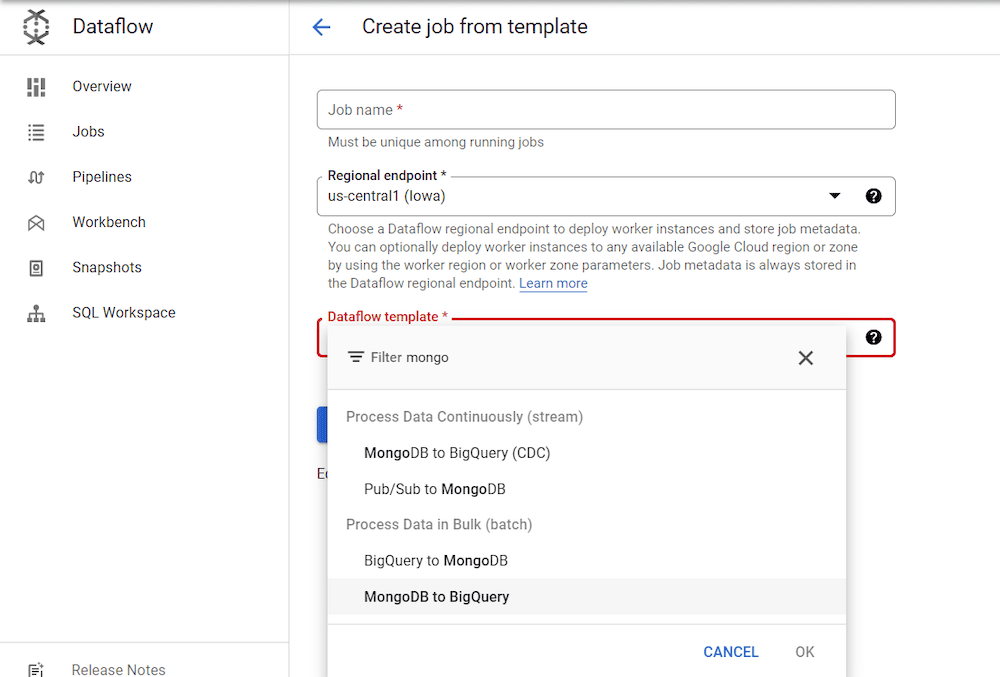

Create a Dataflow pipeline

- Create a Dataflow pipeline from Dataflow UI.

- From Dataflow, name your Job and pick the MongoDB to BigQuery template under the Process Data in Bulk (batch).

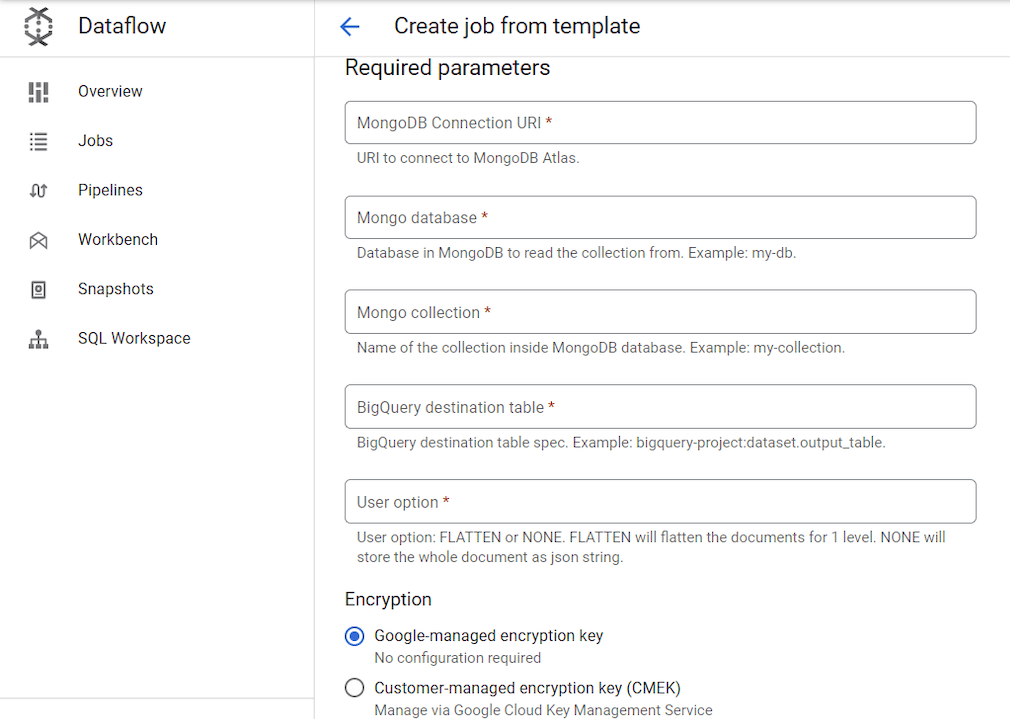

- The MongoDB to BigQuery under Process Data in Bulk (Batch) allows us to read data from MongoDB and write them to BigQuery as specified by our

userOptionparameter. However, MongoDB to BigQuery CDC (Change Data Capture) is for streaming. It allows us to access MongoDB change streams and incremental load.

We’ll use the batch method here. The next tutorial in this article will show a streaming CDC method. - Add parameters. Input the name of your MongoDB database, Mongo collection, and BigQuery destination table. For the User option, we can go with either

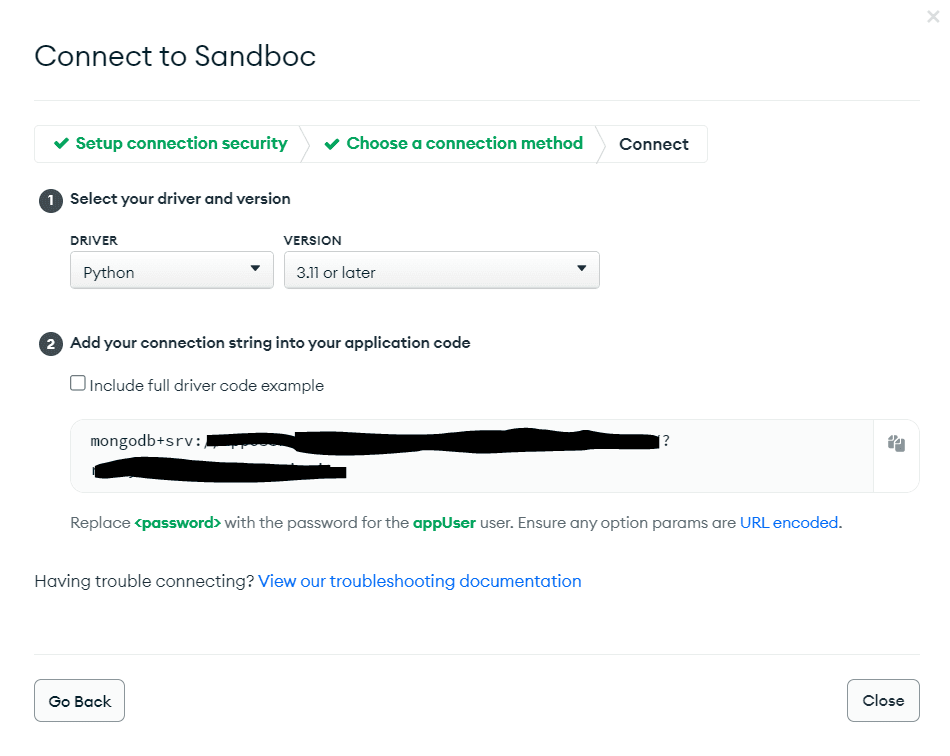

NONEorFLATTEN.FLATTENwill flatten our document into various columns, while NONE will store the document as JSON. Let’s go with NONE. For the database, the sample data we used earlier is calledsample_mflix. - Head over to the Database at MonogoDB. Connect to your sandbox and copy the Connection String. It is also called MongoDB Connection URI. Ensure you replace the <password> in the connection string with the password you created in step four.

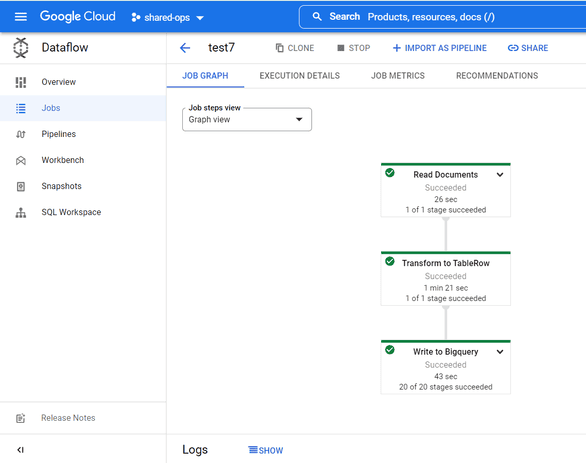

- Run the Dataflow job.

- The job gets queued and will start running once the status changes to Running. Once the job is executed successfully, its status changes to Succeed. You will now be able to see the data in the BigQuery table.

- Our data is loaded in BigQuery after every job has successfully run. You can go further and validate the data ingestion through Schema Validation.

That’s it for this method. You have successfully transferred data from MongoDB to Google BigQuery. You can now use BigQuery’s analytics capabilities to get insight from your data.

Method 2: Use Estuary Flow to Stream Data from MongoDB to BigQuery

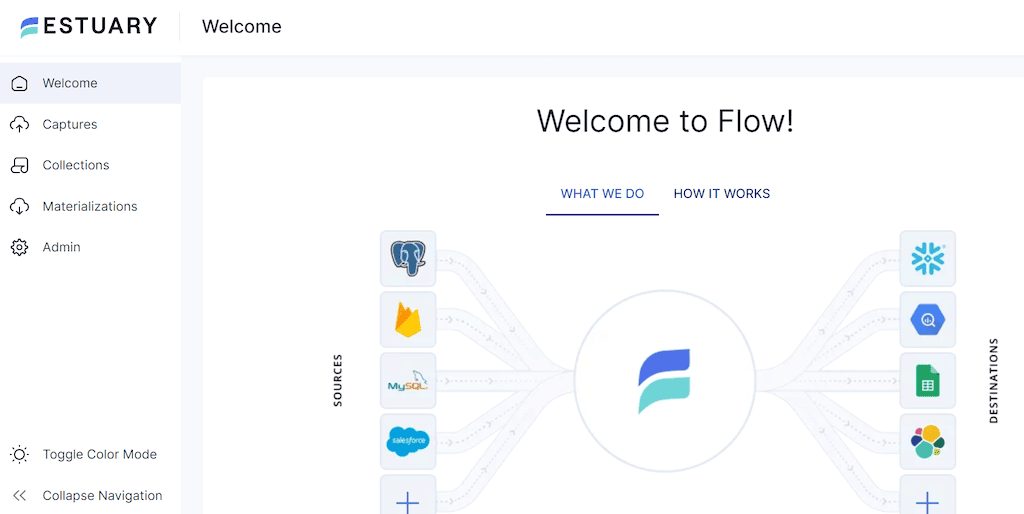

Similarly, we can use third-party Extract, transform, and load (ETL) or data integration tools like Estuary Flow to extract data from MongoDB to BigQuery.

Estuary Flow is a real-time data operation and integration platform with various unique connectors. With these connectors, data engineers can sync data and build pipelines with historical and real-time data across platforms with little to no code. A few of Estuary’s great features are its low-latency materialization, real-time scalable ETL capabilities, and cost-efficiency. Estuary Flow is $0.75/GB of data streamed without compromising timeliness.

Prerequisites

To get started, you’ll need the following:

- An Estuary free trial account. Just head over to the web app to sign up.

- A MongoDB database prepared for use with Flow:

- A username and password with Read access to any collections you want to capture.

- ReplicaSet enabled.

- Access granted to Estuary’s IP address: 34.121.207.128

- A username and password with Read access to any collections you want to capture.

- A BigQuery dataset

- A new Google Cloud Storage bucket in the same region as your BigQuery dataset.

- A new Google service account. When you create the account:

- Grant access to your Google Cloud project

- Grant user roles

roles/bigquery.dataEditor,roles/bigquery.jobUser, androles/storage.objectAdmin. - Generate a JSON service account key.

- Grant access to your Google Cloud project

Data Integration from MongoDB to BigQuery with Estuary Flows

Follow these step-by-step guides to get started.

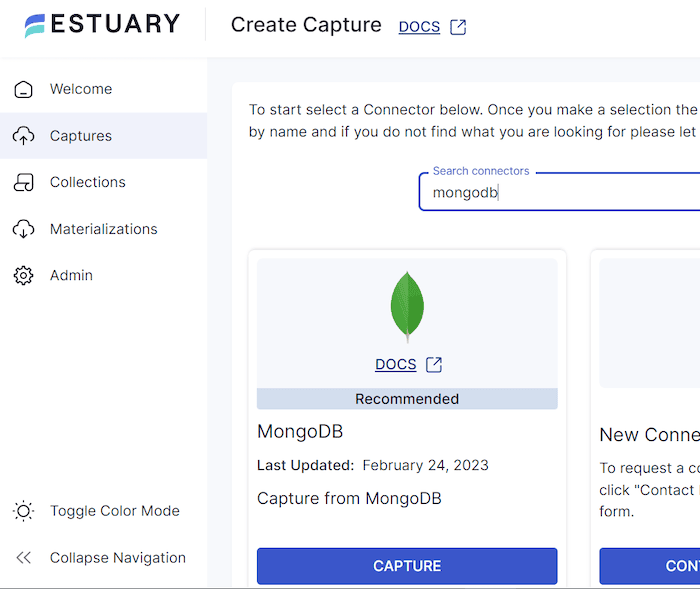

- Sign in to the Estuary web app and go to the Capture page.

- Search for the MongoDB connector and click on capture.

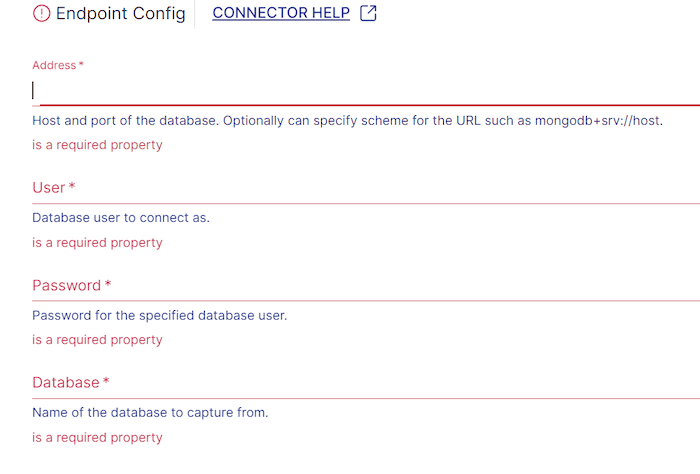

- Add a unique name and specify a source system for your capture. You will need to provide the Endpoint Config details. Details like the Address (connection string at MongoDB), user, password, and database name.

We created a database from the MongoDB sample file; the name is sample_mflix. Lastly, replace the <password> in the connection string with your password. - Click Next. Flow initiates a connection with MongoDB and identifies data collections.

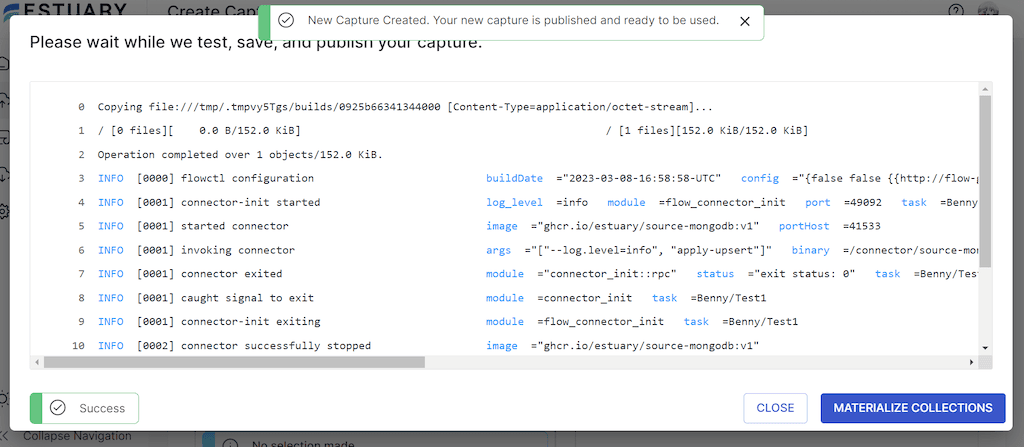

- Save and Publish.

- Click Materialize collections.

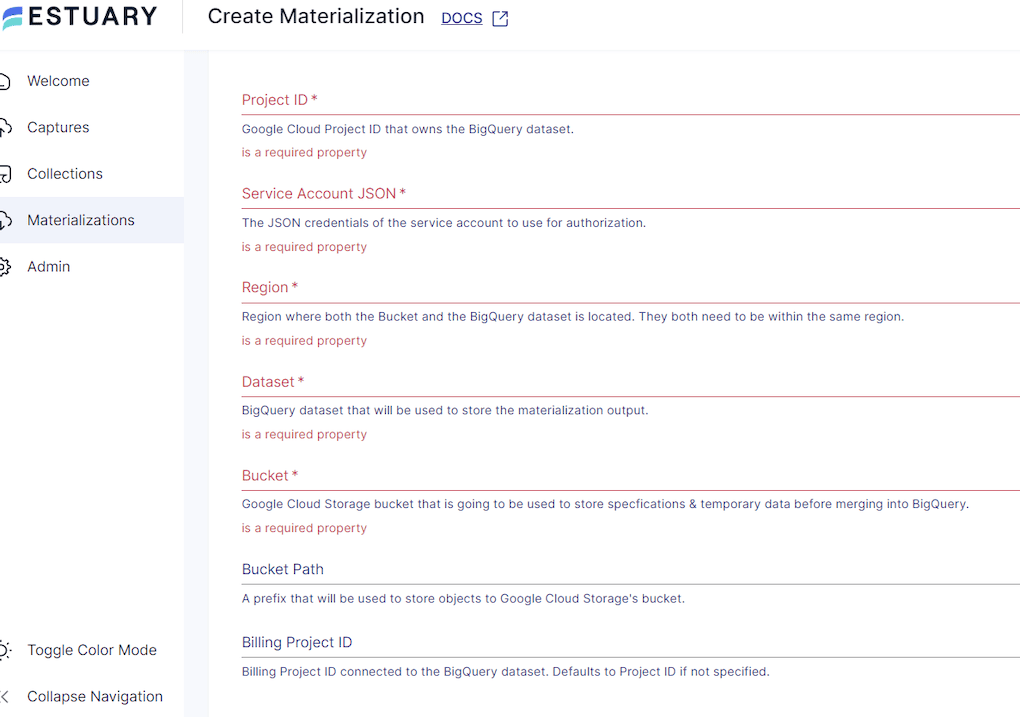

- Search and choose the BigQuery connector.

- Choose a unique name for the materialization.

- Provide the Endpoint details for the Google Cloud Project that owns the BigQuery dataset and authenticate with Google.

- Click Next, and then Save and Publish.

Each collection in your MongoDB database is mapped to a new BigQuery table. All historical data is immediately ported over to BigQuery. Going forward, as soon as new data appears in MongoDB collections, it’ll be materialized to BigQuery instantly.

With Estuary Flow, you can create endless variations of dataflows like this one using any supported connectors.

Check out the official documentation and blog to learn more about Flow.

- How to create a basic Data Flow

- Estuary’s Connectors: What are these?

- MongoDB’s Capture Connector

- BigQuery Materialization Connectors

Takeaway

Integrating across your different data platforms is critical to take full advantage of your organization’s data. But it’s challenging because of these platforms’ different data structures, models, and formats.

While there are many ways to overcome these challenges, it’s essential to prioritize flexibility, reliability, and efficiency to gain a competitive edge in your industry. Luckily, a powerful and seamless platform like Estuary can offer you all that and consolidate data from any data source like MongoDB to BigQuery in a few minutes.

So get started with Estuary by starting your free trial today or contacting our developer support team with any questions or use-cases.

You can also check out our blog to learn how Estuary Flows helps take this burden off your engineering team!

Related posts:

Author

Popular Articles