If your business is trying to gain a competitive edge in the fast-paced world of data analytics, one well-known strategy is to make use of the vast streams of real-time data available to it.

If you’re looking for ways to use this data for real-time analytics, consider streaming data from Amazon Kinesis to Amazon Redshift. By doing so, you can harness the real-time data ingestion capabilities of Kinesis and the powerful analytical capabilities of Redshift.

Many companies stream data from Kinesis to Redshift to process and analyze large datasets in real time. It’s a proven combination that lets your business respond swiftly to new information and insights.

If you’re looking to stream data from Kinesis to Redshift, you’ve come to the right place. Keep reading for a quick overview of both platforms and learn two different ways that you can stream data between the two platforms.

What Is Kinesis? An Overview

Amazon Kinesis, a cloud-based service offered by Amazon Web Services (AWS), is designed to process large-scale data streams from a wide range of sources in real time. Versatile by design, Kinesis can process many different data types, including audio, video, application logs, website clickstreams, and IoT telemetry.

Rather than waiting for a complete dataset to arrive and then processing it in batch, you can process and analyze data continuously with Kinesis. This helps you gain insights in seconds or minutes rather than hours, days, or weeks, so you can make data-driven decisions quickly. And because Kinesis is a managed service, you don’t have to manage infrastructure or hire additional administrators to run it.

Kinesis offers four key services:

- Kinesis Data Streams, a serverless streaming data service that simplifies the capture, storage, and processing of data streams at any scale. It can ingest gigabytes of data per second from a range of sources; the data collected is nearly immediately available for real-time analytics (within 70 milliseconds of collection).

- Kinesis Video Streams, a data streaming service you can use to securely stream videos from connected devices to AWS for analytics, ML, playback, and other processing.

- Kinesis Data Firehose, an ETL service you can use to load streaming data into data lakes, data stores, and analytics services. It reliably captures, transforms, and delivers streaming data to destinations such as Amazon S3, Amazon Redshift, Amazon OpenSearch Service (formerly Amazon Elasticsearch Service), and Splunk.

- Kinesis Data Analytics, a service that lets you analyze streaming data using standard SQL and send the results to destinations such as Kinesis Data Streams.
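To make the Kinesis Data Streams side concrete, here is a minimal sketch of a producer writing one JSON event to a data stream. It assumes a boto3 Kinesis client (whose `put_record` call takes `StreamName`, `Data`, and `PartitionKey`); the client is passed in so the function can be tried without AWS access. The helper name `send_event`, the stream name `clickstream`, and the partition key are hypothetical.

```python
import json
from typing import Any, Mapping, Protocol


class KinesisClient(Protocol):
    """The one method of a boto3 Kinesis client that this sketch uses."""

    def put_record(self, **kwargs: Any) -> Mapping[str, Any]: ...


def send_event(client: KinesisClient, stream_name: str,
               event: Mapping[str, Any], partition_key: str) -> str:
    """Serialize one event as JSON, write it to a Kinesis data stream,
    and return the sequence number Kinesis assigns to the record."""
    response = client.put_record(
        StreamName=stream_name,
        Data=json.dumps(event).encode("utf-8"),
        # Kinesis hashes the partition key to choose the shard for the record.
        PartitionKey=partition_key,
    )
    return response["SequenceNumber"]
```

With boto3 installed, you would pass `boto3.client("kinesis", region_name="us-east-1")` as `client`, for example `send_event(client, "clickstream", {"page": "/home"}, "user-42")`. Records that share a partition key map to the same shard.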

What Is Redshift? An Overview

Amazon Redshift is a fully managed, petabyte-scale data warehouse service provided by AWS. Designed for large-scale storage and analysis, it’s particularly effective at handling structured data and running complex queries.

One of the main benefits of Redshift is that it automatically provisions the required resources for data warehouses and also automates administrative functions like replication, backups, and fault tolerance. Apart from this, here are some other key features of Redshift that make it a popular data warehouse choice:

- Columnar storage. Redshift stores data in a columnar format. This format is particularly efficient for analytical queries because a query reads only the columns it references instead of entire rows.

- Massively parallel processing (MPP). Redshift automatically distributes data and query processing across multiple nodes. This helps maintain fast query performance as your data warehouse grows.

- Automated backups. Redshift automatically takes snapshots, which are point-in-time backups of a cluster, and stores them in Amazon S3. You can also copy snapshots to another AWS Region for disaster recovery purposes.

- SQL interface. Redshift uses standard SQL based on PostgreSQL, and you can query clusters through JDBC and ODBC drivers or the in-browser query editor. This makes it compatible with many existing SQL clients and data analysis tools.
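The columnar-storage point is easier to see with a toy model. The sketch below stores a small, hypothetical table both row by row and column by column, then counts how many values a single-column aggregate has to read in each layout. It deliberately ignores compression, block sizes, and everything else a real engine such as Redshift does.

```python
from typing import Dict, List, Tuple

# A hypothetical three-column table, used only to illustrate the idea.
rows: List[Dict[str, object]] = [
    {"user_id": 1, "country": "US", "revenue": 120.0},
    {"user_id": 2, "country": "DE", "revenue": 80.0},
    {"user_id": 3, "country": "US", "revenue": 45.5},
]

# Columnar layout: the same data, with each column's values stored together.
columns: Dict[str, list] = {name: [row[name] for row in rows] for name in rows[0]}


def total_revenue_row_store(table: List[Dict[str, object]]) -> Tuple[float, int]:
    """Sum revenue from a row layout; returns (total, values_read)."""
    total, values_read = 0.0, 0
    for row in table:
        values_read += len(row)  # a row store reads every field of each row
        total += float(row["revenue"])
    return total, values_read


def total_revenue_column_store(table: Dict[str, list]) -> Tuple[float, int]:
    """Sum revenue from a columnar layout; only one column is read."""
    revenue = table["revenue"]
    return float(sum(revenue)), len(revenue)
```

Both functions return a total of 245.5, but the row layout reads 9 values while the columnar layout reads 3. On wide tables with dozens of columns, that gap is what makes columnar scans cheaper for analytical queries.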

How to Stream Data from Kinesis to Redshift

There are several methods you can use to stream data from Kinesis to Redshift, each with its own benefits. Since Data Firehose is part of the Kinesis family, it’s the most direct Kinesis-native way to load data into Redshift. With that in mind, let’s look at two approaches you can use to stream data from Kinesis to Redshift.

Method #1: Using Kinesis Data Firehose to Integrate Kinesis with Redshift

Amazon Kinesis Data Firehose can capture streaming data and load it into Amazon Redshift automatically. However, this requires an intermediate S3 destination: Firehose first delivers your data to an S3 bucket and then issues a Redshift COPY command to load it from there into your Redshift cluster.

Here are the steps involved in this process:

- Sign in to the AWS Management Console and open the Kinesis console.

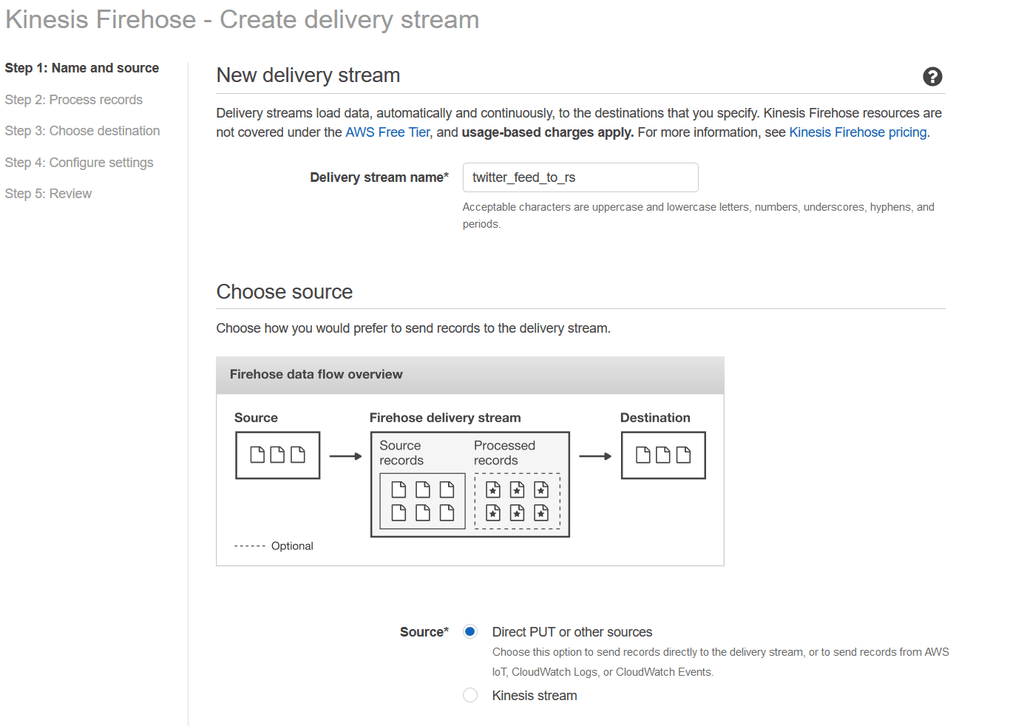

- Select Data Firehose from the navigation pane and choose Create Delivery Stream.

- Name your delivery stream and select the Source of the data. You will be provided with two options for Source:

  - Kinesis Stream: Configures a delivery stream that uses a Kinesis data stream as its data source.

  - Direct PUT or other sources: Creates a delivery stream that producer applications write to directly.

- Next, you must select the destination where you want to load the data. Choose Redshift and fill in the required details, including Cluster, User name, Password, Database, and Table.

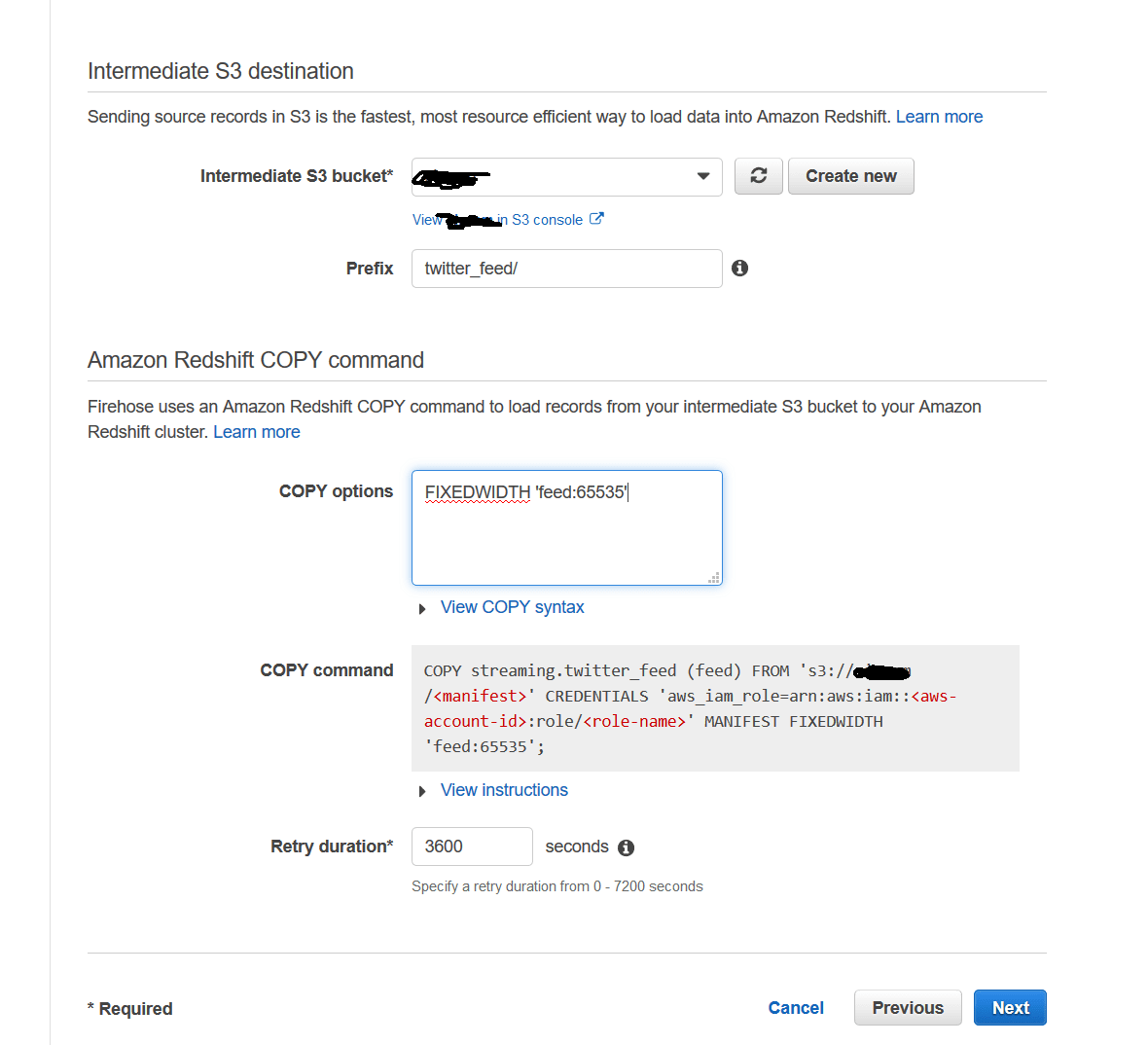

- As mentioned earlier, the integration requires an S3 bucket for staging the data. You must specify the details of the S3 bucket where the streaming data will be delivered. If you don’t already have one, create an S3 bucket and specify the details accordingly.

- In the Amazon Redshift COPY command section, specify any COPY options, which are parameters Firehose adds to the Redshift COPY command it runs (for example, `json 'auto'` for JSON records). Also set the Retry duration, which determines how long Firehose keeps retrying if the COPY into your Redshift cluster fails.

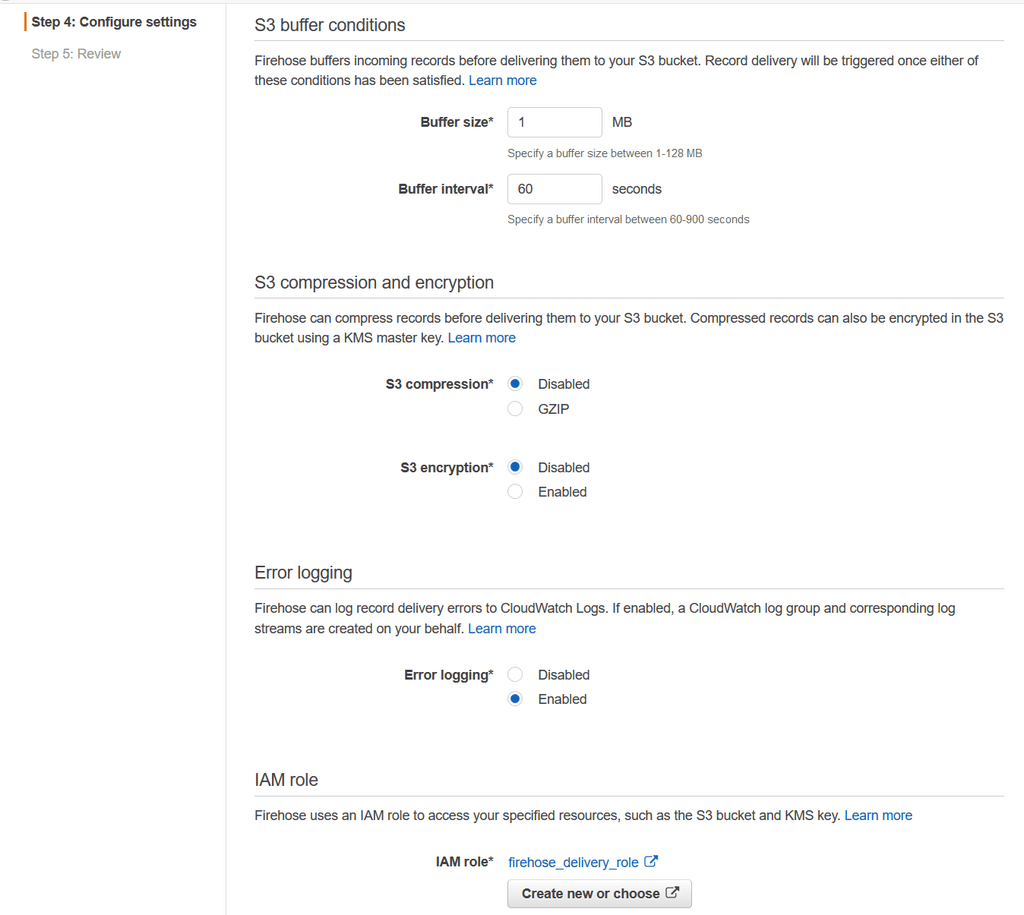

- Set up buffering hints, which determine how much data Firehose accumulates before delivering it to the destination. You can set a size limit and a time limit; delivery is triggered by whichever is reached first.

- Then, choose S3 compression and encryption settings and Error logging settings as required.

- Firehose requires you to grant permission to access your data sources and destinations. You can choose an existing IAM role or create a new one to do this.

- Finally, create the delivery stream. This sets up an automated, continuous pipeline that captures streaming data and loads it into Redshift. Depending on the source you chose, producers write either to the Kinesis data stream or directly to the delivery stream, for example from your applications or by using the AWS CLI.
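The console steps above map onto the Firehose `CreateDeliveryStream` API. The sketch below builds the keyword arguments for boto3’s `firehose.create_delivery_stream` for a Kinesis-stream source and a Redshift destination staged through S3. It assumes a single IAM role for the source, destination, and staging bucket (a simplification), and that records are JSON objects whose top-level keys match the target table’s column names, which is what the `json 'auto'` COPY option expects. The function name and every argument value are hypothetical placeholders, and the default buffer and retry values are chosen only for illustration.

```python
from typing import Any, Dict


def build_delivery_stream_request(
    stream_name: str,
    kinesis_stream_arn: str,
    role_arn: str,
    jdbc_url: str,
    table: str,
    username: str,
    password: str,
    bucket_arn: str,
    copy_options: str = "json 'auto'",
    retry_seconds: int = 3600,
    buffer_mib: int = 5,
    buffer_seconds: int = 300,
) -> Dict[str, Any]:
    """Return kwargs for boto3 firehose.create_delivery_stream that read from
    a Kinesis data stream and load into Redshift via an S3 staging bucket."""
    if not 0 <= retry_seconds <= 7200:
        raise ValueError("retry duration must be between 0 and 7,200 seconds")
    return {
        "DeliveryStreamName": stream_name,
        # Console "Source: Kinesis Stream"; producers write to the data stream.
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": kinesis_stream_arn,
            "RoleARN": role_arn,
        },
        "RedshiftDestinationConfiguration": {
            "RoleARN": role_arn,
            "ClusterJDBCURL": jdbc_url,
            "CopyCommand": {"DataTableName": table, "CopyOptions": copy_options},
            "Username": username,
            "Password": password,
            # How long Firehose keeps retrying a failed COPY.
            "RetryOptions": {"DurationInSeconds": retry_seconds},
            # Intermediate S3 bucket where Firehose stages data before COPY.
            "S3Configuration": {
                "RoleARN": role_arn,
                "BucketARN": bucket_arn,
                "BufferingHints": {
                    "SizeInMBs": buffer_mib,
                    "IntervalInSeconds": buffer_seconds,
                },
                "CompressionFormat": "UNCOMPRESSED",
            },
        },
    }
```

You would then call `boto3.client("firehose").create_delivery_stream(**request)`. Keeping the request as plain data makes it easy to review or version alongside your other infrastructure settings before anything touches AWS.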

Although this is a direct, native AWS solution for streaming data from Kinesis to Redshift, it has several drawbacks, including:

- Potential for data loss. While the retry duration can be set between 0 and 7,200 seconds, Kinesis Data Firehose retries every five minutes until the retry duration ends. If you set the retry duration to 0 seconds, Firehose doesn’t retry upon a COPY command failure. Unfortunately, this may result in data loss.

- Buffer size/interval limits. Firehose buffers incoming data until a size or time limit is reached before delivering it to the destination. These buffering limits can delay data availability and may not meet all your real-time processing requirements.
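Both drawbacks come down to simple arithmetic. The back-of-the-envelope sketch below assumes the five-minute retry cadence and 0 to 7,200 second retry range described above, plus the rule that delivery happens when either buffering hint is reached first. Treat the results as rough estimates, not guarantees: the COPY itself also takes time, and the function names are hypothetical.

```python
RETRY_INTERVAL_SECONDS = 300  # Firehose retries a failed COPY every five minutes
MAX_RETRY_DURATION_SECONDS = 7200


def approx_copy_retries(retry_duration_seconds: int) -> int:
    """Rough number of COPY retries that fit inside the retry duration."""
    if not 0 <= retry_duration_seconds <= MAX_RETRY_DURATION_SECONDS:
        raise ValueError("retry duration must be between 0 and 7,200 seconds")
    return retry_duration_seconds // RETRY_INTERVAL_SECONDS


def buffer_flush_delay(buffer_mib: float, interval_seconds: float,
                       throughput_mib_per_s: float) -> float:
    """Approximate seconds the first record in a buffer waits for delivery.

    Delivery is triggered by whichever buffering hint is reached first:
    the buffer filling up (size) or the interval elapsing (time).
    """
    if throughput_mib_per_s <= 0:
        return interval_seconds  # only the time limit can trigger delivery
    seconds_to_fill = buffer_mib / throughput_mib_per_s
    return min(seconds_to_fill, interval_seconds)
```

With a 5 MiB / 300-second buffer, a stream producing 1 MiB/s flushes about every 5 seconds, while a trickle of 0.01 MiB/s waits the full 300 seconds, and that wait happens before the COPY into Redshift even starts. A 7,200-second retry duration allows roughly 24 retries; a duration of 0 allows none.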

Method #2: Using No-Code Tools like Estuary Flow to Move Data from Kinesis to Redshift

In addition to using Amazon’s tools, you can also stream data from Kinesis to Redshift using a third-party tool like Estuary Flow.

To start streaming data from Kinesis to Redshift with Flow, sign in to your Estuary account. If you don’t already have one, register for a free account. Once you’re in, follow these steps.

Step 1: Configure Kinesis as the Source

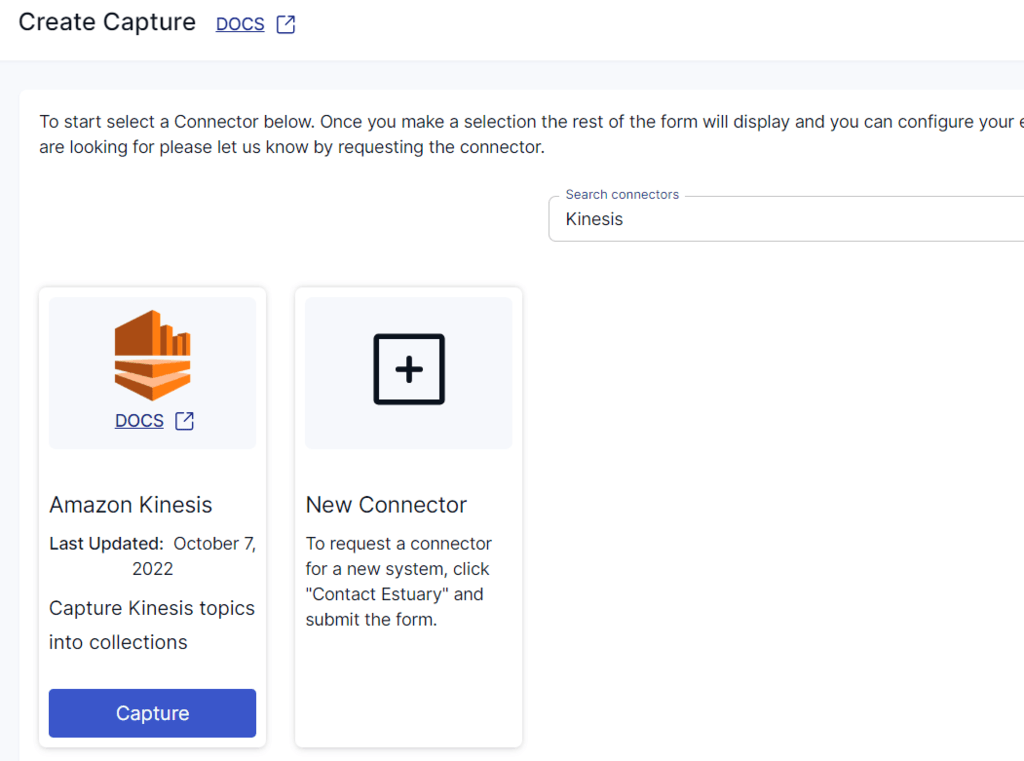

Click on Sources on the left pane of the Estuary dashboard. To proceed, click the + NEW CAPTURE button and use the Search connectors box to look for Kinesis. When the connector appears in the search results, click the Capture button.

Note: Before you start configuring the connector, there are a few prerequisites you must meet.

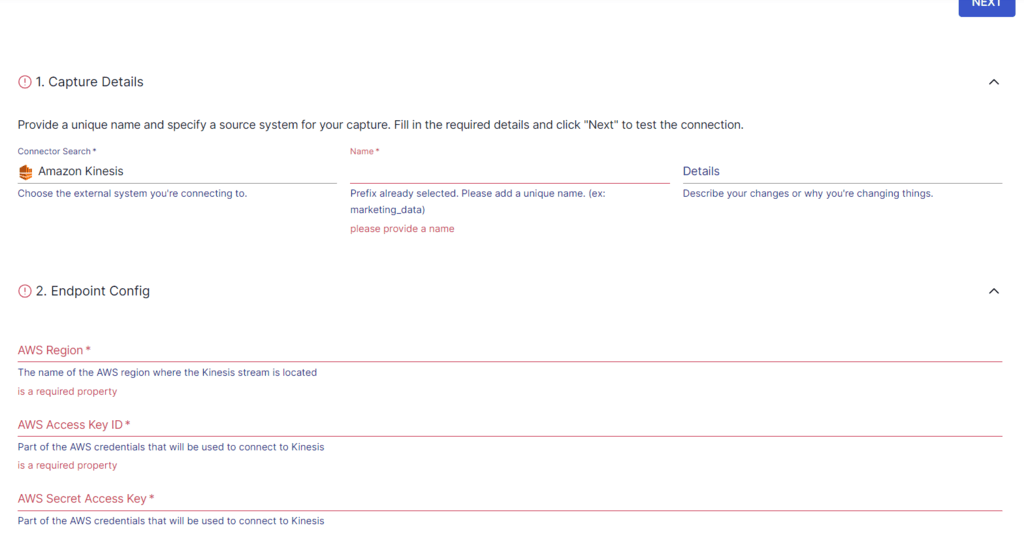

On the Amazon Kinesis connector page, specify the required details, such as a Name for the capture, the AWS Region, Access Key ID, and Secret Access Key. Then, click NEXT followed by SAVE AND PUBLISH.

The connector will then capture data from Amazon Kinesis streams into a Flow collection.
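Before saving the capture, you may want to confirm that the Access Key ID and Secret Access Key you entered can actually see your streams in the chosen AWS Region. The sketch below is one hypothetical pre-flight check using the Kinesis `ListStreams` API through a boto3 client, paging with `ExclusiveStartStreamName`. It only checks that the streams are listable; it does not verify every permission the Estuary connector needs, so still review the connector’s prerequisites. The function name and stream names are illustrative.

```python
from typing import Any, Dict, Iterable, List, Mapping, Protocol, Set


class KinesisLister(Protocol):
    """The one method of a boto3 Kinesis client that this sketch uses."""

    def list_streams(self, **kwargs: Any) -> Mapping[str, Any]: ...


def missing_streams(client: KinesisLister, expected: Iterable[str]) -> List[str]:
    """Return the expected stream names these credentials cannot list."""
    seen: Set[str] = set()
    kwargs: Dict[str, Any] = {}
    while True:
        page = client.list_streams(**kwargs)
        names = page.get("StreamNames", [])
        seen.update(names)
        if not page.get("HasMoreStreams") or not names:
            break
        # Resume listing after the last stream name on this page.
        kwargs = {"ExclusiveStartStreamName": names[-1]}
    return sorted(set(expected) - seen)
```

For example, `missing_streams(boto3.client("kinesis", region_name="us-east-1"), ["clickstream"])` returns an empty list when the stream is visible, and the names of any streams it could not find otherwise.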

Step 2: Configure Redshift as the Destination

After a successful capture, you’ll see a pop-up window displaying the details of the capture. Click the MATERIALIZE COLLECTIONS button on this pop-up to start setting up the destination end of the pipeline. Alternatively, navigate back to the Estuary dashboard and click Destinations > + NEW MATERIALIZATION.

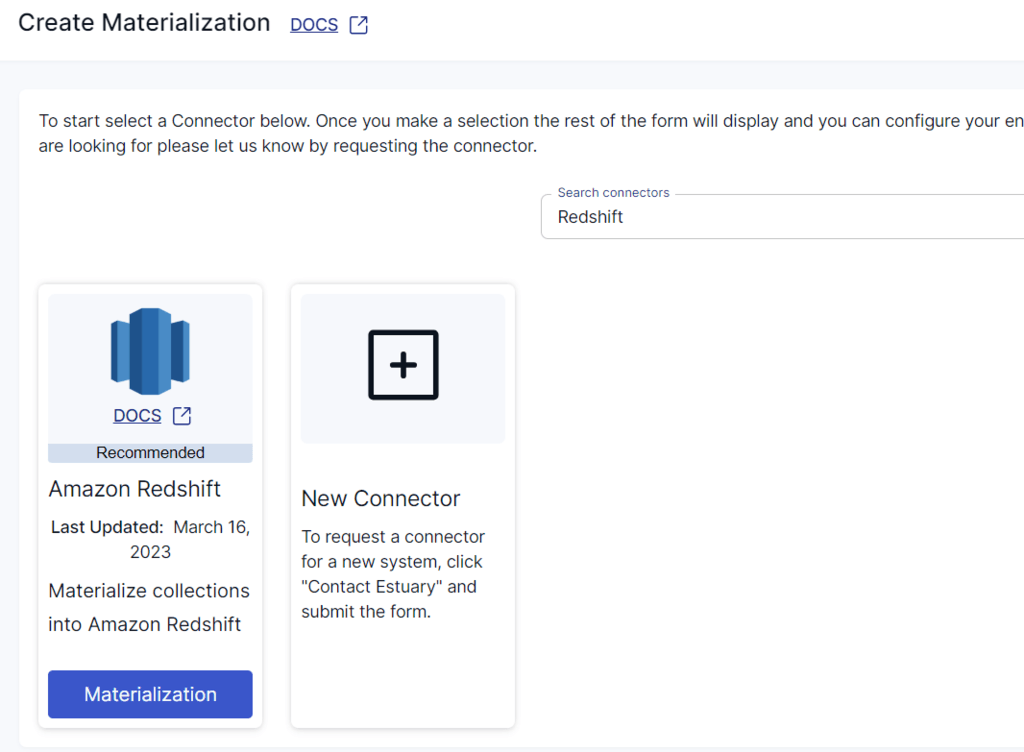

In the Search connectors box, enter Redshift. The search results will display the Amazon Redshift connector. Click the Materialization button to proceed.

Note: There are a few prerequisites you must meet to use the Redshift connector.

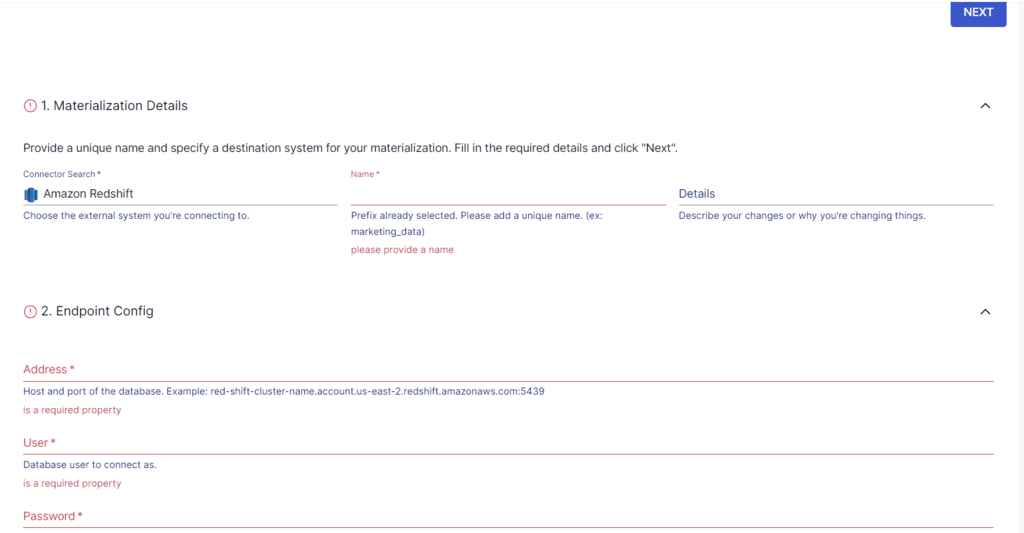

On the Redshift connector page, you’ll need to fill in some required details to configure the connector. These include a Name for the materialization, Address, User, and Password, among others.

If the Flow collections from your Kinesis capture aren’t selected automatically, use the Source Collections feature to add them manually. Click LINK CAPTURE to select a capture to link to your materialization; any collections added to that capture will automatically be added to your materialization.

After providing all the configuration details, click NEXT > SAVE AND PUBLISH. This will materialize the Flow collections of your Amazon Kinesis data into tables in an Amazon Redshift database.
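Once the materialization is running, you may want to confirm that rows are arriving. The sketch below is one way to check from outside Estuary, using the Amazon Redshift Data API through a boto3 `redshift-data` client, which runs SQL asynchronously and is polled for a final status. It assumes a provisioned cluster accessed with temporary credentials via `DbUser` (for Redshift Serverless you would pass `WorkgroupName` instead). The function name, cluster, database, user, and table names are hypothetical; the actual table name depends on how your materialization names its tables.

```python
import time
from typing import Any, Callable, Mapping, Protocol


class RedshiftDataClient(Protocol):
    """The boto3 Redshift Data API methods this sketch uses."""

    def execute_statement(self, **kwargs: Any) -> Mapping[str, Any]: ...
    def describe_statement(self, **kwargs: Any) -> Mapping[str, Any]: ...
    def get_statement_result(self, **kwargs: Any) -> Mapping[str, Any]: ...


def count_rows(client: RedshiftDataClient, cluster_id: str, database: str,
               db_user: str, table: str, poll_seconds: float = 1.0,
               sleep: Callable[[float], None] = time.sleep) -> int:
    """Run SELECT COUNT(*) on `table` via the Redshift Data API and return it."""
    # `table` is formatted straight into the SQL, so pass only trusted names.
    statement = client.execute_statement(
        ClusterIdentifier=cluster_id,
        Database=database,
        DbUser=db_user,
        Sql=f"SELECT COUNT(*) FROM {table}",
    )
    statement_id = statement["Id"]
    # The Data API is asynchronous: poll until the statement reaches a final state.
    while True:
        description = client.describe_statement(Id=statement_id)
        if description["Status"] == "FINISHED":
            break
        if description["Status"] in ("FAILED", "ABORTED"):
            raise RuntimeError(description.get("Error", "statement did not finish"))
        sleep(poll_seconds)
    result = client.get_statement_result(Id=statement_id)
    # COUNT(*) returns a BIGINT, which the Data API reports as longValue.
    return int(result["Records"][0][0]["longValue"])
```

Running it twice a minute or so apart, for example with `boto3.client("redshift-data")`, gives a quick signal that new Kinesis records are landing in Redshift.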

That’s it! After a few minutes of configuration and a few more of loading Redshift, you’re done.

If you’d like more information about configuring the connectors, check out the Estuary docs for the Amazon Kinesis capture connector and the Amazon Redshift materialization connector.

Benefits of Using Estuary Flow for Kinesis to Redshift Streaming

Flow is a suitable choice for streaming data from Kinesis to Redshift thanks to these key capabilities:

- Real-time data processing. One of Estuary Flow’s strengths is its ability to perform real-time data processing. This is beneficial if you require immediate insights from streaming data.

- Easy to set up data pipelines. Estuary’s intuitive interface and readily available connectors simplify the process of setting up and managing streaming data pipelines.

- Scalability. Estuary Flow pipelines can automatically scale to accommodate increasing data volumes. This helps ensure data integrity and consistent performance.

Ready to start streaming data with Estuary Flow?

If your business is looking to leverage real-time analytics and make swift, informed decisions, streaming data from Kinesis to Redshift is the way to go.

But you’ll have to figure out whether to use Kinesis Data Firehose or a SaaS alternative like Estuary Flow to complete the process.

Firehose has several drawbacks, including potential data loss and delayed data availability, that make it less suitable for real-time data processing.

On the other hand, Estuary Flow offers real-time data processing, impressive scalability, and easy-to-set-up data pipelines.

Consider using Flow to build real-time data pipelines to seamlessly migrate data between platforms. A wide range of readily available connectors will help simplify the task for you. Sign in or register for an Estuary account to get started today!

FAQs

Can Kinesis write to Redshift?

Yes. With the Amazon Redshift streaming ingestion feature, Redshift can ingest data directly from Amazon Kinesis Data Streams at high speed, without first staging it in Amazon S3.
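As a rough illustration of streaming ingestion, the hypothetical helper below just renders the two SQL statements the feature relies on: an external schema mapped to Kinesis and a materialized view over one stream. The schema, view, and stream names and the IAM role ARN are placeholders; the role must be associated with your cluster and allowed to read the stream. The column list follows the pattern in the AWS streaming ingestion documentation, so verify it against the current Redshift docs before running it.

```python
from typing import List


def streaming_ingestion_sql(schema: str, iam_role_arn: str,
                            stream: str, view: str) -> List[str]:
    """Render the DDL for Redshift streaming ingestion from a Kinesis stream."""
    create_schema = (
        f"CREATE EXTERNAL SCHEMA {schema}\n"
        f"FROM KINESIS\n"
        f"IAM_ROLE '{iam_role_arn}';"
    )
    # kinesis_data holds the raw record bytes; parse only rows that are valid JSON.
    create_view = (
        f"CREATE MATERIALIZED VIEW {view} AUTO REFRESH YES AS\n"
        f"SELECT approximate_arrival_timestamp,\n"
        f"       partition_key,\n"
        f"       shard_id,\n"
        f"       sequence_number,\n"
        f"       JSON_PARSE(kinesis_data) AS payload\n"
        f'FROM {schema}."{stream}"\n'
        f"WHERE CAN_JSON_PARSE(kinesis_data);"
    )
    return [create_schema, create_view]
```

You would run the rendered statements in the Redshift query editor or any SQL client. With `AUTO REFRESH YES`, Redshift refreshes the view periodically, and `REFRESH MATERIALIZED VIEW` pulls in new records on demand.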

What is the difference between Amazon Redshift and Kinesis?

Amazon Redshift is an AWS-hosted data warehouse platform designed to store massive volumes of data and perform complex queries and analyses on it. On the other hand, Kinesis is a real-time data streaming platform optimized for high-throughput data ingestion and low-latency processing.

Is Kinesis an ETL tool?

No, Amazon Kinesis is not an ETL tool. It is a platform designed for real-time data streaming and processing. Kinesis allows you to continuously collect, process, and analyze streaming data for real-time insights.
