Are you looking for a straightforward Jira to Snowflake integration process? Look no further; you've landed in the right place. Integrating Jira with Snowflake streamlines data consolidation, enhances collaboration, and facilitates data-driven decisions. This article guides you through two approaches to building your data pipeline: the first is a manual process, while the second automates data integration and replication in real time. But before we dive into the steps, let’s briefly look at what Jira and Snowflake are.

Introduction to Jira

Jira is a project management tool that software development teams use to plan, track, and organize their work efficiently. It lets teams create and manage tasks, monitor progress, set priorities, and collaborate in real time, which helps team members communicate, work together, and ship software quickly.

Jira’s flexible features and support for Agile methodologies make it a popular choice for streamlining an organization’s workflows and improving overall productivity. Teams can define custom workflows that represent their specific development process. They can also track issues, identify bottlenecks, and make data-driven decisions using Jira's built-in analytics and reporting features. This helps them stay organized and focused on delivering high-quality software products.

Introduction to Snowflake

Snowflake is a cloud-based data warehousing and analytics service that offers a fully managed platform for storing, processing, and analyzing data. It provides near real-time data ingestion, data integration, and querying at scale, so you can turn your data into actionable insights and informed decisions.

With Snowflake’s cloud-native architecture, you can efficiently handle diverse data types, including structured and semi-structured data. This allows you to seamlessly integrate and process various data formats.

Methods to Connect Jira to Snowflake

- Method 1: Manually Load Data from Jira to Snowflake

- Method 2: Using Estuary Flow for Jira to Snowflake Integration

Method 1: Manually Load Data from Jira to Snowflake

Manually loading data from Jira to Snowflake involves several steps. Jira offers built-in export functionality that lets you retrieve data from your Jira instance and save it in CSV format. Here’s a detailed guide on how to do it:

Prerequisites:

- Active Jira account

- Snowflake account

- Target table in Snowflake

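- Cloud storage account (optional), such as Amazon S3 or Google Cloud Storage, to stage CSV files for loading into Snowflake.

- SnowSQL, Snowflake's command-line client, if you plan to upload files from your local machine.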

Step 1: Export Data into CSV

Jira allows you to export users, groups, and a list of issues by choosing specific projects from your Jira instance. It can export data in CSV, XML, Excel, PDF, or Word format. CSV is a commonly used format for data interchange, as it is simple, lightweight, and widely supported. In this method, we will export issues from the Jira account into CSV files.

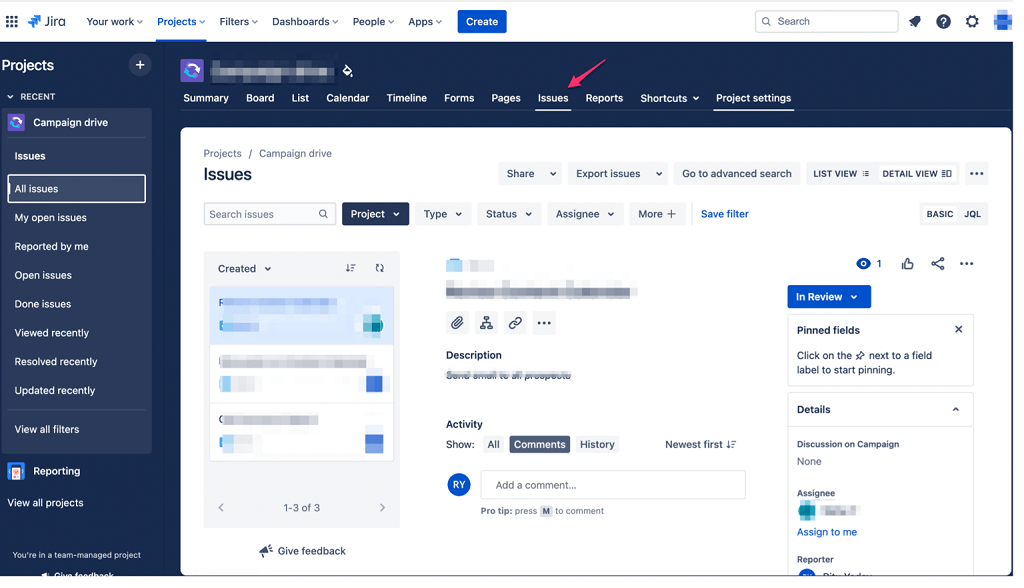

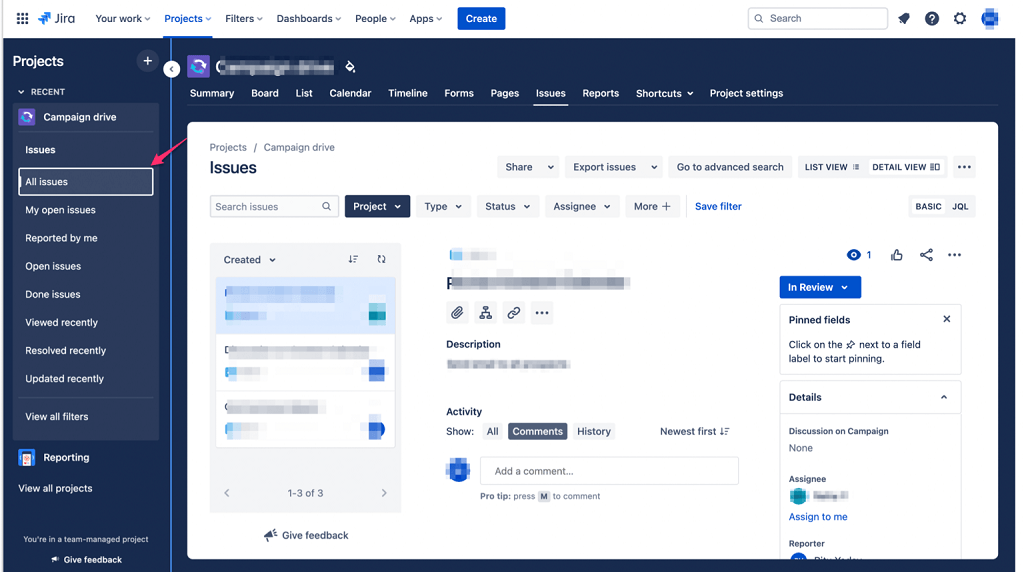

- Log into your Jira instance.

- Go to the project you want to export issues from and open Issues at the top of the page.

- Select the type of issues that you want to export to CSV, such as All issues, My open issues, or Done issues from the project. Here, we will proceed with All issues.

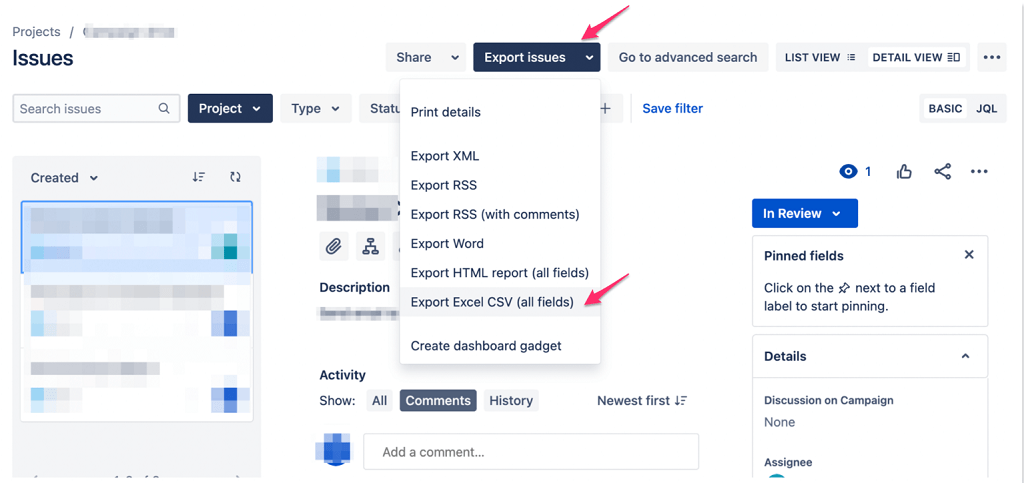

- Click on the Export issues button and choose the Export Excel CSV (all fields) option to export the data in CSV format. The exported CSV file(s) will be saved to your local machine.

- If you want to customize the columns and apply filters, navigate to Filters > View all filters > Create Filter. Search for and select the required issues in the search box, then click Export Excel CSV (my defaults). A CSV file containing your default fields is downloaded to your local machine.

Step 2: Upload CSV to the Internal or External Stage

Before you can load CSV files into a Snowflake table, you need to upload them to a Snowflake stage: a storage location that holds data files so Snowflake can load them. Stages are a core part of Snowflake's data loading process. There are two types of stages in Snowflake:

- Internal Stage: Storage managed by Snowflake that holds data files during the loading process. It is primarily used when loading files from your local machine.

Before uploading data to the internal stage, make sure the CSV data is cleaned, transformed, and matches the structure of the target Snowflake table.
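One way to do that cleanup is a short Python script. The sketch below is only an illustration, not part of Jira's or Snowflake's tooling: it keeps a chosen subset of columns from a Jira CSV export, renames them to snake_case names that suit a Snowflake table, and merges columns that share a header (Jira's export can write multi-value fields such as Labels as several columns with the same name) into one semicolon-separated value. The KEEP mapping and its column names are hypothetical; adjust them to your export and your target table.

```python
import csv
import io

# Hypothetical mapping from Jira export headers to target table column names.
# Adjust both sides to match your export and your Snowflake table.
KEEP = {
    "Issue key": "issue_key",
    "Summary": "summary",
    "Status": "status",
    "Labels": "labels",
}


def clean_jira_csv(text, keep=KEEP):
    """Return a CSV string containing only the columns listed in `keep`.

    Columns that share a header (e.g. several "Labels" columns) are merged
    into one semicolon-separated value, skipping empty cells.
    """
    reader = csv.reader(io.StringIO(text))
    header = next(reader, None)
    if header is None:
        raise ValueError("empty export")
    # Every column index at which each kept header appears.
    positions = {name: [i for i, h in enumerate(header) if h == name] for name in keep}
    missing = [name for name, idx in positions.items() if not idx]
    if missing:
        raise ValueError(f"columns not found in export: {missing}")

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(keep.values())  # header row with the new column names
    for row in reader:
        writer.writerow(
            ";".join(row[i] for i in positions[name] if i < len(row) and row[i])
            for name in keep
        )
    return out.getvalue()
```

For example, read the export with open("jira.csv", encoding="utf-8", newline="") as f: cleaned = clean_jira_csv(f.read()), then write cleaned to a new file and upload that file to the stage. Reading the whole file into memory keeps the code short and is usually fine for Jira exports; the output column order must match the column order of your target table.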

Let’s look at how to load a CSV file from your local machine into Snowflake’s internal stage.

- Start the SnowSQL CLI and connect with your Snowflake account details, for example: snowsql -a <account_identifier> -u <user_name>

- Create an internal stage with the following SnowSQL command:

```sql
CREATE STAGE internal_stage;
```

Replace internal_stage with a stage name of your choice.

- Use the PUT command to upload the CSV file from your local machine to the internal stage:

```sql
PUT file:///local_csv_file_path/jira.csv @internal_stage;
```

Replace /local_csv_file_path/jira.csv with the path and name of the CSV file on your local machine, and internal_stage with the name of the stage you just created. By default, PUT compresses the file with gzip, so it is stored in the stage as jira.csv.gz.

- Your CSV file is now uploaded to Snowflake’s internal stage. You can confirm this by running LIST @internal_stage;

- External Stage: Used for loading data from files stored in cloud storage. Snowflake supports external stages on Amazon S3, Google Cloud Storage, and Microsoft Azure Blob Storage.

This process assumes that your CSV files are already cleaned, transformed, and stored in Amazon S3. Here’s an overview of the steps to make those files available to Snowflake through an external stage.

- Create a storage integration in Snowflake so it can securely access your cloud storage provider. If you haven’t created one yet, use the following command (it requires the ACCOUNTADMIN role or a role with the CREATE INTEGRATION privilege):

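```sql
CREATE STORAGE INTEGRATION <storage_integration_name>
  TYPE = EXTERNAL_STAGE
  STORAGE_PROVIDER = 'S3'
  ENABLED = TRUE
  STORAGE_AWS_ROLE_ARN = '<iam_role>'
  STORAGE_ALLOWED_LOCATIONS = ('s3://<bucket>/<path>/', 's3://<bucket>/<path>/')
  -- Optional: explicitly block specific paths
  -- STORAGE_BLOCKED_LOCATIONS = ('s3://<bucket>/<path>/', 's3://<bucket>/<path>/')
;
```

Here, storage_integration_name is the name of the new integration, iam_role is the Amazon Resource Name (ARN) of the AWS IAM role that grants access to your bucket, and STORAGE_ALLOWED_LOCATIONS lists the S3 buckets and paths where your data files are stored. After creating the integration, run DESC INTEGRATION <storage_integration_name>; and add the STORAGE_AWS_IAM_USER_ARN and STORAGE_AWS_EXTERNAL_ID values it returns to the IAM role's trust policy so that Snowflake can assume the role.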

- Create an external stage that uses the integration with the following SnowSQL command:

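```sql
CREATE STAGE s3_external_stage_name
  STORAGE_INTEGRATION = <storage_integration_name>
  URL = 's3://s3_bucket_name/encrypted_files/'
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '"');
```

Replace the stage name, integration name, and URL with your own values; the URL must fall within the integration's STORAGE_ALLOWED_LOCATIONS. SKIP_HEADER = 1 skips the header row in Jira's CSV export, and FIELD_OPTIONALLY_ENCLOSED_BY = '"' lets Snowflake parse quoted values, such as summaries that contain commas.

Step 3: Load Data into Snowflake Tables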

Use the COPY INTO command to load data from an internal or external stage into the Snowflake table. Here’s the syntax for each case.

- Load data from an internal stage into a Snowflake table:

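```sql
COPY INTO snowflake_table_name
  FROM @internal_stage/jira.csv.gz
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '"');
```

Replace snowflake_table_name with the name of the Snowflake target table you want to load data into, and @internal_stage/jira.csv.gz with your stage name and the staged file name (PUT adds the .gz extension when it compresses the file). SKIP_HEADER = 1 skips the header row in Jira's export, and FIELD_OPTIONALLY_ENCLOSED_BY = '"' handles quoted values.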

- Load data from an external stage into a Snowflake table:

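```sql
COPY INTO snowflake_target_table
  FROM @s3_external_stage_name
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '"');
```

Replace snowflake_target_table with the name of the target table and s3_external_stage_name with the external stage you created. Because the stage authenticates through the storage integration, you don't need to put AWS keys in the command. COPY INTO can also read directly from an s3:// URL with a CREDENTIALS clause, but loading through the stage keeps secrets out of your SQL.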

That’s it! You’ve successfully loaded data from Jira to the Snowflake table!
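If you repeat the internal-stage route often, the same statements can be scripted. The sketch below assumes the snowflake-connector-python package, whose cursors can run PUT and COPY INTO like any other SQL statement. To stay testable without a Snowflake account, it accepts any object with an execute(sql) method instead of opening a connection itself. The jira_issues table definition and the default stage name are hypothetical placeholders.

```python
import os
from pathlib import Path

# Hypothetical target table; align the columns with your cleaned CSV.
CREATE_TABLE = (
    "CREATE TABLE IF NOT EXISTS {table} ("
    "issue_key STRING, summary STRING, status STRING, labels STRING)"
)

# CSV options for a Jira export: skip the header row and allow quoted
# fields, since summaries often contain commas.
CSV_FORMAT = "FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '\"')"


def load_jira_csv(cursor, csv_path, stage="internal_stage", table="jira_issues"):
    """Stage an uncompressed local .csv file with PUT, then COPY it into `table`.

    `cursor` can be any object with an execute(sql) method, for example a
    cursor from snowflake.connector.connect(...). Returns the statements run.
    """
    path = Path(os.path.abspath(csv_path))
    statements = [
        CREATE_TABLE.format(table=table),
        f"CREATE STAGE IF NOT EXISTS {stage}",
        # The file URI is quoted so paths with spaces work. PUT gzip-compresses
        # files by default, so jira.csv is staged as jira.csv.gz.
        f"PUT 'file://{path.as_posix()}' @{stage} OVERWRITE = TRUE",
        f"COPY INTO {table} FROM @{stage}/{path.name}.gz {CSV_FORMAT}",
    ]
    for sql in statements:
        cursor.execute(sql)
    return statements
```

With the connector installed, a call might look like conn = snowflake.connector.connect(account="<account_identifier>", user="<user>", password="<password>", warehouse="<warehouse>", database="<database>", schema="<schema>") followed by load_jira_csv(conn.cursor(), "jira.csv"). Building the statements in one place keeps the PUT path, the .gz file name, and the CSV options consistent between runs.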

Method 2: Using Estuary Flow for Jira to Snowflake Integration

Using CSV files is often a one-off approach to transferring data from Jira to Snowflake. It is a manual process that requires human intervention, which makes it unsuitable for automated or frequent data transfers. If the data isn't cleaned before loading, errors can slip in and cause loading failures. In addition, as data volume grows, the CSV approach becomes inefficient and time-consuming because every step depends on manual work.

To overcome these limitations, you can use a SaaS tool like Estuary Flow, a data integration and replication tool that transfers data in real time. Estuary offers a wide range of pre-built connectors that let you connect sources and destinations without writing extensive code.

Here are some main features and advantages of Estuary Flow:

- Low-Code UI and CLI: Flow provides a low-code user interface for simple workflows and a CLI for fine-grained control over pipelines. This allows seamless switching between the two options during pipeline development and refinement.

- Real-time Data: For sources that support it, Flow uses Change Data Capture (CDC) to capture changes as they happen and replicate them to your target system with low latency. This keeps the data available for analysis up to date and supports real-time decision-making.

- Automation: Flow automates both schema management and data deduplication, removing the need for additional scheduling or orchestration tools.
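The deduplication idea can be illustrated with a toy Python sketch. This is not how Flow is implemented; it only shows the general pattern of keyed upserts: each batch of changed Jira issues is merged into a target keyed by issue key, so a repeated or updated issue replaces its earlier version instead of adding a duplicate row, and a cursor tracks the latest updated timestamp so the next poll can ask only for newer changes. The record fields (key, status, updated) are assumptions for the example.

```python
from datetime import datetime


def apply_changes(target, changes, cursor=None):
    """Merge a batch of changed issues into `target`, keyed by issue key.

    Repeated records overwrite rather than duplicate, and an older version
    never replaces a newer one. Returns the updated cursor: the latest
    `updated` timestamp (a datetime) seen so far.
    """
    for issue in changes:
        current = target.get(issue["key"])
        # Upsert: insert new keys, replace existing ones only with newer data.
        if current is None or issue["updated"] >= current["updated"]:
            target[issue["key"]] = issue
        if cursor is None or issue["updated"] > cursor:
            cursor = issue["updated"]
    return cursor
```

Applying the same batch twice leaves the target unchanged, which is the property that lets a pipeline retry delivery without creating duplicate rows.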

With these two simple steps, you can quickly connect Jira to Snowflake using Estuary Flow. Before you begin, make sure you have met the prerequisites for both the Jira and Snowflake connectors.

Step 1: Connect Jira as Source

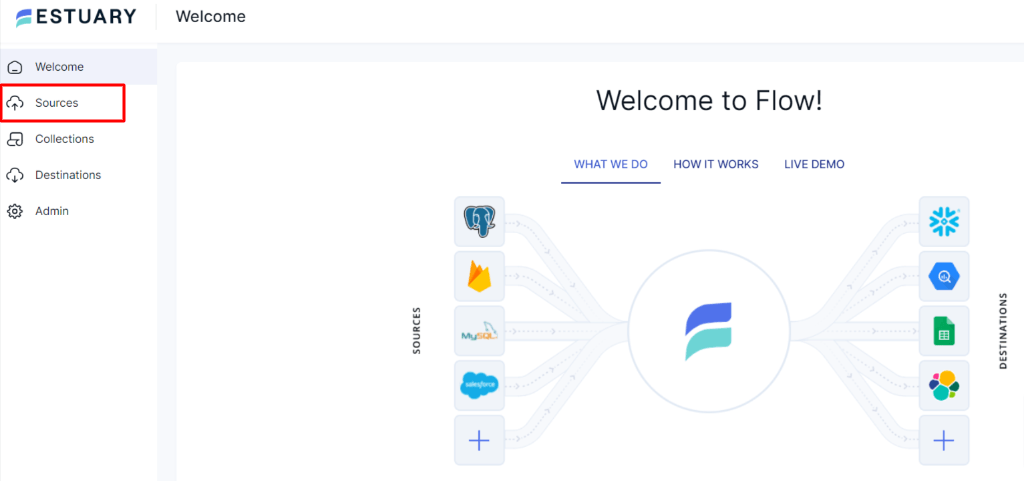

- Log in to your Estuary account.

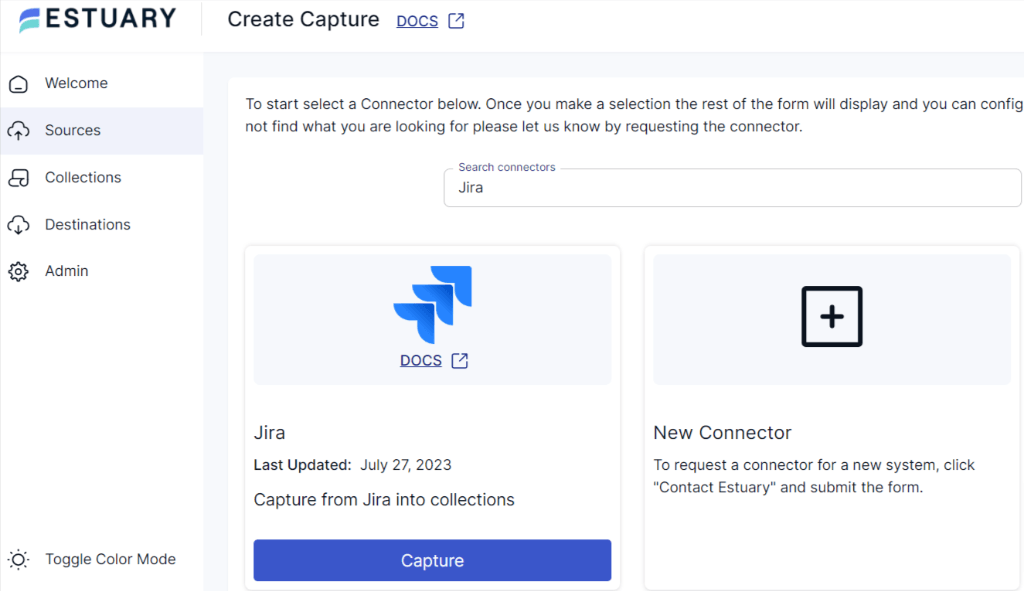

- On the Estuary dashboard, navigate to Sources to set up the source of your data pipeline.

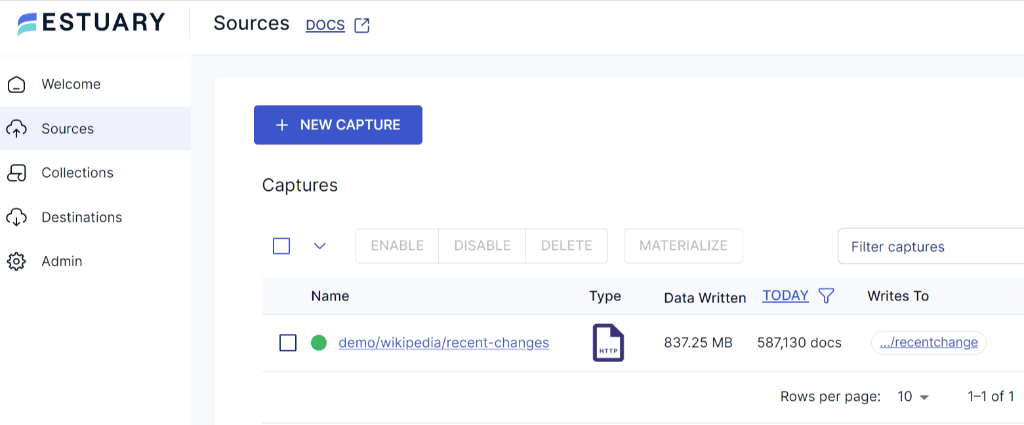

- Click on the + New Capture button on the Sources page.

- On the Create Capture page, search for the Jira connector in the Search Connectors box and click on the Capture button.

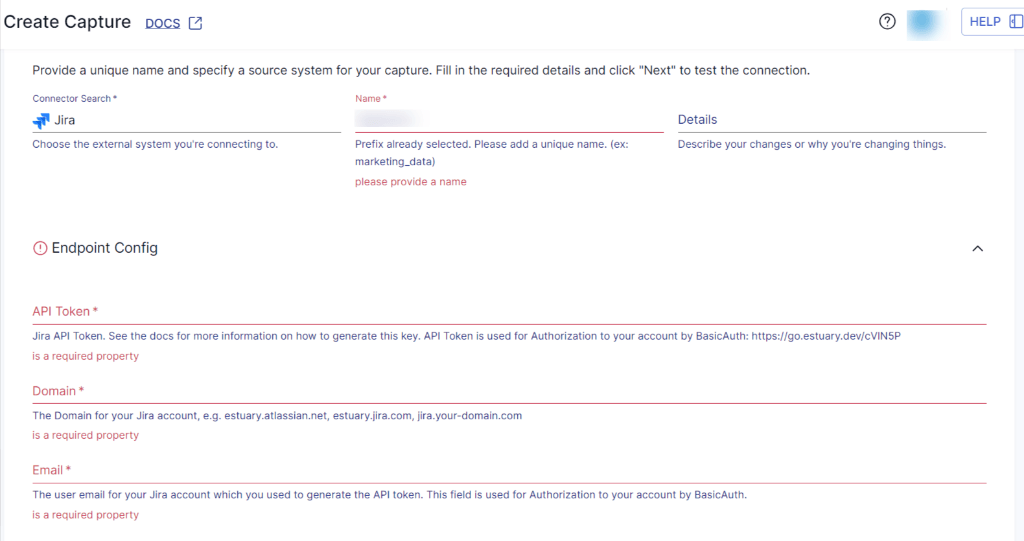

- You’ll be taken to the Jira connector page. Enter a unique name for the capture and fill in the Endpoint Config details, such as API Token, Domain, and Email. Click Next, then Save and Publish.
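Before saving the capture, it can help to confirm that the Domain, Email, and API Token values work together. A minimal sketch, assuming a Jira Cloud site: Jira Cloud's REST API accepts basic authentication with your account email and an API token, and GET /rest/api/3/myself returns the authenticated user. The function below only builds the request, so it can be checked offline. The helper name is hypothetical, and it accepts the domain either as a bare host (for example, your-team.atlassian.net) or with an https:// prefix.

```python
import base64
import urllib.request


def jira_auth_check_request(domain, email, api_token):
    """Build a GET /rest/api/3/myself request using Jira Cloud basic auth."""
    # Accept "your-team.atlassian.net" or "https://your-team.atlassian.net/".
    host = domain.strip().removeprefix("https://").removeprefix("http://").rstrip("/")
    # Basic auth: base64("email:api_token").
    token = base64.b64encode(f"{email}:{api_token}".encode()).decode("ascii")
    return urllib.request.Request(
        f"https://{host}/rest/api/3/myself",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )
```

To send it, call urllib.request.urlopen(req) and read the JSON response (it includes a displayName field). A 401 response, raised as urllib.error.HTTPError, usually means the email and token don't match.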

Step 2: Connect Snowflake as Destination

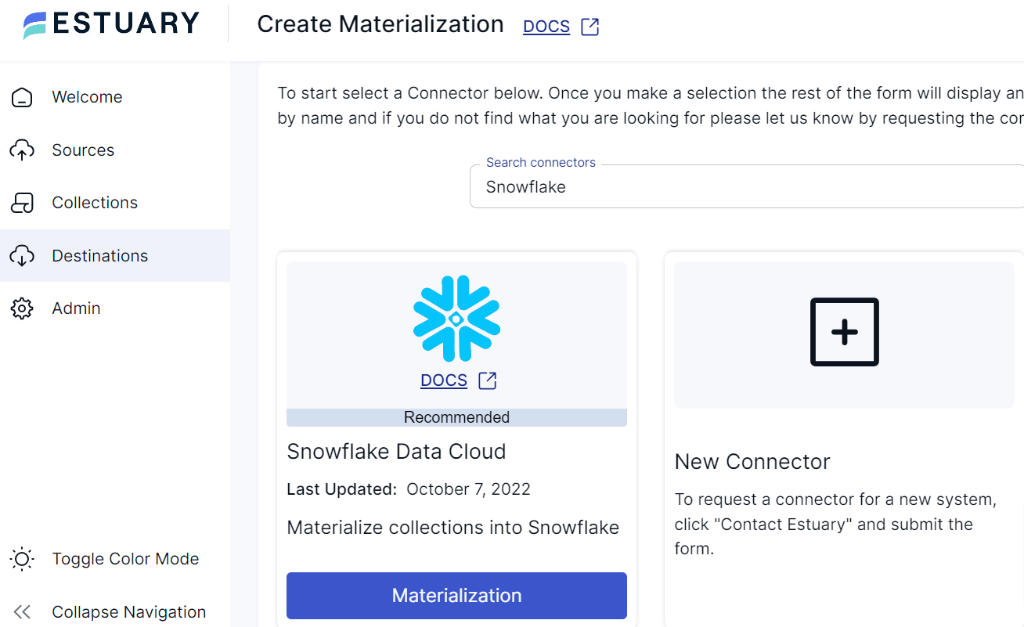

- To set up Snowflake as the destination, go to the Estuary dashboard, choose Destinations, and click + New Materialization.

- Search for Snowflake in the Search Connectors box and click the Materialization button. This takes you to the Snowflake materialization page.

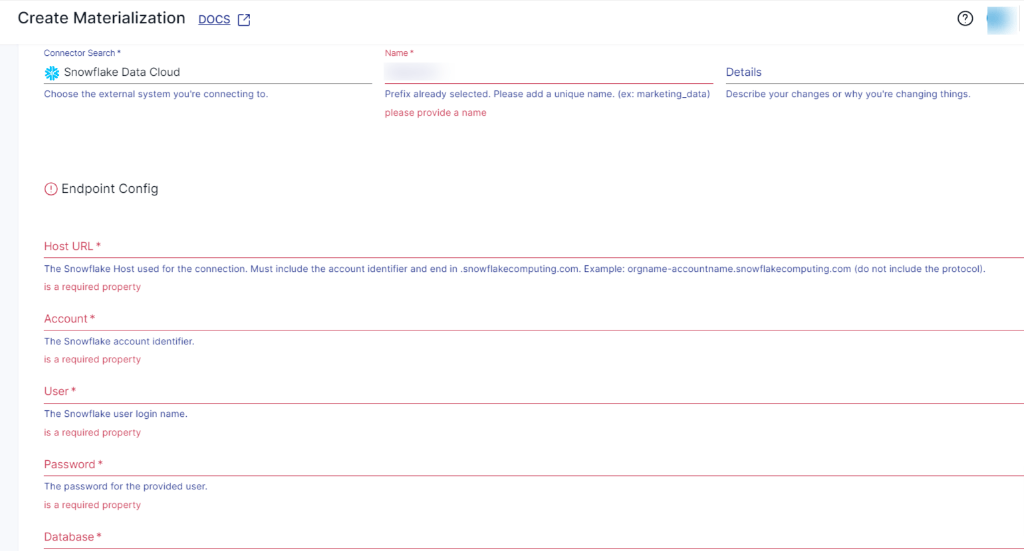

- Provide a unique name for your materialization. Fill in the Endpoint Config details, such as the Snowflake Account identifier, User login name, Password, Host URL, SQL Database, and Schema information.

- Finally, click Next > Save and Publish. With these two steps, your Jira to Snowflake integration in Estuary Flow is complete.
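The Account identifier and Host URL fields are related. A Snowflake account URL is typically the account identifier followed by .snowflakecomputing.com, for example myorg-myaccount.snowflakecomputing.com for the orgname-account_name format. The hypothetical helper below normalizes either form to a bare host, assuming the Host URL field expects the host without https:// (check the field's description in the connector form). It does not handle special cases such as PrivateLink hosts.

```python
SUFFIX = ".snowflakecomputing.com"


def snowflake_host(value):
    """Normalize an account identifier or account URL to a bare host name.

    Accepts e.g. "myorg-myaccount" or "https://myorg-myaccount.snowflakecomputing.com/".
    """
    host = value.strip().lower()  # host names are case-insensitive
    host = host.removeprefix("https://").removeprefix("http://").rstrip("/")
    host = host.removesuffix(SUFFIX)  # avoid doubling the domain suffix
    if not host or "/" in host:
        raise ValueError(f"unexpected account value: {value!r}")
    return host + SUFFIX
```

Normalizing both inputs the same way makes it easy to confirm that the identifier and host you paste into the form refer to the same account.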

For comprehensive instructions on setting up a complete data flow, refer to Estuary’s documentation.

Conclusion

You’ve learned two ways to move data from Jira to Snowflake. Both approaches are simple, but whichever you choose, assess your requirements and resources first. The first method loads data using CSV files and hand-written SQL commands. Although it can be time-consuming, it works well for small datasets and occasional updates. The second method integrates Jira and Snowflake with Estuary Flow without writing a single line of code, which makes it accessible to both technical and non-technical users. It also replicates data into your Snowflake tables in real time without any manual intervention.

Looking for an automated and reliable data pipeline tool to integrate Jira with Snowflake? Try Estuary Flow today; your first pipeline is free!
