With digital interactions escalating across diverse platforms, the ability to extract meaningful insights from various sources is crucial for businesses to stay competitive. Analyzing user behavior can provide you with actionable insights for making informed decisions. Google Analytics 4 is designed to capture and analyze user interactions across multiple channels. However, a Google Analytics 4 to Redshift integration can provide you with advanced data analytics.

Amazon Redshift, a highly scalable data warehouse, has impressive data analytics capabilities. It can efficiently process and analyze large volumes of data. By migrating data from Google Analytics to Redshift, you can harness the benefits of Redshift to uncover the true potential of your data. Let’s dive in to see how this works.

An Overview of Google Analytics 4

Google Analytics 4 (GA4), offered by Google Web’s analytics platform, is an analytics service that allows you to measure traffic and engagement across your websites and apps. It is a significant evolution from the previous version, Universal Analytics, and is designed to provide more valuable insights into user interactions across multiple digital platforms.

The focus of GA4 is on a user-centric data model. Rather than relying only on cookies, it utilizes an anonymous and unique user ID to track user interactions across devices, sessions, and platforms. This helps provide more accurate insights into user behavior. GA4 tracks user interactions across multiple platforms and devices, including mobile apps, websites, and other digital channels. This allows businesses to gain insights into the entire customer journey even when users switch between devices.

Among the many essential features of GA4 is its life cycle reporting, which allows you to analyze user behavior from acquisition to retention. The life cycle collection includes detailed reports on acquisition, engagement, monetization, and retention. This helps you understand how users engage with your content over time.

An Overview of Redshift

Amazon Redshift is a petabyte-scale data warehousing service provided by Amazon Web Services (AWS). This enterprise-class relational database query and management system is specifically designed for Online Analytical Processing (OLAP) workloads to support complex queries against large datasets. This makes it ideal for data warehousing, data analytics, and business intelligence.

One of the most impressive features of Redshift is its accelerated query performance, mainly due to:

- Columnar Storage: Unlike traditional database systems that store their data in rows, Redshift stores its data in a columnar format. This enhances query performance since querying involves efficient retrieval of only the specific columns needed for analysis.

- Massively Parallel Processing (MPP): Redshift employs an MPP architecture, where data is distributed across multiple nodes in a cluster. Each node has a separate CPU, memory, and storage, allowing for parallel execution of queries and processing of tasks. This significantly improves query performance.

- Data Compression: With the use of specialized data compression encodings, Redshift optimizes memory storage and disk I/O. This also helps boost the overall efficiency of query processing.

How to Move Data from Google Analytics to Redshift

To integrate Google Analytics to Redshift, you can use one of the following two methods:

- The Automated Method: Using no-code data integration tools like Estuary Flow

- The Manual Method: Using API and custom scripts

The Automated Method: Using No-Code Tools like Estuary to Connect Google Analytics to Amazon Redshift

Data integration tools are designed to automate data migration processes and significantly reduce the time required to set up integration pipelines. Such tools often come with pre-built connectors for popular data sources and destinations to simplify the process of setting up the data transfer. Estuary Flow, for example, is a reliable solution to migrate data from Google Analytics to data warehouses like Amazon Redshift.

Estuary Flow is an advanced DataOps platform that can handle real-time data operations through managed CDC and ETL pipelines. It automates the process of extracting data from the source, performing complex real-time transformations and aggregations, and loading the data into the desired destination. With its rapid streaming data capabilities capabilities, the end-to-end latency is in the millisecond range.

To begin setting up a Google Analytics to Redshift data integration pipeline with Flow, you must register for a free account. If you already have one, sign in to your account and follow these simple steps:

Step 1: Configure Google Analytics 4 as the Data Source

First, there are some prerequisites you need to fulfill for using this connector:

- A Google Analytics 4 property ID.

- The Google Analytics Data API enabled on your Google project with which your Analytics project is associated.

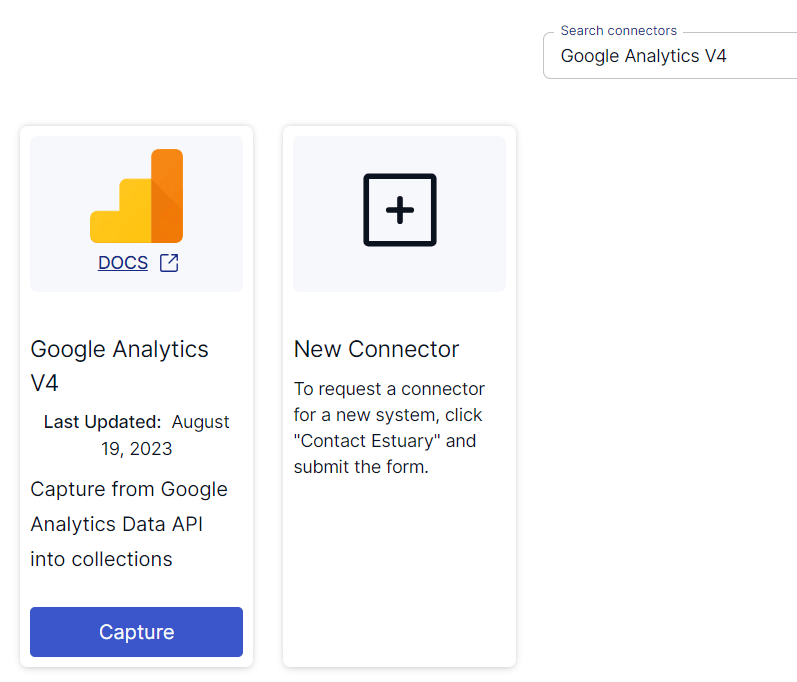

Upon signing in, you will be redirected to the Estuary Flow dashboard. Click on Sources → + NEW CAPTURE. Search for Google Analytics using the Search connectors box and click on the Capture button of the Google Analytics V4 connector in the search results.

Image Source: Estuary

On the Google Analytics 4 connector page, specify the required details, such as a Name for the capture, Property ID, and Start Date. For Credentials, you can authenticate via Google (OAuth) or use a service account key authentication. After providing the necessary information, click on NEXT → SAVE AND PUBLISH.

The connector will use the Google Analytics Data API to capture data from GA4 properties into Flow collections. Here’s a list of the data resources this connector supports:

- Devices

- Locations

- Pages

- Traffic sources

- Website overview

- Daily active users

- Weekly active users

- Four-weekly active users

Step 2: Configure Amazon Redshift as the Data Destination

Before you use the Redshift connector, you must ensure you fulfill the prerequisites.

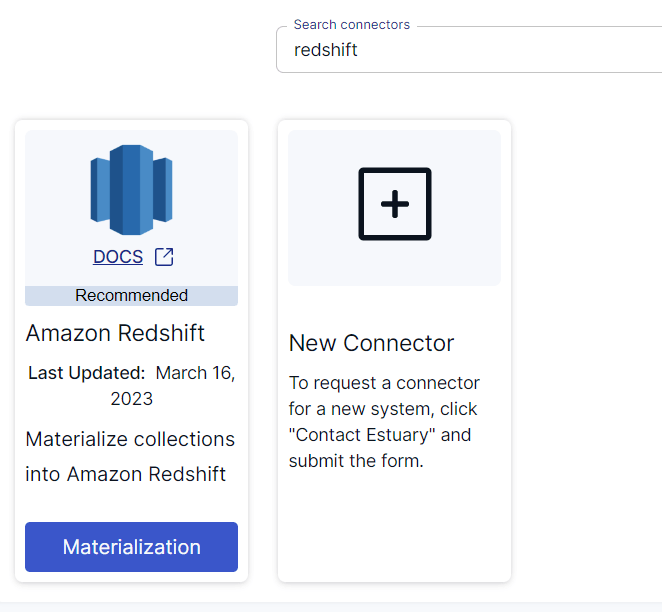

After a successful capture, you will see a pop-up with the details of the capture. Click on the MATERIALIZE COLLECTIONS button on this pop-up to proceed with setting up the destination end of the pipeline. You will be redirected to the Create Materialization page, where you can search for Redshift using the Search connectors box. When you locate the Amazon Redshift connector in the search results, click on its Materialization option.

Image Source: Estuary

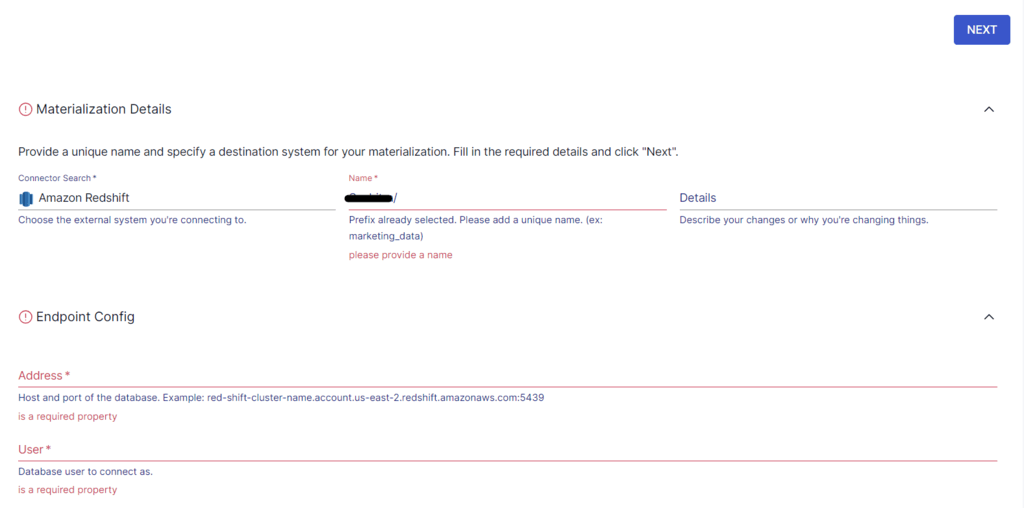

On the Amazon Redshift connector page, specify the required details, including a Name for the materialization, Address, S3 bucket details, User, and Password. If the data captured from Google Analytics 4 wasn’t filled in automatically, use the Source Collections feature to manually add the collections. Then, click on NEXT → SAVE AND PUBLISH.

Image Source: Estuary

This will materialize the Flow collections of your GA4 data into tables in an Amazon Redshift database. The connector uses your AWS account to materialize into Redshift tables by using an S3 bucket as a temporary staging area.

For more details about setting up the data migration pipeline, refer to the Estuary documentation:

The Manual Method: Using API and Custom Scripts to Load Data from Google Analytics 4 to Redshift

You can use the Google Analytics Reporting API to export report data from Google Analytics.

Before you start with this method, ensure the following prerequisites are fulfilled:

- A GA4 account with access to the data you want to ingest.

- An AWS account with access to S3 and Redshift.

- Python 3.6 or higher installed on your local machine.\

Here are the steps you need to follow:

Step 1: Set up Google Analytics API

- Go to the Google API Console.

- Click on the project dropdown menu → NEW PROJECT.

- Specify a project name and click on CREATE.

- You will be redirected back to the dashboard, where you must click on + ENABLE APIS AND SERVICES.

- Use the search bar to look for Google Analytics Reporting API. When you see it in the search results, click on it.

- Click on ENABLE to enable the Google Analytics Reporting API for the project.

- On the left-side pane, click on Credentials → + CREATE CREDENTIALS → OAuth client ID.

- Select Desktop app as the application type, enter a name for the client, and click on Create.

- A client secret JSON file will be created that you can download and save to your local machine.

Step 2: Ingest Data into Amazon S3

- Sign in to the AWS Management Console and navigate to the S3 Console.

- From the left navigation pane, select Buckets → Create bucket.

- Enter a unique name for your bucket, choose a region, and configure any additional settings as required. Then, click on Create bucket.

- In the AWS IAM console, configure your AWS credentials by setting the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables.

- Use the following Python script to ingest data from Google Analytics to your S3 bucket:

pythonimport os

import json

import boto3

from google.oauth2.credentials import Credentials

from google_auth_oauthlib.flow import InstalledAppFlow

from googleapiclient.discovery import build

# Set up Google Analytics API

client_secrets_path = 'path/to/client_secret.json'

scopes = ['https://www.googleapis.com/auth/analytics.readonly']

flow = InstalledAppFlow.from_client_secrets_file(client_secrets_path, scopes)

credentials = flow.run_local_server(port=0)

analytics = build('analyticsreporting', 'v4', credentials=credentials)

# Replace with your Google Analytics View ID

view_id = 'YOUR_VIEW_ID'

# Define the Google Analytics query

query = {

'viewId': view_id,

'dateRanges': [{'startDate': '30daysAgo', 'endDate': 'today'}],

'metrics': [{'expression': 'ga:sessions'}],

'dimensions': [{'name': 'ga:date'}]

}

# Execute the query

response = analytics.reports().batchGet(body={'reportRequests': [query]}).execute()

# Save the data as a JSON file

data = response['reports'][0]['data']['rows']

with open('google_analytics_data.json', 'w') as f:

json.dump(data, f)

# Upload the JSON file to Amazon S3

s3 = boto3.client('s3')

bucket_name = 'your-s3-bucket-name'

s3.upload_file('google_analytics_data.json', bucket_name, 'google_analytics_data.json')

This will fetch the number of sessions of the past 30 days from your GA4 account and upload the data as a JSON file to your S3 bucket.Step 3: Ingest Data into Amazon Redshift

Ensure you have a Redshift cluster and a table in the cluster to store the GA4 data. Then, use the Redshift COPY command to load the data from the S3 bucket to a Redshift table.

plaintextcopy table from 's3://<your-bucket-name>/load/key_prefix'

credentials 'aws_iam_role=arn:aws:iam::<aws-account-id>:role/<role-name>'

options;Use the name of your S3 bucket that is in the same region as your Redshift cluster. AWS account and IAM role details are also required to run the COPY command.

This will successfully migrate your GA4 data, through an S3 bucket, to a Redshift table.

Conclusion

Integrating Google Analytics to Redshift helps businesses unlock meaningful insights and offers greater control over the data analytics process. Combining GA’s user-centric model, cross-platform insights, and life cycle reporting with Redshift’s scalability and columnar storage helps boost effective decision-making.

The two popular methods to migrate Google Analytics 4 to Redshift are by using API with custom coding and using SaaS alternatives. For an easy-to-use, real-time data migration solution, SaaS alternatives like Estuary Flow make an excellent choice. The range of built-in connectors, an intuitive interface, and automation capabilities simplify the process of integrating GA4 and Redshift.

Estuary Flow seamlessly migrates data between supported platforms in real time. All it takes is a few minutes to set up the data integration pipeline. Register for your free account and get started with Flow today!

Author

Popular Articles