When it comes to managing and analyzing big data sets, data mapping is one of the first things that comes to mind. The concept is simple: create links between different data sets to streamline data processes and derive value. But because data mapping is central to data integration and transformation, its intricacies can be overwhelming and hard to master.

So, how relevant is data mapping in the world today? In one word, inarguable. Here’s why.

Right now, the big data and analytics market stands at roughly $274 billion. While the numbers are impressive, realizing the full potential of data mapping requires an in-depth understanding of its types, tools, and techniques.

If you understand how valuable data mapping is to the future of big data, our guide is just what you need. We will take you through a step-by-step understanding of what data mapping is and how it operates via different tools along with the techniques for data mapping that promise efficient outcomes.

What is Data Mapping?

Data mapping is the process of matching and connecting data fields from different datasets into a centralized database or schema. With it, you can seamlessly transfer, ingest, process, and manage data. The ultimate goal of data maps is to merge multiple datasets into a single, cohesive entity that can be used effectively.

In the business world, data mapping is a common practice. As data and the systems that use it have grown in complexity, the data mapping process has become more intricate. Keeping it accurate and efficient now calls for advanced technologies and automation.

Mapping data fields reduces the potential for errors and promotes data standardization. It provides a clear visualization of how different data points are connected, similar to how a map helps us navigate from one place to another. Errors in data mapping can have serious consequences for critical data management initiatives.

Data mapping lets you understand the movement and transformation of data. It is an important step in the end-to-end data integration process where data from various sources is consolidated into a single destination. In today's business world, where data is becoming more abundant and complicated, smart and automated solutions are needed for successful data mapping.

Here’s why data mapping is important for your business:

- Data mapping minimizes errors in data-rich organizations.

- It helps standardize data across various platforms and systems.

- It simplifies the data integration process and makes it more comprehensible.

- Intelligent, automated data mapping solutions are best suited to handling the complexity and volume of today’s data.

- Data mapping provides a visual representation of data movement and transformations which improves the understanding and interpretation of data.

That covers the definition, but what does data mapping look like in practice? How does it work, what role does it play in ETL (Extract, Transform, Load) processes, and which tools and techniques are worth knowing? Read on for the practical details.

A Closer Look At How Data Mapping Works

Data mapping begins with a clear understanding of the data. It uses an instruction set to identify data sources, targets, and the relationship between them. Data mapping simplifies the integration of data into a workflow or data warehouse and effectively manages and transfers data between cloud and on-premises applications.
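One way to picture such an instruction set is as a small declarative structure that names the source, the target, and the field relationships between them. The sketch below is illustrative only: `DataMap`, `FieldMapping`, and the source and target names are hypothetical and do not come from any particular tool.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class FieldMapping:
    source_field: str
    target_field: str


@dataclass(frozen=True)
class DataMap:
    source: str  # hypothetical source identifier
    target: str  # hypothetical target identifier
    fields: Tuple[FieldMapping, ...]

    def apply(self, record: dict) -> dict:
        # Copy only the mapped fields, renaming each to its target name.
        return {m.target_field: record[m.source_field] for m in self.fields}


crm_to_warehouse = DataMap(
    source="crm.contacts",
    target="warehouse.customers",
    fields=(
        FieldMapping("name", "customer_name"),
        FieldMapping("email", "email_address"),
    ),
)

row = crm_to_warehouse.apply(
    {"name": "Ada Lovelace", "email": "ada@example.com", "notes": "vip"}
)
```

Fields that are not listed in the map, such as `notes` here, are simply left behind, which makes the map itself the single description of what moves where.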

For a better understanding of the process, let’s take a look at its key elements.

6 Key Elements That Power Data Mapping Success

Data mapping has a range of components that act as either sources or targets. These are arranged in sequence or in parallel to create a comprehensive data map.

Data Sources

These are the applications or services from which data is moved. The first step in managing data sources is to make sure that you have the necessary configurations for data access.

Data Targets

Any application, service, or process that acts as a destination for the data falls under this category. For data to flow successfully, properly align the data source type, target type, source object, and target object fields.

Data Transformations

Transformation tasks are included in the mappings and the data is processed in the order defined in the workflow. Multiple data transformations can be applied to a single mapping, including joiner, filter, lookup, router, data masking, and expression transformations.
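As a rough sketch of transformations running in a defined order, the example below chains a filter, a lookup, and a data masking step over plain Python dictionaries. The field names, the lookup table, and the masking rule are assumptions made for illustration; real mapping tools expose these as configurable components rather than hand-written functions.

```python
def filter_active(rows):
    # Filter transformation: keep only active records.
    return [r for r in rows if r["status"] == "active"]


COUNTRY_LOOKUP = {"US": "United States", "DE": "Germany"}  # hypothetical reference data


def lookup_country(rows):
    # Lookup transformation: enrich each record from a reference table.
    return [
        {**r, "country": COUNTRY_LOOKUP.get(r["country_code"], "Unknown")}
        for r in rows
    ]


def mask_email(rows):
    # Data masking transformation: hide all but the first character of the local part.
    masked = []
    for r in rows:
        local, _, domain = r["email"].partition("@")
        masked.append({**r, "email": local[:1] + "***@" + domain})
    return masked


PIPELINE = [filter_active, lookup_country, mask_email]


def run_pipeline(rows, steps=PIPELINE):
    # Apply each transformation in the order the workflow defines.
    for step in steps:
        rows = step(rows)
    return rows


output = run_pipeline([
    {"status": "active", "country_code": "DE", "email": "greta@example.com"},
    {"status": "inactive", "country_code": "US", "email": "sam@example.com"},
])
```

Because the order is just a list, reordering or removing a step is a one-line change, which mirrors how a workflow definition controls processing order.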

Mapplets

A mapplet is a reusable component comprising several transformation rules.
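The idea of bundling several rules into one reusable unit can be sketched with simple function composition. This is only an analogy in plain Python, with a hypothetical `compose` helper; it is not how any specific tool implements mapplets.

```python
from functools import reduce


def compose(*rules):
    # Bundle several transformation rules into one reusable callable.
    def mapplet(value):
        return reduce(lambda acc, rule: rule(acc), rules, value)
    return mapplet


# A reusable "clean text" mapplet: trim whitespace, then lowercase.
clean_text = compose(str.strip, str.lower)

records = [{"email": "  Ada@Example.COM ", "city": " London "}]
cleaned = [{key: clean_text(value) for key, value in r.items()} for r in records]
```

The same `clean_text` unit can then be reused across many mappings instead of repeating the individual rules in each one.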

Data Mapping Parameters & Variables

These are named values used by a transformation or mapping. They can be set manually or automatically, and they supply values each time a mapping is run.

User-Defined Functions

These are custom functions applied to mappings, such as one that triggers an email alert.

Understanding The Data Mapping Process

The data mapping process is a systematic series of steps for accurate and efficient data migration from source to destination. Here's its simplified workflow:

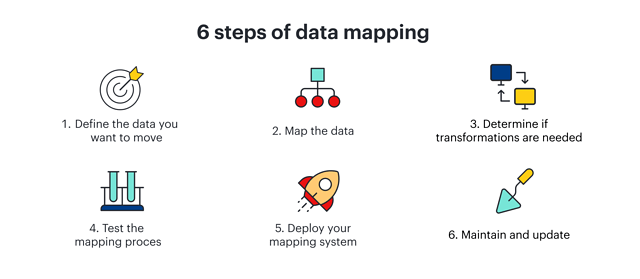

Define The Data

Start by outlining the data you plan to transfer. This includes specifying the tables, fields, and the data format. Determine the table and field formats in the target system and the data mapping frequency if data integrations are part of your plan.

Conduct Data Mapping

In this step, you associate the tables and fields in the source data with the ones in the destination. This mapping ensures that data ends up in the correct place in the target system.

Ascertain If Transformations Are Required

Sometimes, data needs to be transformed to fit the target system's format. If so, define the rule or formula for each transformation.
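A transformation rule can be as small as a function attached to a field. The sketch below assumes a source that stores dates as `MM/DD/YYYY` strings and balances as integer cents, while the target expects ISO dates and dollar amounts; all field names are hypothetical.

```python
from datetime import datetime


def to_iso_date(value: str) -> str:
    # Convert 'MM/DD/YYYY' to ISO 8601 'YYYY-MM-DD'.
    return datetime.strptime(value, "%m/%d/%Y").date().isoformat()


def cents_to_dollars(value: int) -> float:
    # Convert integer cents to a dollar amount with two decimals.
    return round(value / 100, 2)


# target field -> (source field, transformation rule)
RULES = {
    "signup_date": ("SignupDate", to_iso_date),
    "balance_usd": ("BalanceCents", cents_to_dollars),
}


def transform(record: dict) -> dict:
    return {target: rule(record[source]) for target, (source, rule) in RULES.items()}


result = transform({"SignupDate": "03/14/2023", "BalanceCents": 1999})
# result == {"signup_date": "2023-03-14", "balance_usd": 19.99}
```

Keeping the rules in one table makes it easy to review which fields are converted and how before any data is moved.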

Testing Phase

Test your mapping process using a small sample of data. This gives you an idea of how well your mapping works. Make necessary adjustments and retest if issues arise.
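A sample test can be a short loop that runs the mapping over a handful of records and collects anything that fails. The sketch below is one possible shape for such a check; `map_record`, `check_sample`, and the field names are hypothetical.

```python
def map_record(record):
    # Hypothetical mapping under test: cast the ID and normalize the email.
    return {
        "customer_id": int(record["CustomerID"]),
        "email": record["Email"].strip().lower(),
    }


def check_sample(sample, mapper, required=("customer_id", "email")):
    # Run the mapper over a small sample and collect (index, reason) failures.
    failures = []
    for index, record in enumerate(sample):
        try:
            mapped = mapper(record)
        except (KeyError, ValueError) as exc:
            failures.append((index, f"{type(exc).__name__}: {exc}"))
            continue
        missing = [f for f in required if mapped.get(f) in (None, "")]
        if missing:
            failures.append((index, f"empty fields: {missing}"))
    return failures


sample = [
    {"CustomerID": "101", "Email": " Ada@Example.com "},
    {"CustomerID": "abc", "Email": "bob@example.com"},  # ID is not numeric
    {"CustomerID": "103", "Email": "  "},               # email is blank
]
failures = check_sample(sample, map_record)
```

Here the second and third records are reported, which is the signal to adjust the mapping or clean the source data and then retest.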

Deploy The Mapping System

Once you're satisfied with the testing phase and are confident in the mapping process's efficacy, it's time to deploy your data mapping system.

Maintenance and Updates

The data mapping process requires ongoing maintenance and updates to accommodate changes in data sources and the addition of new ones. This ensures that the system remains accurate and efficient over time.

5 Different Types Of Data Mapping For Effective Data Integration & Analysis

Data mapping is a critical process in data management and has different types. Each type serves a unique purpose and is used in different scenarios. Understanding these types will help you choose the right approach for your data integration project.

Let's look at the common types of data mapping and explore their use cases.

Direct Data Mapping

Direct mapping, or one-to-one mapping, is the simplest form of data mapping. Here, each field in the source system corresponds directly to a single field in the target system.

Example

Transferring customer data from one CRM system to another. The 'Customer Name' field in the source system maps directly to the 'Customer Name' field in the target system.

Transformational Data Mapping

Transformational mapping changes the data from the source system before it is loaded into the target system. The changes can include calculations, concatenations, or other operations.

Example

Converting a 'Full Name' field in the source system into 'First Name' and 'Last Name' fields in the target system.
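A minimal sketch of that split is shown below. It assumes the first whitespace-separated token is the first name and everything after it is the last name, which is a simplification that will not hold for every naming convention.

```python
def split_full_name(full_name: str) -> dict:
    # Naive rule: first token is the first name, the rest is the last name.
    parts = full_name.strip().split()
    if not parts:
        return {"first_name": "", "last_name": ""}
    return {"first_name": parts[0], "last_name": " ".join(parts[1:])}


simple = split_full_name("Ada Lovelace")
compound = split_full_name("Ludwig van Beethoven")
# simple   == {"first_name": "Ada", "last_name": "Lovelace"}
# compound == {"first_name": "Ludwig", "last_name": "van Beethoven"}
```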

Complex Data Mapping

This type involves multiple transformations and several source and target systems. It's used when data from multiple systems needs to be consolidated or when the target system requires a different data structure.

Example

Consolidating customer data from multiple systems (CRM, billing, support) into a single data warehouse.
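To make the consolidation concrete, the sketch below merges records from three in-memory sources that each use a different key name and field layout. The sample data, key names, and target field names are all invented for illustration.

```python
crm = [
    {"customer_id": 1, "name": "Ada Lovelace"},
    {"customer_id": 2, "name": "Alan Turing"},
]
billing = [{"cust": 1, "plan": "pro"}]
support = [
    {"customerId": 1, "open_tickets": 2},
    {"customerId": 2, "open_tickets": 0},
]

# (rows, key field in that source, source field -> warehouse field)
SOURCES = [
    (crm, "customer_id", {"name": "customer_name"}),
    (billing, "cust", {"plan": "billing_plan"}),
    (support, "customerId", {"open_tickets": "open_tickets"}),
]


def consolidate(sources):
    # Build one warehouse record per customer, keyed by the shared customer ID.
    warehouse = {}
    for rows, key_field, field_map in sources:
        for row in rows:
            key = row[key_field]
            merged = warehouse.setdefault(key, {"customer_id": key})
            for source_field, target_field in field_map.items():
                merged[target_field] = row[source_field]
    return warehouse


warehouse = consolidate(SOURCES)
```

Customer 2 has no billing record, so the consolidated row simply lacks `billing_plan`; a real pipeline would need an explicit policy for such gaps.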

Schema Data Mapping

Schema mapping is used when integrating databases. It involves mapping one database schema to another and is often used during database migration or integration projects.

Example

Migrating data from an old customer database to a new one. The 'CustomerID' field in the old database might map to a 'CustomerNumber' field in the new one.
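A schema map for a migration like this can pair each old column with its new name and a type cast. In the sketch below, only `CustomerID` and `CustomerNumber` come from the example above; the other columns and the cast rules are assumptions.

```python
# old column -> (new column, cast applied during migration)
SCHEMA_MAP = {
    "CustomerID": ("CustomerNumber", int),
    "FullName": ("Name", str),
    "IsActive": ("Active", lambda value: value == "Y"),
}


def migrate_row(old_row: dict) -> dict:
    # Rename each column and cast its value; missing columns become None.
    new_row = {}
    for old_col, (new_col, cast) in SCHEMA_MAP.items():
        value = old_row.get(old_col)
        new_row[new_col] = None if value is None else cast(value)
    return new_row


migrated = migrate_row({"CustomerID": "42", "FullName": "Ada Lovelace", "IsActive": "Y"})
# migrated == {"CustomerNumber": 42, "Name": "Ada Lovelace", "Active": True}
```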

Semantic Data Mapping

Semantic mapping is used when integrating systems that have different data models or when the data is to be mapped from one semantic context to another. This type is all about understanding the data’s meaning in the source and target systems.

Example

Integrating a medical records system with a billing system. The 'PatientID' in the medical records system might map to a 'BillingAccountNumber' in the billing system.

7 Best Data Mapping Tools For A Streamlined Workflow

Here are 7 leading data mapping tools that simplify data visualization and interpretation and let your teams work efficiently regardless of technical prowess.

Estuary Flow

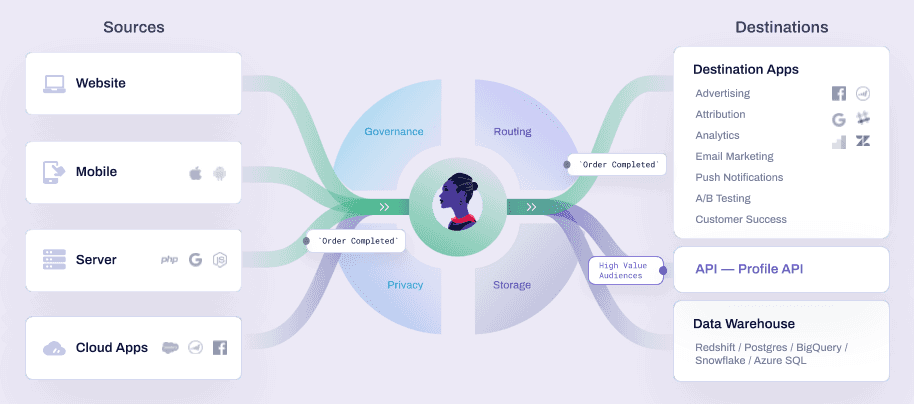

Estuary Flow, a sophisticated DataOps tool, stands out in data mapping with its strong emphasis on real-time ETL processes. This makes it a perfect fit for data mapping tasks in fast-paced settings.

With the ability to capture data from a wide range of sources, including databases, SaaS applications, and cloud storage, Flow transforms this data into a unified data model which is critical for data mapping.

One of Flow’s most useful features is its automated schema management. It automatically infers and manages data schemas to effectively transform unstructured data into a structured format. This simplifies the data mapping process, especially when dealing with complex or unstructured data sources.

Flow is a real-time ETL tool capable of capturing data from various sources. It uses streaming SQL and JavaScript for data transformations. Estuary's fault-tolerant architecture provides reliable and accurate data mapping. It uses low-impact Change Data Capture (CDC) to guarantee minimal load on system resources.

With its capacity to handle large data volumes, up to 7GB/s, and support for real-time database replication, Estuary Flow demonstrates scalability in data mapping, making it suitable for operations ranging from small datasets to those at a terabyte scale.

Talend Open Studio

Talend Open Studio goes beyond basic data mapping. This tool, with its graphical user interface, lets you map data visually from source to destination. It supports over 100 connectors for various data sources, provides continuous integration, and reduces repository management and deployment cost.

Talend allows custom code in Java. It supports dynamic schemas for processing records without prior knowledge of columns and types. With its powerful GUI-driven Master Data Management (MDM) system, you get a unified view of enterprise data.

However, it has limited scheduling and streaming features and is better suited to Big Data applications than to ETL.

CloverETL (CloverDX)

CloverETL (or CloverDX) is a Java-built open-source tool for data mapping and integration. It is flexible in how it can be used: as a standalone application, a server application, a command-line tool, or embedded within other applications.

CloverETL has both visual and coding interfaces for mapping and data transformation. It offers high-speed data transformation with data parallelism and can expose data services as web services.

Despite these advantages, CloverETL has been criticized for its lack of proper setup and implementation documentation and for supporting fewer file formats than other tools.

IBM InfoSphere

IBM InfoSphere is a data integration platform designed to cleanse, monitor, and transform data. It delivers top-notch performance in data mapping and loading with its Massively Parallel Processing (MPP) capabilities. This data mapping tool is both scalable and flexible and handles significant data volumes in real time.

It can seamlessly integrate with other IBM Data Management solutions. However, it has a steeper learning curve which makes it less user-friendly. Also, it is costlier than many other data mapping tools.

Microsoft SQL Server Integration Services

Microsoft SQL Server Integration Services (SSIS) is the part of Microsoft SQL Server used for data integration and migration. It automates SQL Server database maintenance and multidimensional cube data updates. It requires substantial coding and has a workspace similar to Visual Studio.

SSIS has an intuitive GUI and comes with a comprehensive collection of pre-built tasks and transformation tools. On the downside, it is less efficient at handling JSON and offers limited Excel connectivity. It also requires skilled developers because of its coding interface.

Oracle Integration Cloud Service

Oracle Integration Cloud Service (ICS) is an integration application specializing in source-to-target mapping for numerous cloud-based applications and data sources. Its flexible design also supports certain on-premise data integration and 50+ native app adapters.

Oracle Integration Cloud Service (ICS) is a consolidated product of SaaS Extension and Data Integration. It can easily integrate with other Oracle tools and services. However, while its advanced suite of features, such as process automation and visual application building, is impressive, it might be overwhelming for some users. Additionally, due to the extensive range of features it provides, the cost can also be a deal breaker.

Dell Boomi AtomSphere

Dell Boomi AtomSphere is a cloud-based data integration and mapping tool that caters to companies of all sizes. With user-friendly drag-and-drop features, its visual designer can easily map and integrate data between different platforms. It has predefined accelerators, recipes, and solutions for streamlined data mapping.

The main drawback is its lack of detailed documentation. Plus, the point-and-click approach may not adequately handle complex scenarios.

7 Proven Data Mapping Techniques

When it comes to data mapping, it's important to know that there are several different methods of doing it. The one you choose depends on various factors, including:

- Nature of the task

- Resources at hand

- Skill level of the team

- Complexity of the data

These data mapping techniques can be manual, semi-automated, automated, or schema-based. The location of the mapping process also varies – either on-premise, in the cloud, or using open-source platforms.

Let’s discuss each method's capabilities and limitations so you can pinpoint a strategy that aligns with your specific needs and objectives.

Manual Data Mapping

In this approach, developers manually code the relationships between the source and destination data. While this can be time-consuming and more prone to human errors, it's suitable for small-scale or one-time operations with less complex databases.

Semi-Automated Data Mapping

This method involves both automated tools and manual input from the specialists. It's a cost-effective solution for small-scale data operations as it provides a balance between precision and automation.

Automated Data Mapping

Automated data mapping uses tools to handle the mapping process, reducing the need for manual coding. This method works great when you are handling large datasets and want to minimize human errors.

Schema Mapping

Schema mapping is a semi-automated technique that automatically links similar schemas with little manual intervention. It comes in handy when you need to understand how the systems involved structure their data.
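As a rough illustration of linking similar schemas automatically, the sketch below suggests field matches by name similarity using Python's standard `difflib.SequenceMatcher`. Name similarity is just one simple heuristic chosen for this example, and the field names and the 0.6 threshold are assumptions; real tools typically also consider data types and sample values.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    # Ignore case, underscores, and spaces when comparing field names.
    return name.lower().replace("_", "").replace(" ", "")


def suggest_matches(source_fields, target_fields, threshold=0.6):
    # For each source field, suggest the most similar target field,
    # or None when nothing is similar enough and a person should decide.
    suggestions = {}
    for source in source_fields:
        best, best_score = None, 0.0
        for target in target_fields:
            score = SequenceMatcher(None, normalize(source), normalize(target)).ratio()
            if score > best_score:
                best, best_score = target, score
        suggestions[source] = best if best_score >= threshold else None
    return suggestions


suggested = suggest_matches(
    ["cust_name", "Email", "zip"],
    ["CustomerName", "email", "PostalCode"],
)
# suggested == {"cust_name": "CustomerName", "Email": "email", "zip": None}
```

The unmatched `zip` field shows where the manual part of the process comes in: a specialist still has to confirm that it belongs with `PostalCode`.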

On-Premise Data Mapping

This technique is about mapping or connecting data from various sources within an organization's own physical infrastructure. While on-premise data mapping provides a sense of control and security, it can be costly and cumbersome in the long term. It's preferred by large organizations that need complete control over their data.

Cloud-based Data Mapping

Cloud-based data mapping connects and maps data from different sources using cloud-based services and infrastructure. This technique is used when you have large datasets, need to collaborate with multiple users or teams, or want to take advantage of advanced data analytics tools and machine learning capabilities of the cloud provider.

Cloud-based methods are fast, adaptable, and scalable to match the needs of modern businesses. However, these can have unexpected costs if not managed properly.

Open-Source Data Mapping

In the open-source data mapping method, you use software tools that are freely available and developed by a community of contributors. This technique is cost-effective and efficient but requires manual coding skills. The tools can be hosted either on premises or in the cloud.

Conclusion

Today, when information overload is a constant challenge, data mapping guides us through the complexity. It is the foundation for effective data management and integration. Without proper data mapping, you risk data inconsistencies, redundancies, and inaccuracies that undermine everything built on top of that data.

We know the journey of mastering data mapping is not easy. But there’s a way to ease this process – Estuary Flow. Its visual data mapping capabilities let you visualize your data elements, their transformations, and their interactions clearly and concisely. It provides a range of pre-built connectors and templates for integration with various systems, databases, and applications.

So, why wait? Get started with Flow today and take advantage of our free sign-up offer. Still have questions? Don't hesitate to reach out to us for more information.
