Cloud data pipelines have shattered the limitations of traditional data processing and created a way for organizations to ascend to new heights of efficiency, innovation, and competitive advantage. As more organizations pivot towards cloud-based solutions, understanding the nuances of cloud data pipelines becomes increasingly crucial.

Transitioning from traditional data management systems to cloud data pipelines is not a simple task. It's a process that demands a well-thought-out strategy, careful planning, and informed execution.

In today’s guide, we’ll discuss what a cloud data pipeline is and how it can benefit your organization. We'll also look at its different types and share some real examples to show how these pipelines help businesses thrive.

By the time you’re done reading it, you'll not only have a firm grip on the fundamentals of cloud data pipelines but also know how you can benefit from them.

What Is A Cloud Data Pipeline?

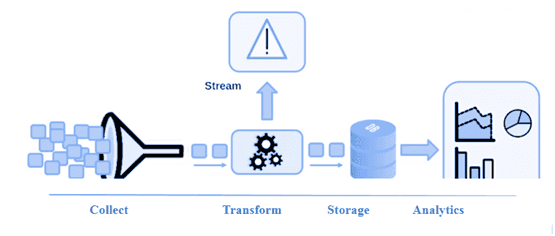

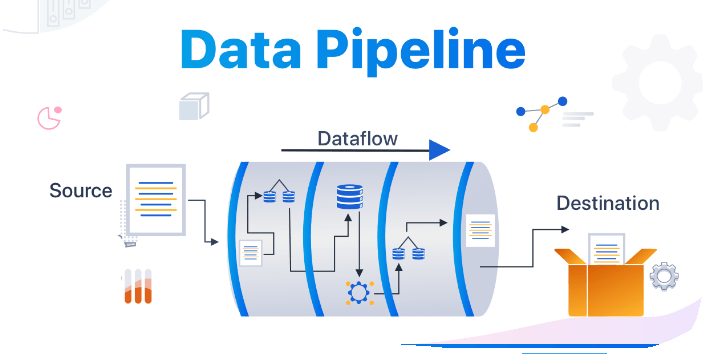

A Cloud Data Pipeline is an advanced process that efficiently transfers data from various sources to a centralized repository like cloud data warehouses or data lakes. It serves as a technological highway where raw data is transported, undergoes necessary transformations like cleaning, filtering, and aggregating, and is finally delivered in a format suitable for analysis.
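
To make the cleaning, filtering, and aggregating steps concrete, here is a minimal Python sketch. The record fields (`region`, `amount`) and the function names are assumptions made for illustration, not part of any particular product.

```python
from collections import defaultdict


def clean(record):
    """Trim and lowercase the region name; cast the amount to a float."""
    return {
        "region": record["region"].strip().lower(),
        "amount": float(record["amount"]),
    }


def run_pipeline(raw_records):
    """Clean, filter, and aggregate raw records into totals per region."""
    totals = defaultdict(float)
    for raw in raw_records:
        try:
            rec = clean(raw)
        except (KeyError, ValueError, AttributeError):
            continue  # drop records that cannot be cleaned
        if rec["amount"] <= 0:
            continue  # filter out non-positive amounts
        totals[rec["region"]] += rec["amount"]  # aggregate
    return dict(totals)


# Hypothetical raw input with inconsistent casing and bad values.
raw = [
    {"region": " EU ", "amount": "10.5"},
    {"region": "eu", "amount": "4.5"},
    {"region": "US", "amount": "-3"},
    {"region": "US", "amount": "oops"},
    {"region": "us", "amount": "7"},
]
result = run_pipeline(raw)
```

Here `result` holds one total per region, ready to be delivered to a warehouse table or a report.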

Cloud Data Pipelines can also synchronize data between different cloud-based systems for optimal analysis. Security measures applied along the way protect the data in transit, and quality checks help keep it accurate and reliable.

At its core, the Cloud Data Pipeline acts as a secure bridge between your data sources and the cloud to facilitate seamless and efficient data migration.

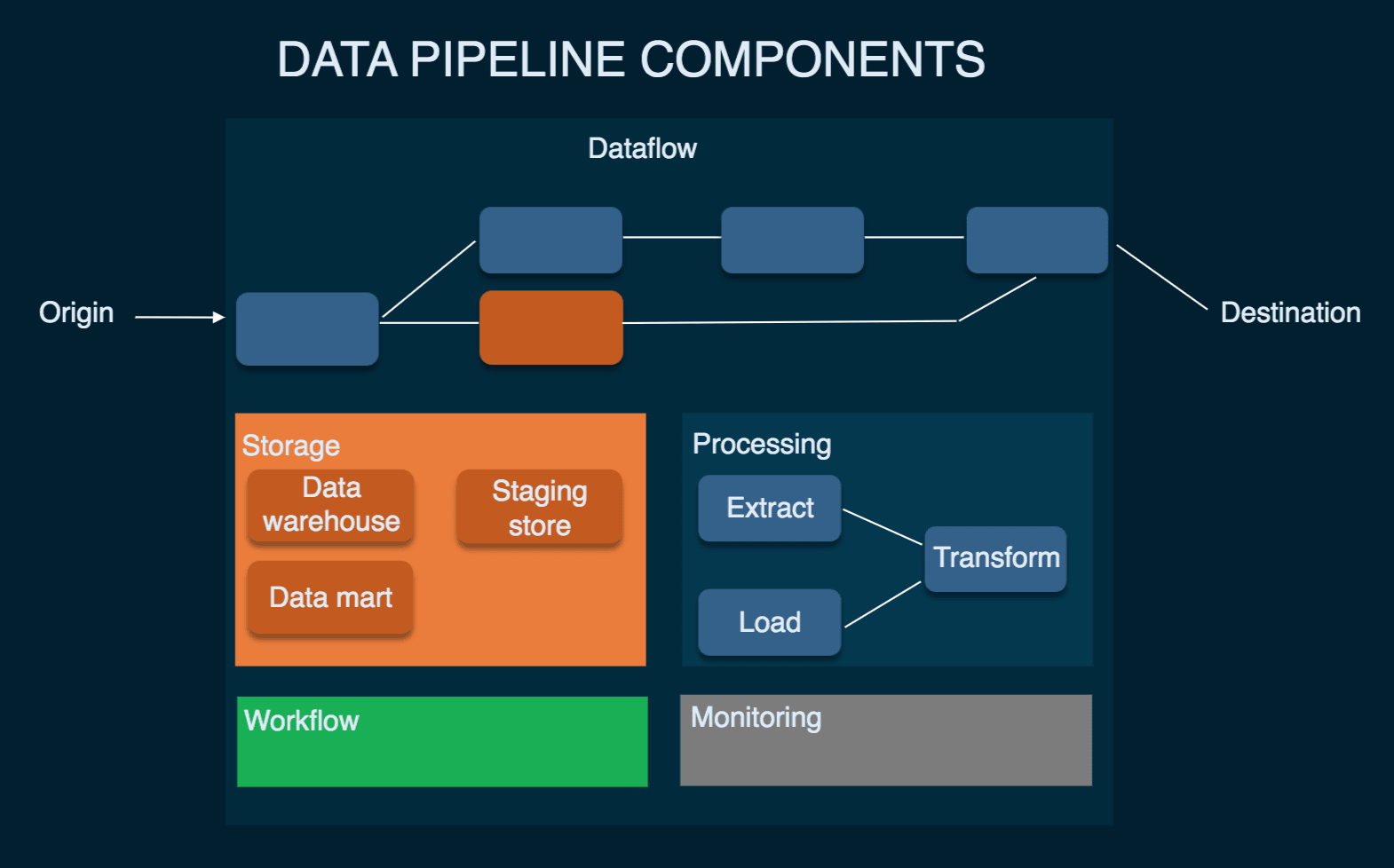

7 Components Of A Data Pipeline

A data pipeline is a complex system built with key components that each play an integral part. Let's look into the components briefly.

Origin

This is the data's starting point. Here, data from different sources such as APIs or IoT sensors enter the pipeline.

Dataflow

This is the journey itself. Data travels from the origin to the destination, transforming along the way. Often, it is structured around the ETL (Extract, Transform, Load) approach.

Storage & Processing

Storage preserves the data at each stage of the pipeline, while processing covers the series of actions that ingest, transform, and load it.

Destination

It is the last phase of the process. Depending on the use case, the destination could be a cloud data warehouse, a data lake, or an analysis tool.

Workflow

Workflow is the pipeline's roadmap, showing how different processes interact and depend on each other.
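
One common way to express such dependencies is a directed graph of tasks. The sketch below uses Python's standard-library `graphlib` to derive a valid run order; the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each task maps to the set of tasks it depends on.
workflow = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "aggregate": {"join"},
    "load_warehouse": {"aggregate"},
}


def execution_order(dependencies):
    """Return one valid run order in which every task follows its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(workflow)
```

Workflow orchestration tools apply the same idea at scale, adding scheduling and retries on top of the dependency graph.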

Monitoring

Monitoring is the watchful eye over the pipeline. It checks that every stage functions smoothly and correctly.
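
As a rough illustration, monitoring can start with counting what goes into and comes out of each stage. The `StageMonitor` class below is a hypothetical helper written for this sketch, not a real library API.

```python
import time


class StageMonitor:
    """Collects simple per-stage metrics: records in, records out, errors, seconds."""

    def __init__(self):
        self.metrics = {}

    def run(self, name, func, records):
        """Apply func to each record, recording counts and elapsed time."""
        stats = {"in": 0, "out": 0, "errors": 0, "seconds": 0.0}
        output = []
        start = time.perf_counter()
        for record in records:
            stats["in"] += 1
            try:
                output.append(func(record))
                stats["out"] += 1
            except Exception:
                stats["errors"] += 1  # count the failure and keep going
        stats["seconds"] = time.perf_counter() - start
        self.metrics[name] = stats
        return output


monitor = StageMonitor()
parsed = monitor.run("parse", int, ["1", "2", "x", "4"])
doubled = monitor.run("double", lambda n: n * 2, parsed)
```

After the run, `monitor.metrics` shows that the `parse` stage received four records and rejected one, which is the kind of signal an alerting rule could be built on.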

Technology

The backbone of the pipeline. This involves various tools and infrastructures that support data flow, processing, storage, workflow, and monitoring.

What Is Data Pipeline Architecture?

A data pipeline architecture is the blueprint for efficient data movement from one location to another. It involves using various tools and methods to optimize the flow and functionality of data as it travels through the pipeline.

A carefully designed architecture keeps data moving efficiently through the pipeline and helps it reach its destination on time.

Here are the 6 types of data pipeline architecture:

- In Kappa architecture, all data is treated as a stream and is ingested and processed in real time through a single path, so processing logic is not duplicated in a separate batch layer.

- The ETL (Extract, Transform, Load) Architecture involves extracting data from various sources, transforming it to fit the target system, and loading it into the destination.

- Batch processing refers to the practice of processing data in scheduled intervals, like daily or weekly, in groups or batches.

- Microservices architecture uses loosely coupled and independently deployable services to process data.

- Lambda architecture combines batch processing and real-time processing: a batch layer periodically processes the full dataset, while a speed layer keeps the results updated in real time between batch runs.

- Real-Time streaming ensures data is processed immediately as it is generated, with minimal delay or latency.
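
To illustrate how the Lambda architecture differs from a pure streaming approach, the following sketch keeps a batch view and a real-time increment and merges them at query time. The page-view events and all names are assumptions for illustration only.

```python
from collections import Counter


def batch_view(historical_events):
    """Batch layer: recompute page-view counts from the full historical log."""
    return Counter(event["page"] for event in historical_events)


class SpeedLayer:
    """Speed layer: counts events that arrived after the last batch run."""

    def __init__(self):
        self.counts = Counter()

    def on_event(self, event):
        self.counts[event["page"]] += 1


def query(page, batch, speed):
    """Serving layer: merge the batch view with the real-time increments."""
    return batch[page] + speed.counts[page]


history = [{"page": "/home"}, {"page": "/home"}, {"page": "/pricing"}]
batch = batch_view(history)

speed = SpeedLayer()
speed.on_event({"page": "/home"})  # arrives after the batch run

home_views = query("/home", batch, speed)
pricing_views = query("/pricing", batch, speed)
```

A Kappa-style design would drop `batch_view` and rebuild state by replaying the event log through the streaming path alone.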

Now that we know the basics of cloud data pipelines, let’s discuss their different types for even better comprehension.

5 Types Of Cloud Data Pipelines

Here are the 5 major types of cloud data pipelines:

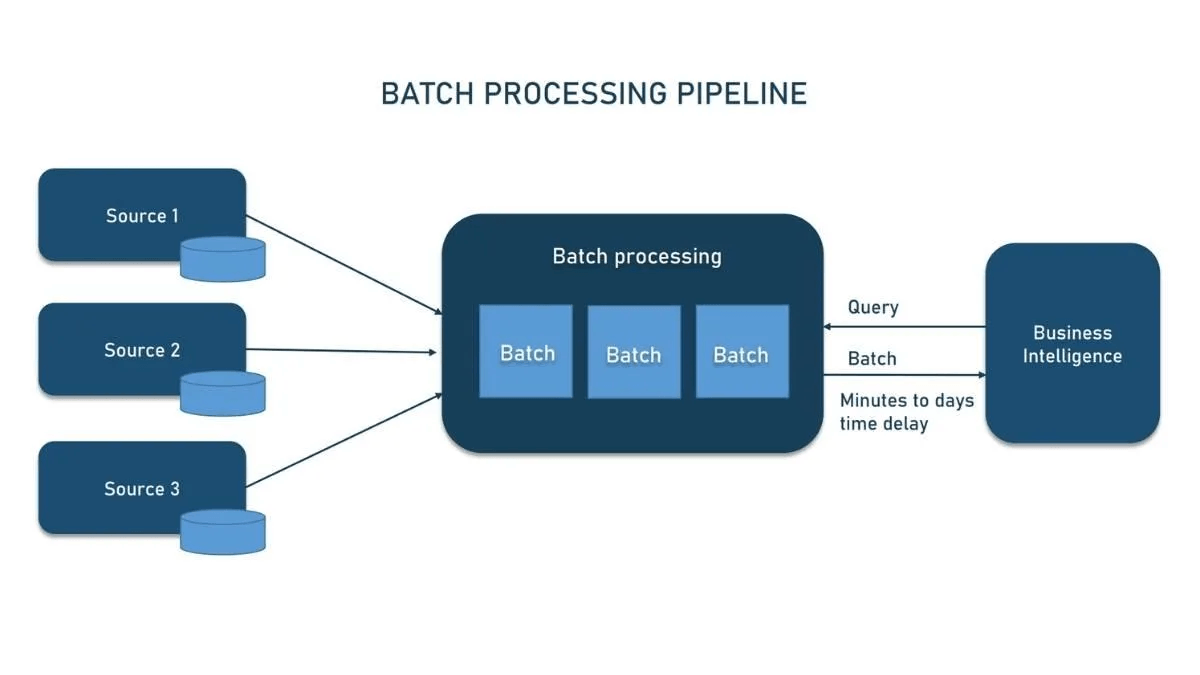

Batch Processing Pipelines

Batch processing pipelines collect data into chunks, or batches, and transfer it at set intervals, from every few minutes to every few hours. These pipelines are commonly used when working with historical data and play a crucial role in traditional analytics.

Batch processing pipelines are perfect for tasks that involve aggregating large volumes of data, generating reports, performing complex calculations, and conducting in-depth analysis.

These pipelines efficiently process large amounts of data in a structured and controlled manner. Because they run at specified time intervals, they can gather data from various sources, such as databases, files, or APIs, and perform transformations and calculations on each batch as a whole.
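
A minimal sketch of the batching idea, assuming a fixed batch size rather than a time-based schedule to keep the example short:

```python
from itertools import islice


def batches(records, size):
    """Yield successive lists of up to `size` records."""
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def process_batch(chunk):
    """Compute a summary over the whole batch at once."""
    return {"count": len(chunk), "total": sum(chunk)}


# Seven hypothetical measurements processed three at a time.
summaries = [process_batch(chunk) for chunk in batches(range(1, 8), 3)]
```

In a scheduled pipeline the same `process_batch` step would run once per interval over whatever data accumulated since the previous run.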

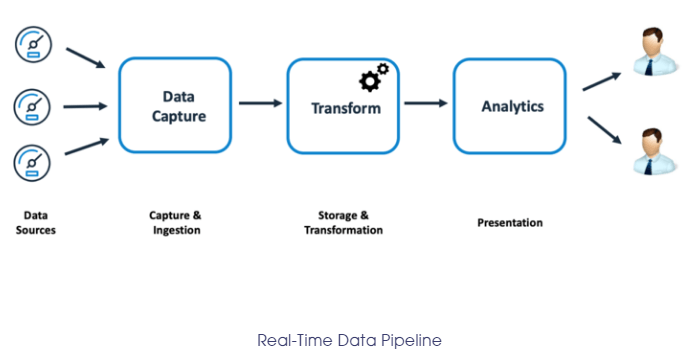

Real-Time Or Streaming Data Pipelines

Real-time or streaming data pipelines are designed for situations where immediacy is crucial. Unlike batch processing pipelines, real-time pipelines process data as it flows continuously. These pipelines are specifically tailored to handle data that is constantly changing or requires immediate action.

Streaming data pipelines ensure that reports, metrics, and summary statistics are updated in real-time as new data arrives so you can make timely decisions based on the most up-to-date information available.

Real-time or streaming pipelines are particularly beneficial when data needs to be processed and analyzed as it is generated. For example, financial market updates, social media monitoring, or real-time tracking of system metrics greatly benefit from the immediate processing and analysis provided by these pipelines.
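
The sketch below shows the core difference from batch processing: summary statistics are updated one event at a time instead of being recomputed over a stored dataset. The class and function names are hypothetical.

```python
class RunningStats:
    """Keeps count, mean, and maximum up to date without storing past events."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self.maximum = None

    def update(self, value):
        self.count += 1
        # Incremental mean: shift the old mean toward the new value.
        self.mean += (value - self.mean) / self.count
        self.maximum = value if self.maximum is None else max(self.maximum, value)
        return self.count, self.mean, self.maximum


def stream_summaries(events):
    """Yield a fresh summary after every incoming event."""
    stats = RunningStats()
    for value in events:
        yield stats.update(value)


snapshots = list(stream_summaries([10.0, 20.0, 30.0]))
```

Each element of `snapshots` is the summary a dashboard would have shown at the moment that event arrived.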

Open-Source Pipelines

Open-source pipelines are built on open-source technologies and frameworks. The key advantage of open-source pipelines lies in their public availability and customizable nature. You can access and utilize these pipelines, modify their source code, and adapt them to suit your unique requirements.

By embracing open-source pipelines, you can tap into a community of developers and users who actively contribute to the improvement and evolution of these technologies. This collaborative ecosystem fosters innovation, as new features, enhancements, and bug fixes are regularly shared, benefiting the entire community.

Since open-source pipelines are freely available, you can save on licensing fees and reduce the overall expenses associated with proprietary solutions.

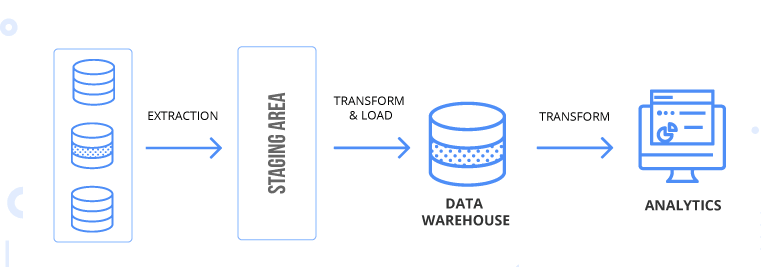

Extract, Transform, Load (ETL) Pipeline

The Extract, Transform, Load (ETL) cloud data pipeline facilitates the process of gathering, manipulating, and loading data from various sources into a cloud-based system. It combines the capabilities of extraction, transformation, and loading to create a seamless flow of data.

During the extraction phase, the pipeline uses connectors and APIs to collect data from different sources, like databases, applications, or even external systems. This data can be structured or unstructured. Then in the transformation stage, the extracted data undergoes a series of operations, manipulations, and validations to ensure its quality, consistency, and relevance.

Finally, the data arrives at the loading phase. Once it has been extracted and transformed, the pipeline loads and stores it in the cloud-based system where it can be accessed and utilized efficiently. The ETL pipeline streamlines this process and provides seamless integration with various cloud platforms.

An ETL cloud data pipeline removes much of the complexity and manual effort associated with data integration. It enhances efficiency, saving time and resources while helping you make decisions based on the most current and relevant information.
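
The three phases can be sketched end to end in a few lines of Python. This is an illustration under stated assumptions: a CSV string stands in for the source system, an in-memory SQLite database stands in for the cloud warehouse, and the table and column names are made up.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from an API or database.
SOURCE_CSV = """id,email,signup_date
1, Alice@Example.com ,2023-01-05
2,bob@example.com,2023-02-11
3,,2023-03-01
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize emails and drop rows that have none."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue
        cleaned.append((int(row["id"]), email, row["signup_date"]))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users "
        "(id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO users VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse
load(transform(extract(SOURCE_CSV)), conn)
loaded = conn.execute("SELECT id, email FROM users ORDER BY id").fetchall()
```

Using `INSERT OR REPLACE` keeps the load step safe to rerun, which matters when a scheduled job is retried after a failure.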

Event-Driven Pipeline

The event-driven cloud data pipeline uses the power of events to trigger actions and enable seamless data processing and integration, all while maintaining real-time responsiveness.

These events are generated by various sources, like user actions, system notifications, or data changes. When an event occurs, it acts as a signal that triggers a series of predefined actions within the pipeline. This event-driven nature ensures that data processing and integration are executed immediately and automatically without the need for manual intervention.

The pipeline architecture ensures the efficient and timely handling of data. As events are generated, they are instantly captured and processed by event-driven components, which can include event routers, processors, and handlers. Event-driven pipelines usually operate in real time, although some introduce a degree of latency.
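
A minimal sketch of an event router with handlers, assuming an in-process design and a hypothetical `order_placed` event. Production systems would typically place a message broker between the event sources and the handlers.

```python
from collections import defaultdict


class EventRouter:
    """Routes each event to the handlers subscribed to its type."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event):
        """Invoke every handler for the event; return how many ran."""
        handlers = self._handlers.get(event["type"], [])
        for handler in handlers:
            handler(event)
        return len(handlers)


audit_log = []
inventory = {"sku-1": 5}


def record_audit(event):
    audit_log.append(event["type"])


def decrement_stock(event):
    inventory[event["sku"]] -= event["quantity"]


router = EventRouter()
router.subscribe("order_placed", record_audit)
router.subscribe("order_placed", decrement_stock)

handled = router.publish({"type": "order_placed", "sku": "sku-1", "quantity": 2})
ignored = router.publish({"type": "unknown"})
```

The single `order_placed` event triggers both actions automatically, with no manual step in between.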

After exploring the different types of cloud data pipelines, let’s see why cloud data pipelines have become indispensable tools for businesses.

9 Proven Benefits Of Using Cloud Data Pipelines

Here are some of the benefits of using cloud data pipelines.

Data Centralization

The first and foremost benefit of using cloud data pipelines is the centralization of your data. It allows your marketing teams, BI teams, and data analysts to access the same set of data from a unified location, fostering collaboration and transparency across your organization.

Flexible & Agile

Cloud data pipelines are designed to adapt and respond to changes in data sources or user needs, providing an agile framework that grows along with your business. Whether you're dealing with a small dataset or big data, these pipelines can scale up or down based on your needs. This elasticity helps maintain performance, reduces costs, and eases concerns about infrastructure limitations.

Cost-Effective & Efficient

Cloud data pipelines allow for speedy deployment and easy access to shared data. They also scale as your workloads increase, without the upfront cost of provisioning extra infrastructure.

High-Quality Data

One major advantage of data pipelines is their ability to refine and clean data as it passes through. This ensures that your reports are consistently accurate and reliable, setting you on the path to meaningful and useful data analytics.

Standardization Simplified

Standardization is crucial when it comes to data analysis. Cloud data pipelines help convert your raw data into a standardized format that's ready for evaluation, making it easier for your analysts to draw actionable insights.
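
As an illustration, standardization often means mapping differently named fields and differently formatted dates onto one schema. The field aliases and date formats below are assumptions chosen for the sketch, including the choice to read `05/03/2023` as day/month/year.

```python
from datetime import datetime

# Assumed input formats; day/month ordering is a choice made for this sketch.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")

# Hypothetical source field names mapped to the standard schema.
FIELD_ALIASES = {
    "customer": "customer_id",
    "cust_id": "customer_id",
    "customer_id": "customer_id",
    "date": "order_date",
    "order_date": "order_date",
}


def parse_date(text):
    """Return the date in ISO 8601 form, trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def standardize(record):
    """Rename known fields, drop unknown ones, and normalize value formats."""
    out = {}
    for key, value in record.items():
        name = FIELD_ALIASES.get(key.strip().lower())
        if name is not None:
            out[name] = value
    out["customer_id"] = str(out["customer_id"]).strip()
    out["order_date"] = parse_date(out["order_date"])
    return out


a = standardize({"Cust_ID": 42, "Date": "05/03/2023", "notes": "n/a"})
b = standardize({"customer": " 42 ", "order_date": "20230305"})
```

Both inputs end up as the same standardized record, so downstream analysis no longer needs to know which system each one came from.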

Iterative Process

With data pipelines, you're looking at an iterative process that allows you to identify trends, find performance issues, and optimize your data flow. This is critical for maintaining a standardized data architecture and facilitates the reuse of your pipelines.

Integration Made Easy

Cloud data pipelines excel at integrating new data sources. While cloud data pipelines offer pre-built connectors and integrations, they also provide flexibility for custom integration scenarios. You can leverage APIs and SDKs to create custom connectors or integrate with specialized systems specific to your organization's needs. This flexibility lets you integrate even unusual or complex data sources.
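
One way to picture a custom connector is as a small class that implements a shared interface. The `SourceConnector` interface below is hypothetical and written only for this sketch; real platforms define their own connector SDKs.

```python
from abc import ABC, abstractmethod


class SourceConnector(ABC):
    """Hypothetical interface that every source connector implements."""

    @abstractmethod
    def read(self):
        """Yield records as dictionaries."""


class InMemoryConnector(SourceConnector):
    """Stand-in for a custom connector; a real one would call an API or SDK."""

    def __init__(self, rows):
        self._rows = rows

    def read(self):
        for row in self._rows:
            yield dict(row)


def ingest(connectors):
    """Pull records from every connector and tag each with its source name."""
    records = []
    for name, connector in connectors.items():
        for record in connector.read():
            records.append({**record, "source": name})
    return records


ingested = ingest({
    "crm": InMemoryConnector([{"id": 1}]),
    "billing": InMemoryConnector([{"id": 7}, {"id": 8}]),
})
```

Because `ingest` depends only on the interface, adding a new source means writing one more connector class rather than changing the pipeline.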

Better Decision-Making

Decisions need to be data-driven. Cloud data pipelines streamline the movement of data from diverse sources into a centralized data repository or data warehouse. By consolidating data from various systems and applications, these pipelines provide a unified view of your organization's data. This centralized data repository becomes a valuable resource for decision-makers as it provides easy access to comprehensive and up-to-date information.

Cloud data pipelines also facilitate collaborative decision-making by providing a single source of truth. Decision-makers from different departments or teams can access and analyze the same data, promoting a shared understanding and alignment.

Enhanced Security

Cloud data pipelines are built to stringent security guidelines so that your data is protected while it moves between systems. They encrypt data in transit, most commonly with Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL) protocol.
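
As a small illustration of encryption in transit, Python's standard `ssl` module can build a client-side context that verifies server certificates and refuses older protocol versions. The TLS 1.2 minimum is an assumption chosen for this sketch, not a requirement stated by any particular platform.

```python
import ssl


def make_tls_context():
    """Build a client TLS context with certificate and hostname verification."""
    context = ssl.create_default_context()
    # Assumed policy for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_tls_context()
```

A context like this can then be passed to the networking code that opens connections to sources and destinations.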

Cloud data pipelines also implement robust access controls and authentication mechanisms to ensure that only authorized users have access to the data. User authentication and authorization protocols, such as multi-factor authentication, role-based access control (RBAC), and identity and access management (IAM) systems, are employed to restrict access to sensitive data within the pipeline.
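
Role-based access control can be sketched as a lookup from roles to permitted actions. The roles, permissions, and dataset name below are hypothetical; managed cloud services usually provide this through their IAM systems rather than application code.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "manage"},
}


def is_allowed(user_roles, action):
    """Return True if any of the user's roles grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


def write_dataset(user, dataset, rows):
    """Refuse the write unless the user holds a role that permits it."""
    if not is_allowed(user["roles"], "write"):
        raise PermissionError(f"{user['name']} may not write to {dataset}")
    return len(rows)


analyst = {"name": "dana", "roles": ["analyst"]}
engineer = {"name": "eli", "roles": ["engineer"]}

written = write_dataset(engineer, "sales", [{"id": 1}, {"id": 2}])
```

Calling `write_dataset` with the `analyst` user raises `PermissionError`, because that role only grants `read`.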

Now that you know the benefits of cloud data pipelines, let's explore some real-world examples of how organizations from various industries are leveraging them and how you too can put these pipelines into action.

4 Real-World Use Cases Of Cloud Data Pipelines

Let's look into some real-world case studies of cloud data pipelines.

Hewlett Packard Enterprise's Shift To Stream Processing

Hewlett Packard Enterprise (HPE), a global platform-as-a-service provider, faced a challenge with its InfoSight solution. InfoSight, designed to monitor data center infrastructure, gathered data from over 20 billion sensors installed in data centers worldwide. This vast network sent trillions of metrics to InfoSight every day, amounting to petabytes of telemetry data.

To improve the customer experience and enhance predictive maintenance capabilities, HPE recognized the need to update its infrastructure to support near-real-time analytics. The goal was clear – to build a robust data architecture that could facilitate streaming analytics. The framework needed to be always available, recover swiftly from failures, and scale elastically.

HPE turned to the Lightbend Platform to build this infrastructure. The platform comes equipped with several streaming engines such as Akka Streams, Apache Spark, and Apache Kafka. These engines manage tradeoffs between data latency, volume, transformation, and integration, among other things.

This shift to data stream processing allowed HPE to significantly enhance its InfoSight solution. Now it's capable of handling massive volumes of real-time data, resulting in improved predictive maintenance and, ultimately, a better customer experience.

Uber's Utilization Of Streaming Pipelines For Real-Time Analytics

As a global leader in mobility services, Uber places a special focus on near real-time insights for its operations. The company uses machine learning extensively for tasks like dynamic pricing, estimated time of arrival calculations, and supply-demand forecasting. To do this effectively, Uber relies on streaming cloud data pipelines to collect current data from driver and passenger apps.

The centerpiece of Uber's data flow infrastructure is Apache Flink, a distributed processing engine. Apache Flink processes supply and demand event streams and calculates features that are then supplied to machine learning models. The models then generate predictions, updated on a minute-by-minute basis, aiding Uber in its decision-making processes.

In addition to real-time data handling, Uber also leverages batch processing cloud data pipelines. This strategy lets the company detect medium-term and long-term trends, creating a more comprehensive overview of its operational landscape.

Leveraging Data Pipelines For Machine Learning At Dollar Shave Club

Dollar Shave Club, a prominent online retailer delivering grooming products to millions, takes full advantage of cloud data pipelines to improve its customer experience. The company hosts a sophisticated data infrastructure on Amazon Web Services with a Redshift cluster at its heart. This central data warehouse collects data from various sources, including production databases.

They make use of Apache Kafka to mediate data flow between applications and into the Redshift cluster. The Snowplow platform is employed to collect event data from various interfaces, such as web, mobile, and server-side applications. This data encompasses page views, link clicks, user activity, and several custom events and contexts.

To glean further insights from their data, they have built a recommender system. This system determines which products to highlight and how to rank them in monthly emails sent to customers. The core of this engine is Apache Spark, running on the Databricks unified data analytics platform.

Streamlining Data Centralization At GoFundMe

As the world's leading social fundraising platform, GoFundMe manages an impressive volume of data, with over 25 million donors and upwards of $3 billion in donations. However, they faced a significant challenge: the absence of a central data warehouse.

Without centralization, their IT team struggled to gain a comprehensive understanding of their business trajectory. Recognizing the need for a more streamlined approach, GoFundMe set out to establish an adaptable data pipeline.

This solution had to not only provide connectors for all their data sources but also offer the flexibility to create customized Python scripts for on-the-fly data modifications. This gave them a high degree of control over their data processes.

The resulting data pipeline offered both flexibility and data integrity. One significant feature was the implementation of safeguards against the use of personal ETL scripts which could potentially alter data inadvertently.

Unlocking Efficiency With Estuary Flow In Cloud Data Pipelines

When it comes to selecting a cloud data pipeline solution, our no-code DataOps platform, Estuary Flow, sets the gold standard. It provides an all-in-one solution for real-time data ingestion, transformation, and replication.

Here's how Estuary Flow excels in enhancing the data quality and management in your organization:

Fresh Data Capture

Estuary Flow kicks off its process by capturing fresh data from a wide array of sources, including databases and applications. Employing Change Data Capture (CDC) technology ensures that the collected data is always the latest, thereby mitigating concerns about outdated or duplicated data.
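
The general idea behind Change Data Capture can be sketched as replaying a stream of change events against a replica. This is a conceptual illustration only: the event shape and function names are assumptions and do not represent Estuary Flow's API or internals.

```python
def apply_change(replica, change):
    """Apply one change event (insert, update, or delete) to the replica."""
    op, key = change["op"], change["key"]
    if op in ("insert", "update"):
        # Merge the changed columns into the existing row, if any.
        replica[key] = {**replica.get(key, {}), **change["row"]}
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")
    return replica


# Hypothetical change events, in the order the source database emitted them.
changes = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Bo", "plan": "free"}},
    {"op": "update", "key": 1, "row": {"plan": "pro"}},
    {"op": "delete", "key": 2},
]

replica = {}
for change in changes:
    apply_change(replica, change)
```

Because only the changes are shipped, the replica reflects the latest state of the source without repeatedly copying whole tables.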

Customizable Data Transformations

Once the data enters the system, Estuary Flow provides robust tools for customization. You can employ streaming SQL and JavaScript to tailor data as it streams into the system. This functionality allows for a high degree of adaptability to meet specific data requirements.

Comprehensive Data Testing

Estuary Flow puts a strong emphasis on data quality through its built-in testing features. This function ensures the data pipelines remain error-free and reliable. Any inconsistencies or potential issues are identified swiftly, preventing them from escalating into major problems.

Real-Time Data Updates

One of Flow's standout features is its ability to maintain the cleanliness of your data over time. By creating low-latency views of your data, it keeps the information current and consistent across different systems.

Seamless Data Integration

Estuary Flow allows the integration of data from different sources. It provides a comprehensive view of your data landscape. Consequently, decision-making is backed by high-quality, integrated data, enhancing the accuracy of insights and the quality of services.

Conclusion

Cloud data pipelines, with their ability to adapt and process a wide array of data, make a compelling case for their implementation. The advantages they offer, like increased efficiency, scalability, and superior data-centric decision-making, further highlight their importance to businesses.

If you're considering implementing cloud data pipelines in your organization, Estuary Flow is a tool that simplifies the process. Offering robust capabilities for real-time data processing, Estuary Flow is designed to facilitate the easy creation and management of cloud data pipelines.

You can try Estuary Flow for free by signing up here or reaching out to our team to see how we can assist you.
