Organizations rely on vast data volumes for effective decision-making. With constantly expanding data volumes, there is a rise in demand for databases that can handle it all. Amazon Relational Database Service (RDS) plays a pivotal role in this by providing a reliable platform for handling this data as it’s easy to set up.

However, Amazon RDS is primarily good for processing transactional data. It does not have the power to process large-scale analytical queries or derive insights for impactful decision-making. By loading your data from Amazon RDS to BigQuery, you can leverage the warehousing and advanced analytical capabilities of BigQuery. It also offers you real-time data monitoring, dynamic scalability, and simplified data analysis at reduced costs.

But, the best way to learn how to load your data into BigQuery from Amazon RDS is to try it out yourself. This is exactly what this tutorial is all about. So, let’s dive in.

Amazon RDS: Overview

Amazon Relational Database Service (Amazon RDS) is a serverless relational database service offered by Amazon Web Services (AWS). It supports effortless deployment through its managed service model, which automates the database setup and configuration in the cloud environment. You can effortlessly set up a new database with just a few API calls without any manual setup. In addition, it has the ability to collaborate with multiple popular database engines such as MySQL, PostgreSQL, Oracle, MariaDB, and SQL Server. This diverse engine support facilitates combining the scalability and managed services of Amazon RDS with the power of multiple databases.

Amazon RDS also automates time-consuming and repetitive administrative tasks such as database configuration, patching, and backup maintenance. These robust functionalities assist you in working on the development part of the application rather than putting in the effort to manage the infrastructure.

Some key features of Amazon RDS are mentioned below.

- Scalability: Amazon RDS offers you the ability to scale up or down on demand effortlessly. This permits you to handle fluctuating workloads with optimal processing speed and without much downtime.

- Automated Software Patching: Amazon RDS has the functionality to automatically apply software updates to the database as soon as they are available. This ensures the databases are continuously upgraded to the latest version and includes all security fixes.

- Automated Backups: Amazon RDS automates the backups for all the databases and securely stores them in Amazon S3. This facilitates fault tolerance and ensures data durability.

What Is BigQuery?

BigQuery is a highly scalable cloud-based data warehousing platform offered by Google Cloud Platform (GCP). It leverages Google's infrastructure to efficiently store, process, manage, and analyze petabyte-scale datasets. BigQuery also supports collaboration, consistency, and high-performance querying of these massive datasets using SQL-like syntax.

Its architecture offers columnar storage and a highly parallelized processing engine for achieving optimal performance and quick results on large and growing datasets. This also ensures automatic scaling by dynamically allocating resources for increasing or decreasing requirements.

Some of the key features of BigQuery are mentioned below.

- User-defined Functions: BigQuery offers user-defined functions (UDFs) by allowing you to write and use custom SQL functions. This facilitates query reusability and encapsulates complex logic and functionalities with the datasets.

- Data Import/Export Formats: BigQuery allows you to work with multiple data import and export formats such as JSON, CSV, Parquet, Avro, and ORC. This lets you seamlessly transfer data between BigQuery and multiple other platforms for smooth data processing.

- Disaster Recovery: BigQuery facilitates cross-region replication of your data for disaster recovery. With this feature, you can ensure high availability and no downtime, even in case of any failures or interruptions.

How to Migrate From Amazon RDS to BigQuery

Here are two methods you can use to reliably load data from Amazon RDS to BigQuery:

- Method 1: Using Estuary Flow to load data from Amazon RDS to BigQuery

- Method 2: Using manual data export/import to load data from Amazon RDS to BigQuery

Method 1: Using Estuary Flow to Load Data From Amazon RDS to BigQuery

Estuary Flow is a no-code, real-time ETL (extract, transform, load) platform that enables you to streamline your data-loading process in real time. The data extraction and loading is completely automated, thanks to its readily available connectors, allowing you to integrate your data without requiring prior coding experience.

Prerequisites

Step 1: Configure Amazon RDS as the Source

- Log in to your Estuary account to start configuring Amazon RDS as your data source.

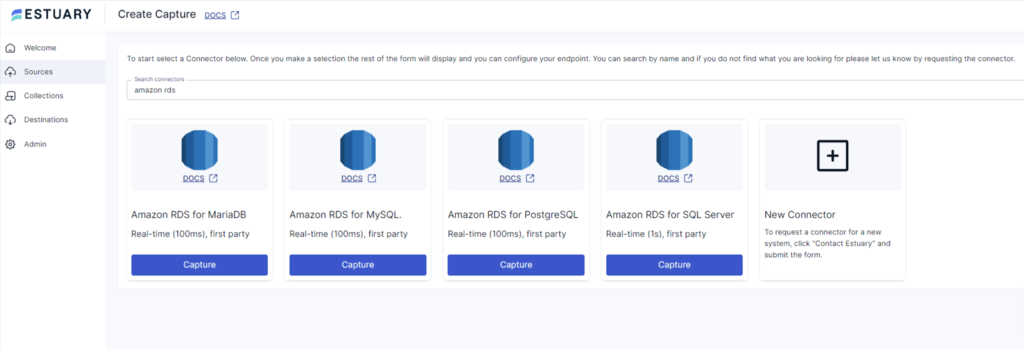

- Go to the Sources tab on the dashboard.

- Click on the + NEW CAPTURE button on the Sources page.

- Using the Search connectors box, search for the Amazon RDS connector and then click on the Capture button.

You can select one of the many connector options, including Amazon RDS for MariaDB, Amazon RDS for MySQL, Amazon RDS for PostgreSQL, or Amazon RDS for SQL Server.

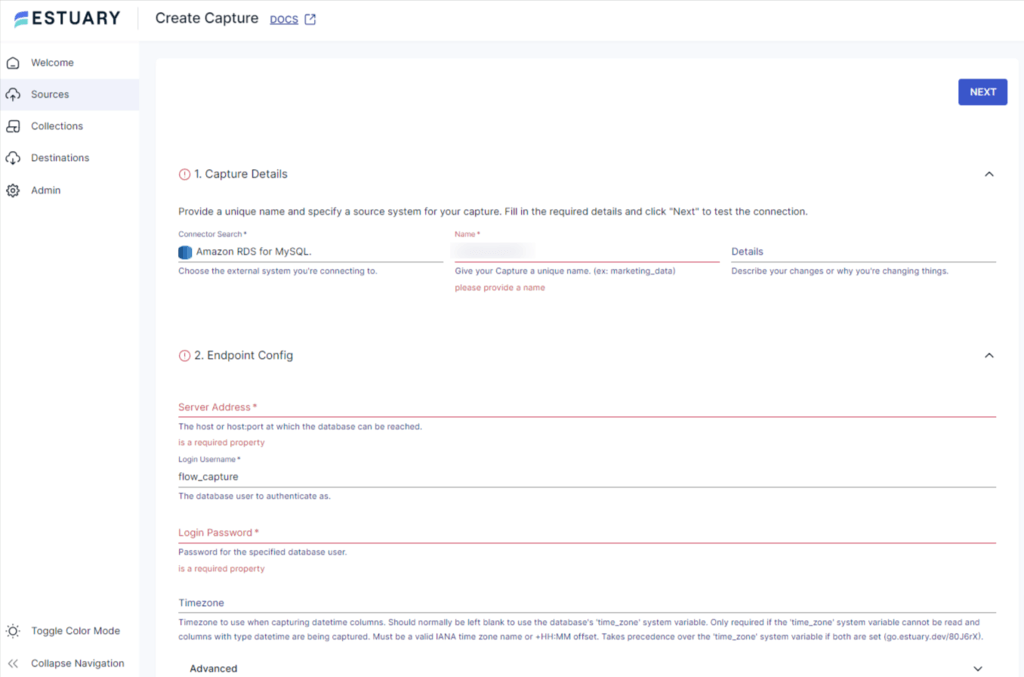

- You will be redirected to the Amazon RDS connector page. Fill in all the specified details, such as Server Address, Login Username, and Login Password.

- Click on NEXT > SAVE AND PUBLISH. The CDC connector will capture change events from MySQL into Flow Collections via the Binary Log.

Step 2: Configure BigQuery as the Destination

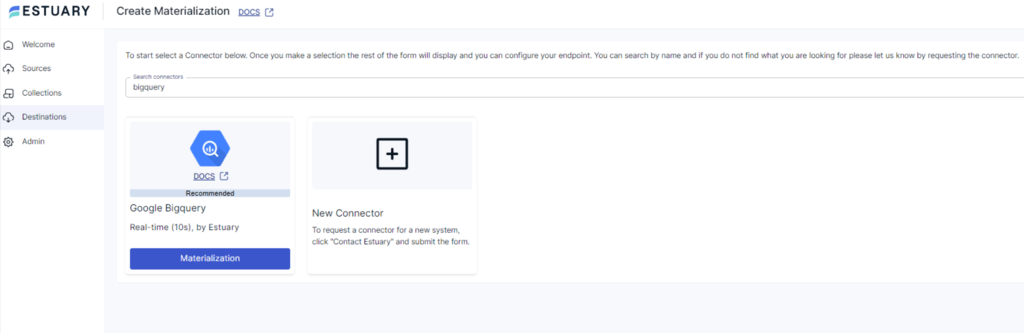

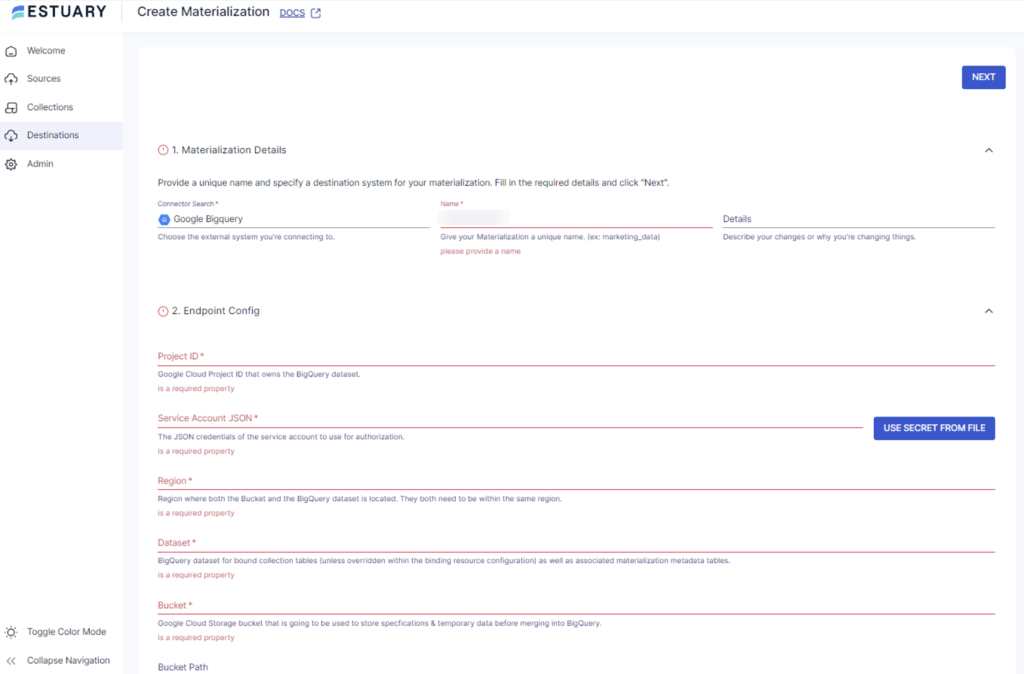

- Select the Destinations option on the dashboard to start configuring the destination end of the data pipeline.

- Click on the + NEW MATERIALIZATION button on the Destinations page.

- Using the Search connectors box, search for the BigQuery connector and click on its Materialization button.

- You will be redirected to the BigQuery connector page. Fill in all the specified details, such as Project ID, Service Account JSON, Region, Dataset, and Bucket.

- While your source data from Flow collections will be automatically added to your materialization, consider using the Source Collections section to do this manually.

- Click on NEXT > SAVE AND PUBLISH. This will materialize the data from your Flow collections into BigQuery tables.

Benefits of Using Estuary Flow

- Change Data Capture (CDC): Estuary Flow offers CDC support for processing data in real-time. It facilitates near instantaneous synchronization and continuous updating of all the changes in the data to maintain integrity.

- Built-in Testing: Flow maintains the quality of data while migration by using its built-in testing feature. This ensures that the data that you are loading from Amazon RDS to BigQuery is accurate and does not result in unexpected errors.

- Built-in Connectors: Estuary Flow provides you with more than 300 ready-to-use connectors for creating custom pipelines. This streamlines your entire data-loading process and makes it less prone to human errors.

Method 2: Using Manual Data Export/Import to Load Data From Amazon RDS to BigQuery

Let’s explore the step-by-step instructions to load your data from Amazon RDS to BigQuery by manually transferring data files. This involves exporting data from Amazon RDS and temporarily storing it in Amazon S3, following which, the data is then imported into BigQuery.

Step 1: Create an S3 Bucket

- Sign into your AWS Management Console.

- Open the Amazon S3 console by clicking on S3 under Storage & Content Delivery.

- Click on Create Bucket from the Amazon S3 console dashboard.

- Fill in the specified details, such as Bucket Name and AWS Region, and click on Create. Amazon S3 will successfully create your bucket and display it in the Buckets pane.

Step 2: Export Data From Amazon RDS to S3 Bucket

Use the following CLI command to export your data from Amazon RDS to the S3 bucket.

plaintextaws rds-data execute-statement

--database <database-name>

--resource-arn <db-cluster-arn>

--Secret-arn <secret-arn>

--sql “SELECT * FROM <table-name>”

--Include-result-metadata

--Output text > s3://<bucket-name>/data.txtSubstitute the following parameters in the above command:

- <database-name>: The name of your Amazon RDS database.

- <db-cluster-arn>: The arn of your RDS cluster.

- <secret-arn>: The arn of your secret.

- <table-name>: The name of the table from which the data has to be exported.

- <bucket-name>: The name of your newly created S3 bucket.

Step 3: Import Data From S3 Bucket into BigQuery

- In the Google Cloud Console, navigate to the BigQuery page.

- In Transfers, click Create a Transfer.

- You will be redirected to the Create Transfer page. Here, enter all the mandatory details as mentioned below:

- In the Source box under the Source type section, select Amazon S3.

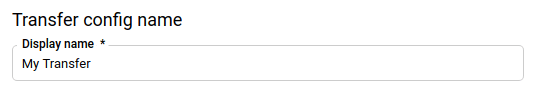

- In the Display name box under the Transfer config name section, fill in a name for the transfer.

- In the Schedule options section, specify a Repeat frequency.

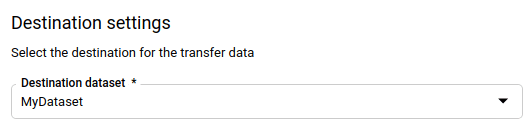

- In the Destination dataset box under the Destination settings section, choose the dataset you created to store your data.

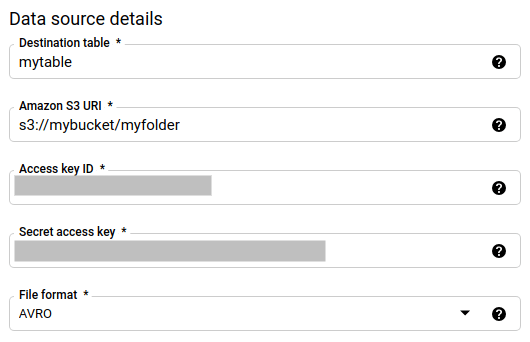

- Under the Data source details section, fill in all the fields such as Destination table, Amazon S3 URI, Access key ID, Secret access key, and File Format.

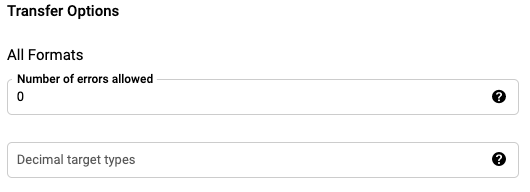

- In the Transfer Options section, fill in the Number of errors allowed.

- Select the format type and fill in the corresponding details.

- Select a Service account associated with your Google Cloud Project.

- Save all your changes by clicking the Save button to finish the importing process.

The Takeaway

Loading your data from Amazon RDS to BigQuery facilitates effective data analysis and management. It allows you to leverage the highly scalable and advanced analytical querying capabilities of BigQuery.

There are two methods for loading data from Amazon RDS to Google BigQuery. The manual export/import method uses CLI (Command Line Interface) commands to export data from Amazon RDS into the Amazon S3 bucket as a temporary location. Then, this data is imported from the S3 bucket into BigQuery using Google Cloud Console. The process is time-consuming and error-prone due to the involvement of multiple steps.

No-code ETL platforms offer a powerful solution to overcome time-consuming hurdles. Estuary Flow automates the entire data extraction and loading processes and offers an extensive connector set with real-time data processing capabilities.

Automate your data loading process with the use of Estuary Flow by leveraging its robust capabilities and dynamic scalability. Register now to get started!

FAQs

- What are the databases supported by Amazon RDS that can be integrated into BigQuery?

Amazon RDS supports multiple relational databases, such as MySQL, PostgreSQL, and different databases through Amazon Aurora, Oracle, and SQL Server. All these databases are compatible with BigQuery and can be integrated with it.

- Can I perform transformations on data from Amazon RDS before loading it into BigQuery?

Yes, you can perform data transformations on Amazon RDS data before loading it into BigQuery to ensure compatibility. You can map Amazon RDS data types to the corresponding BigQuery data types, apply all calculations or business logic, and even handle all the missing and null values.

- Can I integrate more than one Amazon RDS instance with BigQuery?

Yes, you can integrate multiple Amazon RDS instances with BigQuery by setting up individual integration workflows for each instance. Data integration platforms such as Estuary Flow allow you to connect to different Amazon RDS instances and configure your data loading process accordingly.

Author

Popular Articles