What are your best options for using change data capture (CDC) in a data pipeline for analytics, operations, data science/ML, generative AI projects, or all of these projects at once?

This guide helps answer that question, both for a single project with a single destination, and as a broader platform across multiple projects and destinations.

It first summarizes the major challenges with CDC that you need to consider for CDC-based pipelines - including reliable capture, end-to-end schema evolution and change, snapshots and backfilling, the different types and speeds of loading data, and managing end-to-end data consistency.

This guide then compares leading vendors and technologies in detail before describing their strengths, weaknesses, and when to use them.

In the end, ELT, ETL, streaming integration, and replication vendors and technologies were included as the major options to consider:

- ELT: Fivetran was included to represent the category. Hevo, Airbyte, and Meltano were close enough to Fivetran that it didn’t make sense to cover each of them separately, so the Fivetran column effectively evaluates all of these vendors. If you’d like a more detailed comparison of Fivetran, Hevo, and Airbyte, you can read A Data Engineer’s Guide to Fivetran Alternatives.

- ETL: Estuary was the main option for real-time ETL. You could also consider some of the more traditional ETL vendors such as Informatica, Matillion, and Talend.

- Streaming integration: Striim is one of the leading vendors in this category. Compared to others, they’ve added the most data integration capabilities on top of their CDC technology.

- Replication: Debezium is the most widely used open source CDC option. There can’t be any CDC comparison without Debezium. GoldenGate and Amazon DMS deserve consideration as well (more on them below).

This guide evaluates each vendor for the main CDC use cases:

- Database replication - replication across databases for operational use cases

- Operational data store (ODS) - creating a store for read offloading or staging data

- Historical (traditional) analytics - loading a data warehouse

- Operational data integration - data synchronization across apps

- Data migration - retiring an old app or database and replacing it with a new one

- Stream processing and streaming analytics - real-time processing of streaming data

- Operational analytics - analytics or reporting for operational use cases

- Data science and machine learning - data mining, statistics, and data exploration

- AI - data pipelines for generative AI (new)

It also evaluates each vendor based on the following categories and features:

- Connectors - CDC sources, non-CDC sources, and destinations

- Core features - full/incremental snapshots, backfilling, delivery guarantee, time travel, schema evolution, DataOps

- Deployment options - public (multi-tenant) cloud, private cloud, and on-premises

- The “abilities” - performance, scalability, reliability, and availability

- Security - including authentication, authorization, and encryption

- Costs - ease of use, vendor costs, and other costs you need to consider

Use cases for CDC

You have two basic choices when selecting CDC technologies today. The first is to choose a technology purpose-built for a specific type of project. The second is to start building a common real-time data backbone to support all your projects.

There are great reasons, especially business reasons, for choosing either a specialized or a general-purpose CDC technology. The most important thing to understand is the strengths and weaknesses of each technology.

Where each vendor started is still one of the best ways to understand its strengths and weaknesses for different use cases, because most vendors remain well suited only to their original market.

Reviewing the history of CDC makes these strengths and weaknesses easy to see.

Replication

CDC was invented as a built-in feature for database-specific replication. Someone realized that the “write-ahead” transaction log file - which is used to save transactions made after a database snapshot and to recover after a database failure - was an ideal source of changes for replication. Just stream each write from the log as it happens and apply it to create an exact replica. It’s not only the most real-time option; it also puts the least load on the database itself.
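The replication idea above fits in a few lines of code. This is a minimal sketch, assuming each log entry has already been decoded into a hypothetical `ChangeEvent` with an integer log position (`lsn`), an operation, a primary key, and the new row image; real log formats and decoders differ by database.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical decoded log entry; field names are illustrative only."""
    lsn: int                        # position in the write-ahead log
    op: str                         # "insert", "update", or "delete"
    key: Any                        # primary key of the changed row
    row: Optional[Dict[str, Any]]   # new row image (None for deletes)


def apply_to_replica(replica: Dict[Any, Dict[str, Any]],
                     events: Iterable[ChangeEvent]) -> int:
    """Replay change events in log order onto a key -> row replica.

    Returns the log position of the last applied event, which a real
    replicator would persist so it can resume after a restart.
    """
    last_lsn = -1
    for ev in events:
        if ev.lsn <= last_lsn:
            raise ValueError("events must arrive in increasing log order")
        if ev.op == "delete":
            replica.pop(ev.key, None)
        elif ev.op in ("insert", "update"):
            replica[ev.key] = dict(ev.row or {})
        else:
            raise ValueError(f"unknown operation: {ev.op!r}")
        last_lsn = ev.lsn
    return last_lsn


replica: Dict[Any, Dict[str, Any]] = {}
log = [
    ChangeEvent(1, "insert", 1, {"id": 1, "status": "new"}),
    ChangeEvent(2, "insert", 2, {"id": 2, "status": "new"}),
    ChangeEvent(3, "update", 1, {"id": 1, "status": "shipped"}),
    ChangeEvent(4, "delete", 2, None),
]
last = apply_to_replica(replica, log)
# last == 4 and replica == {1: {"id": 1, "status": "shipped"}}
```

Because the replica only ever applies what the log already recorded, the source database does no extra query work, which is the low-load property described above.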

But database-specific CDC remained just that. Each vendor used it only to scale its own database deployments, not for general-purpose data sharing. In general, you can only create replicas of the same database; you can’t merge data from different databases, for example.

Decades of hardening built-in replication helped identify the best architecture for more general-purpose, cross-database replication. Today log-based CDC is mature and hardened. It is the most cost-effective, lowest-load way to extract real-time data from databases.

GoldenGate, founded in 1995, was the first major vendor to release general-purpose replication.

Operational data stores (ODS)

Companies started to use GoldenGate and similar vendors to create operational data stores (ODS): read-only stores with up-to-date operational data used for data sharing, offloading reads, and staging data for pipelines. Oracle acquired GoldenGate in 2009.

GoldenGate and similar replication vendors do not get their own column in the final comparison because they only support replication and ODS use cases. But if you’re only looking for a replication product and need to support Oracle as a source, GoldenGate should be on your short list.

Most data replication vendors like GoldenGate predate SaaS and DataOps. As a result, they are a little behind in both areas.

Historical analytics

CDC has been used for roughly 20 years now to load data warehouses, starting with ETL vendors. It is not only proven; it provides the lowest latency, least load on the source, and the greatest scalability for a data pipeline.

ETL vendors had their own staging areas for data, which allowed them to stream data from various sources, process it in batch, and then load destinations at batch intervals. Historically, data warehouses preferred batch loading because they are columnar stores. (A few, like Teradata, Vertica, or Greenplum, could also ingest data in real time while supporting fast query times.)

The original ETL vendors like Informatica continue to evolve; Informatica was one of the first to release a cloud offering (Informatica Cloud). Other competitors to consider include Talend, now part of Qlik, and Matillion. These three and many smaller vendors are good options for ETL, especially for on-premises data warehouses, data migration, and operational data integration (see below), and they offer solid CDC support. But they are missing some of the more recent CDC innovations, including incremental snapshots and backfilling, as well as modern data pipeline features such as schema evolution and DataOps support.

Modern ELT vendors like Fivetran, Hevo, and Airbyte implemented CDC-based replication to support cloud data warehouse projects. But these ELT vendors don’t have an intermediate staging area. They need to extract and load at the same interval.

As a result, ELT vendors are batch by design, and they ended up implementing CDC in “batch” mode. That makes ELT poorly suited to real-time use cases, and that includes CDC.

CDC is meant to be real-time. It’s designed to capture change data in real time from a transaction log or write-ahead log, which we’ll refer to as the WAL. CDC uses the WAL as a buffer when reads are delayed; it was never intended to support large-scale buffering for batch-based reads. Letting the WAL grow not only adds latency. It also consumes the database’s limited resources, which under load can slow performance or, in some cases, even cause the database to fail.

Make sure you understand each vendor’s CDC architecture and limitations like this, and evaluate whether it will meet your needs. If you’d like to learn more, read Change Data Capture (CDC) Done Correctly.
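A back-of-the-envelope estimate shows why batch-style reads strain the WAL: whatever the database writes between two reads has to stay in the log until the reader catches up. (In PostgreSQL, for example, a logical replication slot retains WAL until its consumer confirms it.) This sketch assumes a steady write rate; the 5 MB/s figure and the intervals are hypothetical, chosen only for illustration.

```python
def wal_retained_bytes(write_rate_bytes_per_s: float, read_interval_s: float) -> float:
    """Approximate WAL that must be retained between two consecutive CDC reads,
    assuming a constant write rate (a simplification; real workloads are bursty)."""
    if write_rate_bytes_per_s < 0 or read_interval_s < 0:
        raise ValueError("rates and intervals must be non-negative")
    return write_rate_bytes_per_s * read_interval_s


WRITE_RATE = 5_000_000  # hypothetical: 5 MB/s of WAL generated by the source

streaming = wal_retained_bytes(WRITE_RATE, 1)             # reader about 1 s behind
every_15_min = wal_retained_bytes(WRITE_RATE, 15 * 60)    # 15-minute batch reads
hourly = wal_retained_bytes(WRITE_RATE, 60 * 60)          # hourly batch reads

for label, size in [("streaming", streaming),
                    ("15-minute batch", every_15_min),
                    ("hourly batch", hourly)]:
    print(f"{label:>16}: {size / 1e9:7.3f} GB retained")
```

A delayed or failed batch read makes this worse, since the log keeps growing until the reader resumes.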

Operational Data Integration and Data Migration

While ETL vendors started as tools for loading data warehouses, they quickly ended up with three major use cases: data warehousing (analytics), operational data integration (data synchronization), and data migration. By 2010 ETL vendors were also using CDC across all three use cases.

Data migration is very similar to operational data integration, so the two are discussed together here.

ELT vendors are typically not used for operational integration or data migration because both require real-time data movement and ETL.

Stream Processing and Streaming Analytics

Around the same time replication and ETL were evolving, messaging and event processing software was being used for various real-time control systems and applications. Technologies started to develop on top of messaging, in many different variants. Collectively you could call all these technologies stream processing and streaming analytics; Gartner used the term operational intelligence. These technologies have always been more complex than ETL or ELT.

- Stream processing first became more popular around 2005 under the name complex event processing (CEP), though it was more generally known as stream processing. Following the creation of Apache Kafka in 2011, technologies like Apache Samza and Flink started to emerge in this category.

- Streaming analytics, which was more directly tied to BI and analytics, was another variant. Many streaming analytics vendors used rules engines or machine learning techniques to look for patterns in data.

Several of the people involved with GoldenGate went on to found Striim in 2012 to focus on using CDC for replication, stream processing, and analytics. Over time they added connectors to support integration use cases.

But Striim remains more complex than ELT and ETL tools, with its proprietary SQL-like language (TQL) and streaming concepts like windowing. This complexity is what mostly limits Striim to stream processing and sophisticated real-time use cases.

Operational analytics

Messaging software started to evolve to support operational analytics over two decades ago. In 2011, what started as a project at LinkedIn became Apache Kafka, and people quickly started using Kafka to support real-time operational analytics use cases. Today, if you look at the many high-performance databases used for sub-second analytics in operations - ClickHouse, Druid/Imply, Elasticsearch, Firebolt, Pinot/StarTree, Materialize, RisingWave, the database formerly known as Rockset - Kafka is collectively the most popular way to load them.

Modern Real-time Data Pipelines

Ever since the rise of modern technology giants, including Facebook, Amazon, Apple, Netflix, and Google (collectively known as FAANG), we’ve watched their architectures, read their papers, and tried out their open source projects to get a glimpse into the future. It’s not quite the same; the early versions of these technologies are often too complex for many companies to adopt. But those first technologies eventually get simplified. This includes cloud computing, Hadoop/Spark, BigQuery and Snowflake, Kafka, stream processing, and yes, modern CDC.

Over the last decade, CDC has been changing to support modern streaming data architectures. By 2012, people had also started talking about turning the database inside out into streams of change data, with projects like Apache Samza. Netflix and others built custom replication frameworks whose lessons led to modern CDC frameworks like Debezium and Gazette. Both have started to simplify initial (incremental) snapshotting and add modern pipeline (DataOps) features, including schema evolution.

All of these companies have had real-time backbones for a decade that stream data from many sources to many destinations. They use the same backbone for operations, analytics, data science and ML, and generative AI. These technologies continue to evolve into the core of modern real-time data pipelines.

Shared streaming data pipelines are becoming common in operations, and they’re working their way into analytics. They’re already more the norm for newer AI projects.

- Tens of thousands of companies use Kafka and other messaging technologies in operations.

- Kafka is one of the most popular ways to load BigQuery, Databricks, and Snowflake, to name just a few destinations, and their streaming support has improved. Ingestion latency has dropped into the low seconds at scale.

- Spark and Databricks, BigQuery, and Snowflake are increasingly used for operations now. Just look at their case studies and conference sessions. Query performance continues to improve, though it’s not quite sub-second at scale.

- Some high-performance OLAP databases have as much as 50% of their deployments using streaming ingestion, especially via Kafka.

- AI projects are accelerating the shift. Generative AI use cases support operations, and they are driving more destinations for data.

You can either choose replication, ELT, or streaming technologies for specific use cases, or you can start using this latest round of technologies to build a modern real-time data pipeline as a common backbone that shares data across operations, analytics, data science/ML, and AI.

The requirements for a common modern data pipeline are relatively well defined. They combine traditional requirements for operational data integration with newer data engineering best practices, and include support for:

- Real-time and batch sources and destinations, including extracting data with real-time CDC and batch-loading data warehouses. If you want to learn why CDC should be real-time, you can read Change Data Capture (CDC) Done Correctly.

- Many destinations at the same time with only one extract per source. Most ETL tools support only one destination, which means you need to re-extract for each destination.

- A common intermediate data model for mapping source data in different ways to destinations.

- Schema evolution and automation to minimize pipeline disruption and downtime. This is in addition to modern DataOps support, which is a given.

- Transformations for those destinations that do not support dbt or other types of transformations.

- Stream-store-and-forward to guarantee exactly-once extraction, guaranteed delivery, and data reuse. Kafka is the most common backbone today, but it is missing services for backfilling, time travel, and updating data during recovery or schema evolution. Kafka also does not keep data indefinitely by default (topic retention defaults to seven days). You can either build these services along with a data lake or get some of them as part of your pipeline technology.

These features are not required for individual projects, though a few are nice to have. They only become really important once you have to support multiple projects.
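The “many destinations, one extract” and stream-store-and-forward requirements can be sketched in memory. This is a minimal illustration under stated assumptions: `ChangeLog` stands in for a durable store (for example a Kafka topic plus long-term storage, or a pipeline’s own storage), and `Destination`, with its per-destination offset and transform, is a hypothetical name rather than any vendor’s API.

```python
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


class ChangeLog:
    """Hypothetical durable store for one source's change events.

    Events are extracted from the source once and appended here;
    destinations read from the store, never from the source directly.
    """

    def __init__(self) -> None:
        self.events: List[Event] = []
        self.source_reads = 0  # how many times the source database was read

    def extract(self, changes: List[Event]) -> None:
        self.source_reads += 1
        self.events.extend(changes)


class Destination:
    """Hypothetical destination with its own read position and mapping."""

    def __init__(self, name: str,
                 transform: Callable[[Event], Event] = lambda e: e) -> None:
        self.name = name
        self.transform = transform  # lets each destination receive different data
        self.offset = 0             # index of the next stored event to deliver
        self.rows: List[Event] = []

    def sync(self, log: "ChangeLog") -> None:
        # A destination added later starts at offset 0, so it backfills
        # from the store instead of triggering a new source extract.
        for event in log.events[self.offset:]:
            self.rows.append(self.transform(event))
        self.offset = len(log.events)


log = ChangeLog()
log.extract([{"id": 1, "email": "a@example.com"},
             {"id": 2, "email": "b@example.com"}])

warehouse = Destination("warehouse")
warehouse.sync(log)

# Added later, with a different mapping that drops the email field.
vector_db = Destination("vector_db", transform=lambda e: {"id": e["id"]})
vector_db.sync(log)
```

The design choice to keep an offset per destination, rather than per source, is what lets a new destination backfill without touching the source and lets each destination receive differently shaped data.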

Comparison Criteria

Now that you understand the different use cases and their history, and the concept of a modern data pipeline, you can start to evaluate different vendors.

The detailed comparison below covers the following categories:

Use cases - Over time, you will end up using data integration for most of these use cases. Make sure you look across your organization for current and future needs. Otherwise you might end up with multiple data integration technologies, and a painful migration project.

- Replication - Read-only and read-write load balancing of data from a source to a target for operational use cases. CDC vendors are often used in cases where built-in database replication does not work.

- Operational data store (ODS) - Real-time replication of data from many sources into an ODS for offloading reads or staging data for analytics.

- Historical analytics - The use of data warehouses for dashboards, analytics, and reporting. CDC-based ETL or ELT is used to feed the data warehouse.

- Operational data integration - Synchronizing operational data across apps and systems, such as master data or transactional data, to support business processes and transactions.

NOTE: none of the vendors in this evaluation support many apps as destinations. They only support data synchronization for the underlying databases.

- Data migration - This usually involves extracting data from multiple sources, building the rules for merging data and data quality, testing it out side-by-side with the old app, and migrating users over time. The data integration vendor used becomes the new operational data integration vendor.

NOTE: like operational data integration, the vendors only support database destinations.

- Stream processing - Using streams to capture and respond to specific events

- Operational analytics - The use of data in real-time to make operational decisions. It requires specialized databases with sub-second query times, and usually also requires low end-to-end latency with sub-second ingestion times as well. For this reason the data pipelines usually need to support real-time ETL with streaming transformations.

- Data science and machine learning - This generally involves loading raw data into a data lake that is used for data mining, statistics and machine learning, or data exploration including some ad hoc analytics. For data integration vendors this is very similar to data warehousing.

- AI - the use of large language models (LLMs) or other artificial intelligence and machine learning models to do anything from generating new content to automating decisions. This usually involves different data pipelines for model training and model execution.

Connectors - The ability to connect to sources and destinations in batch and real-time for different use cases. Most vendors have so many connectors that the best way to evaluate vendors is to pick your connectors and evaluate them directly in detail.

- Number of connectors - The number of source and target connectors. What’s important is the number of high-quality and real-time connectors, and that the connectors you need are included. Make sure to evaluate each vendor’s specific connectors and their capabilities for your projects. The devil is in the details.

- CDC sources - Does the vendor support your required CDC sources now for current and future projects?

- Destinations - Does the vendor support all the destinations that need the source data, or will you need to find another way to load for select projects?

- Non-CDC - when needed for batch-only destinations or to lower costs, is there an option to use batch loading into destinations?

- Support for 3rd party connectors - Is there an option to use 3rd party connectors?

- CDK - Can you build your own connectors using a connector development kit (CDK)?

- API - Is an admin API available to help integrate and automate pipelines?

Core features - How well does each vendor support core data features required to support different use cases? Source and target connectivity are covered in the Connectors section.

- Batch and streaming support - Can the product support streaming, batch, and both together in the same pipeline?

- Transformations - What level of support is there for streaming and batch ETL and ELT? This includes streaming transforms, and incremental and batch dbt support in ELT mode. What languages are supported? How do you test?

- Delivery guarantees - Is delivery guaranteed to be exactly once, and in order?

- Data types - Support for structured, semi-structured, and unstructured data types.

- Backfilling - The ability to add historical data when integration starts, or later when new targets are added.

- Time travel - The ability to review or reuse historical data without going back to sources.

- Schema drift - support for tracking schema changes over time and handling them automatically.

- DataOps - Does the vendor support multi-stage pipeline automation?

Deployment options - does the vendor support public (multi-tenant) cloud, private cloud, and on-premises (self-deployed)?

The “abilities” - How does each vendor rank on performance, scalability, reliability, and availability?

- Performance (latency) - what is the end-to-end latency in real-time and batch mode?

- Scalability - Does the product provide elastic, linear scalability?

- Reliability - How does the product ensure reliability for real-time and batch modes? One of the biggest challenges, especially with CDC, is ensuring reliability.

Security - Does the vendor implement strong authentication, authorization, RBAC, and end-to-end encryption from sources to targets?

Costs - the vendor costs, and total cost of ownership associated with data pipelines

- Ease of use - The degree to which the product is intuitive and straightforward for users to learn, build, and operate data pipelines.

- Vendor costs - including total costs and cost predictability

- Labor costs - Amount of resources required and relative productivity

- Other costs - Including additional source, pipeline infrastructure or destination costs
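The delivery-guarantees criterion is easier to evaluate with a concrete case in mind. Many pipelines deliver at least once, so events can be redelivered after a failure; a common way to get effectively exactly-once results is to make the destination idempotent. This sketch assumes each change carries a monotonically increasing log position; `IdempotentSink` is a hypothetical name, and this is not how any particular vendor implements its guarantee.

```python
from typing import Any, Dict, Iterable, Optional, Tuple

# (log position, primary key, new value or None for a delete)
Change = Tuple[int, Any, Optional[Any]]


class IdempotentSink:
    """Hypothetical destination that turns at-least-once delivery into
    effectively exactly-once results by remembering the last applied position."""

    def __init__(self) -> None:
        self.table: Dict[Any, Any] = {}
        self.applied_lsn = -1  # highest log position already applied

    def apply(self, batch: Iterable[Change]) -> int:
        """Apply a batch in log order; return how many changes were new."""
        applied = 0
        for lsn, key, value in batch:
            if lsn <= self.applied_lsn:
                continue  # redelivered duplicate: already reflected in the table
            if value is None:
                self.table.pop(key, None)
            else:
                self.table[key] = value
            self.applied_lsn = lsn
            applied += 1
        # A real sink must commit `table` and `applied_lsn` atomically (for
        # example, in one transaction); a crash between the two breaks the guarantee.
        return applied


sink = IdempotentSink()
first = sink.apply([(1, "a", 10), (2, "b", 20)])
# Simulated retry: change 2 is delivered again alongside a new change 3.
second = sink.apply([(2, "b", 20), (3, "a", 11)])
```

When comparing vendors, the useful question is where this bookkeeping lives: in the vendor’s pipeline, in each destination, or in code you have to write.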

Comparison Matrix

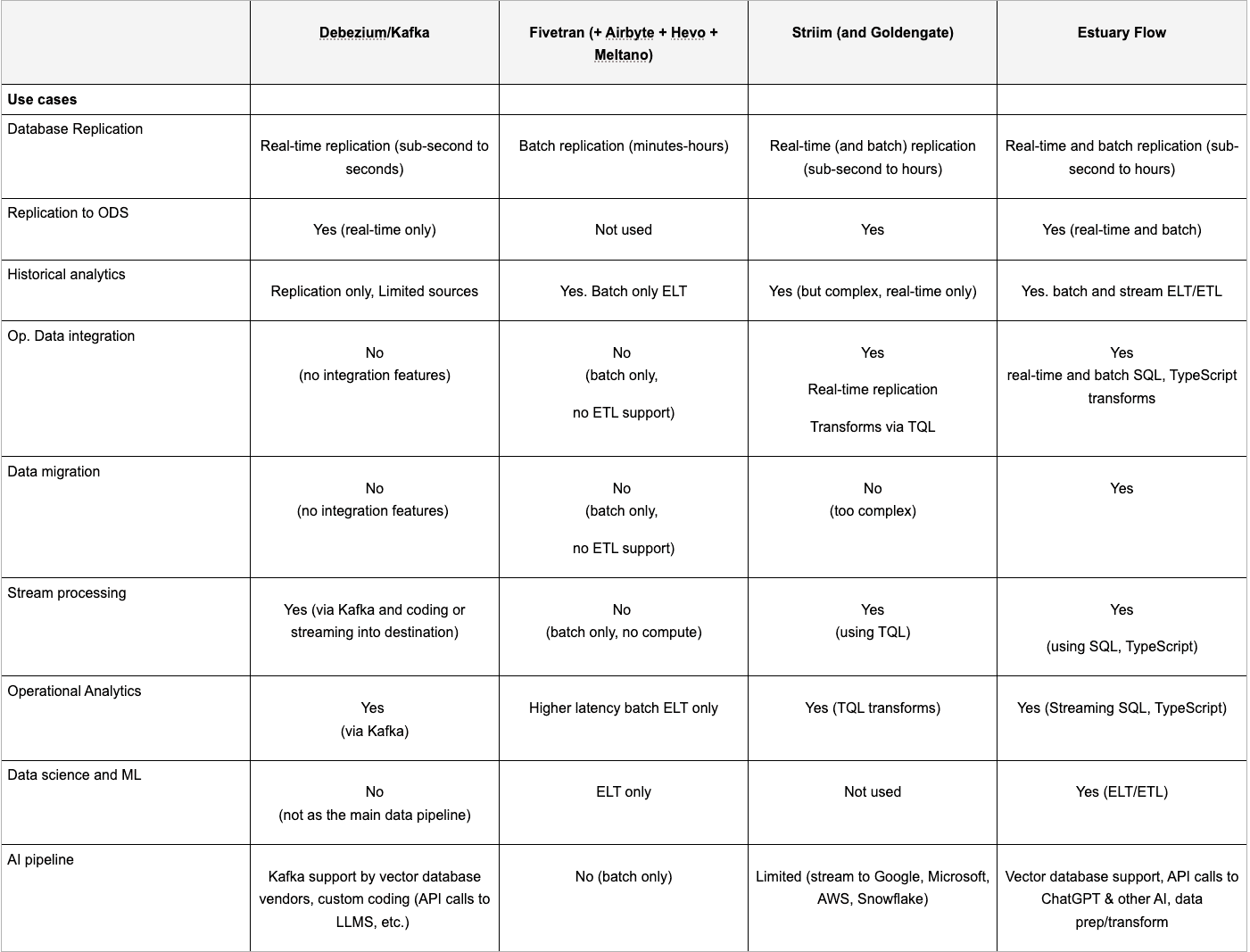

| | Debezium/Kafka | Fivetran (+ Airbyte + Hevo + Meltano) | Striim (and GoldenGate) | Estuary Flow |
|---|---|---|---|---|
| Use cases | | | | |
| Database replication | Real-time replication (sub-second to seconds) | Batch replication (minutes to hours) | Real-time (and batch) replication (sub-second to hours) | Real-time and batch replication (sub-second to hours) |
| Replication to ODS | Yes (real-time only) | Not used | Yes | Yes (real-time and batch) |
| Historical analytics | Replication only, limited sources | Yes (batch-only ELT) | Yes (but complex, real-time only) | Yes (batch and streaming ELT/ETL) |
| Operational data integration | No | No (no ETL support) | Yes (real-time replication, transforms via TQL) | Yes |
| Data migration | No | No (no ETL support) | No | Yes |
| Stream processing | Yes (via Kafka and coding, or streaming into a destination) | No | Yes | Yes (using SQL, TypeScript) |
| Operational analytics | Yes | Higher-latency batch ELT only | Yes (TQL transforms) | Yes (streaming SQL, TypeScript) |
| Data science and ML | No | ELT only | Not used | Yes (ELT/ETL) |
| AI pipelines | Kafka support by vector database vendors, custom coding (API calls to LLMs, etc.) | No (batch only) | Limited (stream to Google, Microsoft, AWS, Snowflake) | Vector database support, API calls to ChatGPT and other AI, data prep/transform |
| Connectors | | | | |
| Number of connectors | 100+ Kafka sources and destinations (via Confluent, vendors) | <300 connectors | 100+ | 150+ |
| CDC connectors (sources) | DB2, Cassandra, MongoDB, MySQL, Oracle, Postgres, Spanner, SQL Server, TimescaleDB (Postgres) | MySQL, SQL Server, Postgres, Oracle. Single target only. Batch CDC only. | CosmosDB, MariaDB, MongoDB, MySQL, Oracle, Postgres, SQL Server | AlloyDB, Firestore, MySQL, Postgres, MariaDB, MongoDB, Oracle, Salesforce, Snowflake, SQL Server |
| Destinations | Kafka ecosystem (indirect): data warehouses, OLAP databases, time series databases, etc. | https://fivetran.com/docs/destinations (data warehouses, databases above, SingleStore, Materialize, Motherduck) | https://www.striim.com/docs/en/targets.html | https://estuary.dev/integrations/ |
| Non-CDC connectors | Kafka ecosystem | Batch only | No | 150+ batch and real-time connectors incl. |
| Support for 3rd-party connectors | Kafka ecosystem | No | No | Support for 500+ Airbyte, Stitch, and Meltano connectors |
| Custom SDK | Kafka Connect | Yes | No | Yes |
| API | Kafka API | Yes | No | Yes (Estuary API docs) |
| Core features | | | | |
| Batch and streaming (extract and load) | Streaming-centric (subscribers pick up at intervals) | Batch only | Streaming-centric but can do incremental batch | Can mix streaming and batch in the same pipeline |
| Snapshots | Full or incremental | Full only | Incremental | Incremental |
| ETL transforms | Coding (SMT) | None | TQL transforms | SQL and TypeScript (Python planned) |
| Workflow | Coding or 3rd party (including OSS) | None | Striim Flows | Many-to-many pub-sub ETL (SQL, TypeScript) |
| ELT transforms | No | ELT only with | dbt | dbt, with integrated orchestration |
| Delivery guarantees | At least once | Exactly once (Fivetran), at least once (Airbyte) | At least once for most destinations | Exactly once (streaming, batch, mixed) |
| Multiple destinations | Yes (identical data by topic) | No | Yes (same data, but different flows) | Yes (different data per destination) |
| Backfilling | Yes (requires re-extract for each destination) | Yes (requires re-extract for each destination) | Yes (requires re-extract for new destinations) | Yes (extract once, backfill multiple destinations) |
| Time travel | No | No | No | Yes |
| Schema inference and drift | Message-level schema evolution (Kafka Schema Registry), with limits by source and destination | Good schema inference, automated schema evolution | Yes, with some limits by destination | Good schema inference, automated schema evolution |
| DataOps support | CLI, API | CLI | CLI, API | CLI |
| Deployment options | Open source, Confluent Cloud (public) | Public cloud, private cloud (Airbyte, Meltano OSS) | On-premises, private cloud, public cloud | Open source, private cloud, public cloud |
| The abilities | | | | |
| Performance (minimum latency) | <100 ms | 15 minutes (Enterprise), 1 minute (Business Critical) | <100 ms | <100 ms (in streaming mode) |
| Scalability | High | Medium-high | High (GB/sec) | High |
| Reliability | High (Kafka) | Medium-high; issues with Fivetran CDC | High | High |
| Security | | | | |
| Data source authentication | SSL/SSH | OAuth / HTTPS / SSH / SSL / API tokens | SAML, RBAC, SSH/SSL, VPN | OAuth 2.0 / API tokens |
| Encryption | Encryption in motion (Kafka for topic security) | Encryption in motion | Encryption in motion | Encryption at rest and in motion |
| Support | Low (Debezium community) | Medium | High | High |
| Costs | | | | |
| Ease of use | Hard | Easy-to-use connectors | Medium-hard | Easy to use |
| Vendor costs | Low for OSS | High | High | Low |
| Data engineering costs | High (requires custom development for destinations) | Low-medium | High (requires a proprietary SQL-like language, TQL, and engineering) | Low-medium |
| Admin costs | High (OSS infrastructure management) | Medium-high | High | Low |

Debezium

Debezium started within Red Hat following the release of Kafka and Kafka Connect. It was inspired in part by Martin Kleppmann’s presentations on CDC and turning the database inside out.

Debezium is the most widely used open source option for general-purpose replication, and it does many things right for replication, from scaling to incremental snapshots (make sure you use the incremental snapshotting described in Debezium design document DDD-3, not whole snapshotting). If you are committed to open source, have the specialized resources needed, and need to build your own pipeline infrastructure for scalability or other reasons, Debezium is a great choice.

Otherwise think twice about using Debezium because it will be a big investment in specialized data engineering and admin resources. While the core CDC connectors are solid, you will need to build the rest of your data pipeline including:

- The many non-CDC source connectors you will eventually need. You can leverage the Kafka Connect-based connectors to over 100 different sources and destinations, but they have a long list of limitations (see the Confluent documentation on limits).

- Data schema management and evolution. While the Kafka Schema Registry supports message-level schema evolution, the many destination-specific limitations and the translation from source schemas to messages make this much harder to manage.

- Kafka does not keep your data indefinitely by default (topic retention defaults to seven days), and there is no replay/backfilling service that manages previous snapshots and lets you reuse them or do time travel. You will need to build those services.

- Backfilling and CDC happen on the same topic, so if you need to redo a snapshot, all destinations will get it. To change this behavior, you need separate source connectors and topics for each destination, which adds costs and source load.

- You will need to maintain your Kafka cluster(s), which is no small task.
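To make the schema-management point concrete, here is a minimal sketch of what a pipeline has to automate somewhere between sources and destinations: infer a schema from incoming records, detect drift, and widen the schema instead of failing. The functions are hypothetical and use plain Python type names; they are not the Kafka Schema Registry API, which works with registered Avro, Protobuf, or JSON Schema definitions and compatibility rules.

```python
from typing import Any, Dict, Iterable, List, Set

Schema = Dict[str, Set[str]]  # field name -> observed Python type names


def infer_schema(records: Iterable[Dict[str, Any]]) -> Schema:
    """Hypothetical helper: infer a loose schema from JSON-like records."""
    schema: Schema = {}
    for record in records:
        for field, value in record.items():
            schema.setdefault(field, set()).add(type(value).__name__)
    return schema


def detect_drift(current: Schema, incoming: Schema) -> Dict[str, List[str]]:
    """Report fields that are new and fields whose observed types widened."""
    added = sorted(set(incoming) - set(current))
    widened = sorted(
        field for field in set(current) & set(incoming)
        if incoming[field] - current[field]
    )
    return {"added": added, "widened": widened}


def evolve(current: Schema, incoming: Schema) -> Schema:
    """Merge schemas by widening: keep every field and every observed type."""
    merged: Schema = {field: set(types) for field, types in current.items()}
    for field, types in incoming.items():
        merged.setdefault(field, set()).update(types)
    return merged


v1 = infer_schema([{"id": 1, "name": "Ada"}])
v2 = infer_schema([{"id": "A-1", "name": "Grace", "email": "g@example.com"}])
drift = detect_drift(v1, v2)   # {'added': ['email'], 'widened': ['id']}
schema = evolve(v1, v2)        # "id" is now {'int', 'str'}
```

Each destination then has to map a widened field like `id` onto something it supports, which is where the destination-specific limits mentioned above come in.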

If you are already invested in Kafka as your backbone, it does make good sense to evaluate Debezium. Using Confluent Cloud does simplify your deployment, but at a cost.
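The incremental snapshots recommended in the Debezium discussion avoid one long full-table read by snapshotting in chunks while the log keeps streaming. This sketch shows the core merge step of the watermark approach described in Netflix’s DBLog work, which Debezium’s incremental snapshots build on. It is simplified under stated assumptions: a chunk is read between a low and a high watermark, `window_events` are the log events seen between those watermarks, and `merge_snapshot_chunk` is a hypothetical name.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]
Event = Tuple[str, Any, Any]  # (operation, primary key, row or None)


def merge_snapshot_chunk(chunk: Dict[Any, Row],
                         window_events: List[Event]) -> List[Event]:
    """Hypothetical merge of one snapshot chunk with concurrent log events.

    chunk: rows selected (by primary key) between the low and high watermarks.
    window_events: change events seen in the log between those watermarks.
    Returns the events to emit downstream, in order.
    """
    pending = dict(chunk)
    output: List[Event] = []
    for op, key, row in window_events:
        # The log event is at least as new as the chunk's copy of this row,
        # so the snapshot copy is dropped to avoid overwriting newer data.
        pending.pop(key, None)
        output.append((op, key, row))
    # At the high watermark, the surviving snapshot rows are emitted as reads.
    for key in sorted(pending):
        output.append(("read", key, pending[key]))
    return output


chunk = {1: {"v": "a"}, 2: {"v": "b"}, 3: {"v": "c"}}
window = [("update", 2, {"v": "B"})]
events = merge_snapshot_chunk(chunk, window)
# [('update', 2, {'v': 'B'}), ('read', 1, {'v': 'a'}), ('read', 3, {'v': 'c'})]
```

Because each chunk only needs a short read, the snapshot can be paused, resumed, or restarted for one table without stopping the change stream.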

Fivetran

Fivetran is listed here as the main ELT vendor. But this section is meant to represent Fivetran, Airbyte, Hevo, Meltano, Stitch, and other ELT vendors. For more on the other vendors including Airbyte and Hevo you can read A Data Engineer’s Guide to Fivetran Alternatives.

If you are only loading a cloud data warehouse, you should evaluate Fivetran and other ELT vendors. They are easy-to-use, mature options.

If you want to understand and evaluate Fivetran, it’s important to know Fivetran’s history. It will help you understand Fivetran’s strengths and weaknesses relative to other vendors, including other ELT vendors.

Fivetran was founded in 2012 by data scientists who wanted an integrated stack to capture and analyze data. The name was a play on Fortran and meant to refer to a programming language for big data. After a few years the focus shifted to providing just the data integration part because that’s what so many prospects wanted. Fivetran was designed as an ELT (Extract, Load, and Transform) architecture because in data science you don’t usually know what you’re looking for, so you want the raw data.

In 2018, Fivetran raised its Series A. It added more transformation capabilities in 2020 when it released dbt (data build tool) support, and it started to support CDC that same year. Fivetran has since continued to invest in CDC with its HVR acquisition.

Fivetran’s design worked well for many companies adopting cloud data warehouses starting a decade ago. While all ETL vendors also supported “EL” and it was occasionally used that way, Fivetran was cloud-native, which helped make it much easier to use. The “EL” is mostly configured, not coded, and the transformations are built on dbt core (SQL and Jinja), which many data engineers are comfortable using.

But today Fivetran often comes up in conversations as a vendor customers are trying to replace. Understanding why can help you understand Fivetran’s limitations.

The most common points that come up in these conversations and online forums are about needing lower latency, improved reliability, and lower, more predictable costs:

- Latency: While Fivetran uses change data capture at the source, it is batch CDC, not streaming. The Enterprise tier’s minimum latency is 15 minutes. The Business Critical tier lowers it to 1 minute but costs more than 2x the standard edition. The actual latency in production is usually much longer anyway, often slowed down by destination load and transformation times.

- Costs: Another major complaint is Fivetran’s high vendor costs, which customers have reported as anywhere from 2-10x the cost of Estuary. Fivetran costs are based on monthly active rows (MAR), rows that change at least once per month. This may seem low, but for several reasons (see below) it can quickly add up.

Lower latency is also very expensive. Reducing latency from 1 hour to 15 minutes can cost 33-50% more (up to 1.5x) per million MAR, and reducing it to 1 minute can cost 100% (2x) more or beyond. Even then, you still have the latency of the data warehouse load and transformations, and frequent ingestions and transformations in the data warehouse add their own cost and time. Companies often keep latency high to save money.

- Unpredictable costs: Another major reason for high costs is that MARs are based on Fivetran’s internal representation of rows, not rows as you see them in your source.

For some data sources you have to extract all the data across tables, which can mean many more rows. Fivetran also converts data from non-relational sources such as SaaS apps into highly normalized relational data. Both can make MARs and costs soar unexpectedly.

- Reliability: Another reason for replacing Fivetran is reliability. Customers have struggled with a combination of load-failure alerts and subsequent support calls that lead to longer times to resolution. There have been several complaints about reliability with MySQL and Postgres CDC, due in part to Fivetran’s use of batch CDC. Fivetran also had a 2.5-day outage in 2022. Make sure you understand Fivetran’s current SLA in detail. Fivetran has had an “allowed downtime interval” of 12 hours before downtime SLAs go into effect for connectors, and its SLAs do not include any downtime from its cloud provider.

- Support: Customers also complain about Fivetran support being slow to respond. Combined with reliability issues, this can lead to a substantial amount of data engineering time being lost to troubleshooting and administration.

- DataOps: Fivetran provides little control over, or transparency into, what it does with data and schemas. It alters field names, changes data structures, and does not allow you to rename columns. This can make it harder to migrate to other technologies. Fivetran also doesn’t always bring in all the data, depending on the data structure, and does not explain why.

- Roadmap: Customers frequently comment that Fivetran reveals less about its future direction or roadmap than the others in this comparison, and that it does not adequately address many of the above points.
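To see how normalization can inflate row counts, the following Python sketch counts the rows produced when one nested SaaS object is split into a parent table plus child tables for its nested lists. The normalization scheme and the `invoice` object are hypothetical; this is not Fivetran’s actual schema mapping or MAR calculation, only an illustration of how one source object can become many rows.

```python
from typing import Any


def count_normalized_rows(record: dict[str, Any]) -> int:
    """Count rows produced by naively normalizing one nested record.

    Hypothetical scheme: the record becomes one parent row, and every
    element of a nested list becomes a row in a child table (recursively).
    """
    rows = 1  # the parent row itself
    for value in record.values():
        if isinstance(value, list):
            for item in value:
                if isinstance(item, dict):
                    rows += count_normalized_rows(item)  # child row plus its own children
                else:
                    rows += 1  # scalar list element -> one child row
    return rows


# One hypothetical SaaS "invoice" object as a source API might return it.
invoice = {
    "id": "inv_001",
    "customer": "acme",
    "line_items": [
        {"sku": "A", "qty": 1, "discounts": [{"code": "X"}]},
        {"sku": "B", "qty": 2, "discounts": []},
        {"sku": "C", "qty": 5, "discounts": [{"code": "Y"}, {"code": "Z"}]},
    ],
    "tags": ["priority", "q3"],
}

print(count_normalized_rows(invoice))  # 9
```

Under this scheme one invoice becomes 9 rows. If a change to the invoice touches all of them, a row-based pricing model could count 9 active rows where you might have expected 1.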

Striim

Pronounced “Stream”, Striim is a replication and stream processing vendor that has since moved into data integration.

Several of the people involved with GoldenGate moved on to found Striim in 2012 to focus on using CDC for replication, stream processing, and analytics. Over time they added connectors to support integration use cases.

If you need replication plus more complex stream processing, Striim is a great option and a proven vendor. It has some of the best CDC around, including its Oracle support. It also has the scale of Debezium and Estuary for moving data. If you have the skill sets to do stream processing, it can also be a good option for supporting both stream processing and data integration use cases.

But its origins in stream processing are its weakness for data integration use cases.

- ELT tools are much easier to learn and use than Striim. This comes up during evaluations.

- Tungsten Query Language (TQL) is a SQL-like language designed for stream processing. While it’s very powerful, it’s not as simple as SQL.

- Striim Flows are a great graphical tool for stream processing, but they take time to learn, and you need to build flows (or use TQL) for each capture. This makes Striim much more complex than ELT vendors for building CDC flows from sources to a destination.

- While Striim can persist streams to Kafka for recovery, and that integration is built in, it does not support permanent storage. There is no mechanism for backfilling destinations at a later time without taking a new snapshot. As a result, newer destinations can lack older change data, or older change data can be lost.

Striim is a great option for stream processing and related real-time use cases. If you do not have the skill sets or the appetite to spend the extra time learning TQL and the intricacies of stream processing, an ELT/ETL vendor might make more sense.

Estuary

Estuary was founded in 2019. But the core technology, the Gazette open source project, has been evolving for a decade within the Ad Tech space, which is where many other real-time data technologies have started.

Estuary Flow is the only real-time CDC and ETL data pipeline vendor in this comparison.

While Estuary Flow is also a great option for batch sources and targets, and supports all Fivetran use cases, where it really shines is any combination of change data capture (CDC), real-time and batch ETL or ELT, across multiple sources and destinations.

Estuary Flow has a unique architecture: it streams and stores streaming or batch data as collections, which are transactionally guaranteed to deliver exactly once from each source to the target. With CDC, this means each record change is captured once, immediately, and is then available to multiple targets or for later use. Estuary Flow uses collections for transactional guarantees and for later backfilling, restreaming, transforms, or other compute. The result is the lowest load and latency for any source, and the ability to reuse the same data for multiple real-time or batch targets across analytics, apps, and AI, or for other workloads such as stream processing, or monitoring and alerting.
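The capture-once, read-many idea behind collections can be sketched as a durable append-only log where each destination keeps its own read offset. This is a conceptual toy model in Python, not Estuary’s API or storage format; the `Collection` class and the destination names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Collection:
    """Toy stand-in for a durable, append-only collection of change events.

    Hypothetical model: each change is captured once and kept; every
    destination tracks its own read offset, so a destination added later
    can backfill from the beginning without re-reading the source database.
    """
    events: list[dict] = field(default_factory=list)
    offsets: dict[str, int] = field(default_factory=dict)

    def append(self, event: dict) -> None:
        # Capture happens once per change, no matter how many destinations read it.
        self.events.append(event)

    def read(self, destination: str) -> list[dict]:
        # A destination seen for the first time starts at offset 0, i.e. a backfill.
        start = self.offsets.get(destination, 0)
        batch = self.events[start:]
        self.offsets[destination] = len(self.events)
        return batch


orders = Collection()
orders.append({"op": "insert", "id": 1})
orders.append({"op": "update", "id": 1})

warehouse_first = orders.read("warehouse")   # insert and update
orders.append({"op": "delete", "id": 1})
warehouse_second = orders.read("warehouse")  # only the delete
vector_db_first = orders.read("vector_db")   # added later: full history of 3 events
```

Because the source is read once and the log is retained, adding a destination later costs a read of the stored collection rather than a new snapshot of the source.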

Estuary Flow also supports the broadest packaged and custom connectivity. It has 150+ native connectors. While that number may seem low, these connectors are built for low latency and/or scale. Estuary is the only vendor here to support Airbyte, Meltano, and Stitch connectors as well, which easily adds 500+ more connectors. Getting official support for one of these connectors is a quick “request-and-test” with Estuary to make sure it supports the use case in production. Most of these open source connectors are not as scalable as Estuary-native or Fivetran connectors, so it’s important to confirm they will work for you. Its support for TypeScript, SQL, and Python (planned) also makes it the best vendor for custom connectivity when compared to Fivetran functions, for example.

Estuary is the lowest cost option. Cost of ownership, from lowest to highest, tends to be Estuary, Debezium, Striim, and Fivetran, especially for higher volume use cases. Estuary is the lowest cost mostly because it charges only $1 per GB of data moved from source to target, which is much less than the roughly $10-$50 per GB that other ELT/ETL vendors charge. Your biggest cost is generally your data movement.
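To make the per-GB comparison concrete, here is the arithmetic for a hypothetical 500 GB moved per month, using only the per-GB prices quoted above. It is a rough sketch: it ignores pricing tiers, minimums, and the warehouse compute costs discussed earlier.

```python
def monthly_cost(gb_moved: float, price_per_gb: float) -> float:
    """Data-movement cost for one month at a flat per-GB price."""
    return gb_moved * price_per_gb


volume_gb = 500  # hypothetical monthly volume moved from source to target

estuary = monthly_cost(volume_gb, 1.0)       # $1/GB, as stated above
others_low = monthly_cost(volume_gb, 10.0)   # low end of the quoted $10-$50/GB range
others_high = monthly_cost(volume_gb, 50.0)  # high end of the quoted range

print(f"Estuary: ${estuary:,.0f}")
print(f"Others:  ${others_low:,.0f} - ${others_high:,.0f}")
```

At these prices the gap scales linearly with volume, which is why the difference matters most for higher volume use cases.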

While open source is free, and for many it can make sense, you need to add the cost of vendor support, specialized skill sets, implementation, and maintenance to your total costs to compare. Without these skill sets, you will put your time to market and reliability at risk. Many companies will not have access to the right skill sets to make open source work for them. With Debezium, your main costs will be people, plus Confluent if you choose to go with SaaS or pay for support.

Honorable Mentions

There are a few other vendors you might consider outside of this short list.

GoldenGate

GoldenGate was the original replication vendor, founded in 1995. It is rock-solid replication technology that was acquired by Oracle in 2009. Unlike Striim, it does not support transforms, and it was built long before modern DataOps was even a twinkle in any data engineer’s eye. If you just need replication or are building an operational data store, it is arguably the best option for you, and the most expensive. It should be on your short list. GoldenGate also has a host of source and destination connectors.

You can also use it for replication (EL) into a low latency destination. But it is not well suited for other use cases. There is no support for transforms, and it does not support batch loading, which is what people often do with cloud data warehouses to reduce ingestion (compute) costs.
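Batch loading into a cloud data warehouse usually means buffering change events and loading them in fewer, larger batches rather than one at a time. The Python sketch below shows the basic size-or-age flush rule; `MicroBatcher` and `load_fn` are hypothetical names, and `load_fn` stands in for whatever bulk-load call your destination provides.

```python
import time
from typing import Callable, Optional


class MicroBatcher:
    """Buffer change events and flush them to a destination in batches.

    A minimal sketch: `load_fn` is a hypothetical stand-in for a bulk-load call
    (for example, a staged COPY into a warehouse). A batch is flushed when it
    reaches `max_rows` events or when its oldest event is `max_age_s` old.
    """

    def __init__(self, load_fn: Callable[[list], None], max_rows: int = 1000,
                 max_age_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.load_fn = load_fn
        self.max_rows = max_rows
        self.max_age_s = max_age_s
        self.clock = clock
        self.buffer: list = []
        self.first_at: Optional[float] = None

    def add(self, event) -> None:
        if not self.buffer:
            self.first_at = self.clock()  # a batch's age starts at its first event
        self.buffer.append(event)
        too_big = len(self.buffer) >= self.max_rows
        too_old = self.clock() - self.first_at >= self.max_age_s
        if too_big or too_old:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.load_fn(self.buffer)  # one load per batch instead of one per change
            self.buffer = []
            self.first_at = None


# Usage with a fake clock so the behavior is deterministic.
loads: list = []
now = [0.0]
batcher = MicroBatcher(loads.append, max_rows=3, max_age_s=10.0, clock=lambda: now[0])
for i in range(4):
    batcher.add({"id": i})  # ids 0-2 flush as one batch; id 3 stays buffered
now[0] = 15.0
batcher.add({"id": 4})      # the batch is now 15s old, so ids 3-4 flush together
```

In this sketch the age check only runs when a new event arrives; a production loader would also flush on a background timer so a quiet source does not leave data sitting in the buffer.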

Amazon DMS

DMS stands for Data Migration Service, not CDC service. So if you’re thinking about using it for general-purpose CDC … think really carefully.

In 2016 Amazon released Data Migration Service (DMS) to help migrate on-prem databases to AWS services. In 2023 Amazon released DMS Serverless, which basically removes a lot of the pain of managing DMS.

DMS is a great data migration service for moving to Aurora, DynamoDB, RDS, Redshift, or an EC2-hosted database. But it’s not for general-purpose change data capture (CDC).

Whenever you use something outside of its intended use, you can expect to get some weird behaviors and errors. Look at the forums for DMS issues, then look at what they’re trying to use it for, and you’ll start to see a pattern.

By many accounts, DMS was built using an older version of Attunity. If you look at how DMS works and compare it to Attunity, you can see the similarities. That should tell you some of its limitations as well.

Here are some specific reasons why you shouldn’t use DMS for general-purpose CDC:

- You’re limited to CDC database sources and Amazon database targets. DMS requires using a VPC for all targets. You also can’t do some cross-region migrations, such as with DynamoDB. If you need more than this for your data pipeline, you’ll need to add something else.

NOTE: Serverless is even more restricted, so make sure to check the limitations pages.

- The initial full load copies each source table as it exists at that point in time. Changes made to the table during the load are cached and applied before the task switches to CDC-based replication. This is old-school Attunity. And it’s not great because it requires table locks, which put load on the database at scale.

- You’re limited in scale. For example, replication instances have 100GB limits on memory, which is harder to work around for a single table. You end up having to manually break a table up into smaller segments. Search for limitations and workarounds. It will save you time.
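Breaking a large table into smaller segments is often done by primary-key ranges. The Python sketch below splits an integer key range into contiguous, non-overlapping segments; `key_range_segments` is a hypothetical helper, not a DMS API, and it assumes an integer key that is roughly evenly distributed. How you feed the ranges into your load tasks depends on your setup.

```python
def key_range_segments(min_key: int, max_key: int, segments: int) -> list[tuple[int, int]]:
    """Split an integer primary-key range into contiguous, non-overlapping segments.

    Each tuple is (start_inclusive, end_inclusive). Hypothetical helper: you would
    use the ranges to load one large table as several smaller pieces.
    """
    if segments < 1 or max_key < min_key:
        raise ValueError("need segments >= 1 and max_key >= min_key")
    total = max_key - min_key + 1
    size, remainder = divmod(total, segments)
    ranges = []
    start = min_key
    for i in range(segments):
        # Spread the remainder over the first segments so sizes differ by at most 1.
        end = start + size + (1 if i < remainder else 0) - 1
        if end >= start:  # skip empty segments when there are more segments than keys
            ranges.append((start, end))
        start = end + 1
    return ranges


# Example: WHERE clauses for a hypothetical "orders" table keyed by "id".
for lo, hi in key_range_segments(1, 10, 3):
    print(f"SELECT * FROM orders WHERE id BETWEEN {lo} AND {hi};")
```

If the keys are skewed, equal key ranges will not mean equal row counts, so you may want to derive boundaries from the actual data distribution instead.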

Also, please remember the difference between migration and replication! Using a database migration tool for replication can lead to mistakes. For example, if you forget to drop your Postgres replication slot at the end of a migration, the slot keeps your source retaining WAL, which can eventually fill its disk.
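A cleanup step at the end of a migration can look for leftover slots in the `pg_replication_slots` view and drop them with `pg_drop_replication_slot()`. The Python sketch below works on rows you have already fetched (for example with `SELECT slot_name, active FROM pg_replication_slots;`) so it runs without a database. The `migration_` prefix is a hypothetical naming convention for slots your migration created, and the sample rows are made up.

```python
def drop_statements(slots: list[dict], owned_prefix: str) -> list[str]:
    """Build drop statements for inactive replication slots left behind by a migration.

    `slots` holds rows from: SELECT slot_name, active FROM pg_replication_slots;
    Only inactive slots whose names start with `owned_prefix` (a hypothetical
    naming convention) are selected, so slots used by other consumers are kept.
    """
    statements = []
    for slot in slots:
        if not slot["active"] and slot["slot_name"].startswith(owned_prefix):
            # pg_drop_replication_slot fails on an active slot, so only inactive ones are listed.
            statements.append(f"SELECT pg_drop_replication_slot('{slot['slot_name']}');")
    return statements


rows = [
    {"slot_name": "migration_orders", "active": False},  # leftover from the migration
    {"slot_name": "migration_users", "active": True},    # still in use: keep
    {"slot_name": "analytics_cdc", "active": False},     # not ours: keep
]

for stmt in drop_statements(rows, "migration_"):
    print(stmt)
```

Review the generated statements before running them; dropping a slot that another consumer still needs breaks that consumer’s replication.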

How to choose the best option

For the most part, if you are interested in a cloud option, need the scalability, and value ease of use and productivity, you should evaluate Estuary.

- Real-time and batch: Estuary not only has sub-100ms latency; it also supports batch natively with a broad range of connectors.

- High scale: Estuary has the highest (GB/sec) scale, along with Debezium/Kafka and Striim, all of which have much higher scalability than Fivetran and other ELT vendors.

- Most efficient: Estuary alone has the fastest and most efficient CDC connectors. It is also the only vendor to enable exactly-and-only-once capture, which puts the least load on a system, especially when you’re supporting multiple destinations including a data warehouse, high performance analytics database, and AI engine or vector database.

- Most reliable: Estuary’s exactly-once transactional delivery and durable stream storage is partly what makes it the most reliable data pipeline vendor as well.

- Lowest cost: for data at any volume, Estuary is the clear low-cost winner.

- Great support: Customers moving from Fivetran to Estuary consistently cite great support as one of the reasons for adopting Estuary.

If you are committed to open source and want or need to build your own modern data pipeline as a common backbone, Debezium (with Kafka) is your other option. It’s what some of the tech giants have done. But it will require a big investment in resources and money.

Ultimately, the best approach for evaluating your options is to identify your current and future needs for connectivity, key data integration features, performance, scalability, reliability, and security, and use this information to choose a good short-term and long-term solution for you.

Getting Started with Estuary

Getting started with Estuary is simple. Sign up for a free account.

Make sure you read through the documentation, especially the get started section.

I highly recommend you also join the Slack community. It’s the easiest way to get support while you’re getting started.

If you want an introduction and walk-through of Estuary, you can watch the Estuary 101 Webinar.

Questions? Feel free to contact us any time!

About the author

Rob has worked extensively in marketing and product marketing on database, data integration, API management, and application integration technologies at WSO2, Firebolt, Imply, GridGain, Axway, Informatica, and TIBCO.
