3 reasons to rethink your approach to change data capture

Change data capture, or CDC, is a data industry buzzword that deserves its hype. As data architectures grow in complexity and real-time data becomes non-negotiable across industries, companies need a way to sync data instantly across all their systems.

But CDC, especially real-time CDC, is a young and rapidly evolving technology. In the last few years, open-source, event-driven CDC platforms have become the industry standard. These are vast improvements over older, batch CDC methods, but like any new technology, they too have their limits.

As we navigate this rapidly evolving space, we should be familiar with the challenges of change data capture as it currently exists. But we should not expect them to remain the same for long. In the future, real-time CDC options will improve, both through the growth of current platforms and the addition of new ones.

Our platform, Flow, is one of these. We’re committed to lowering the barrier to entry around real-time, scalable CDC. Drawing on our team’s years of experience in this field, this post discusses three CDC roadblocks we hear about frequently, and how they can be solved.

But first, we need a bit of context.

The state of change data capture

This post is the second in a series on change data capture. In the introductory post, I gave a comprehensive background on CDC: what it is, different implementations, and when you should use it. Here, I’d like to expand on a few important points.

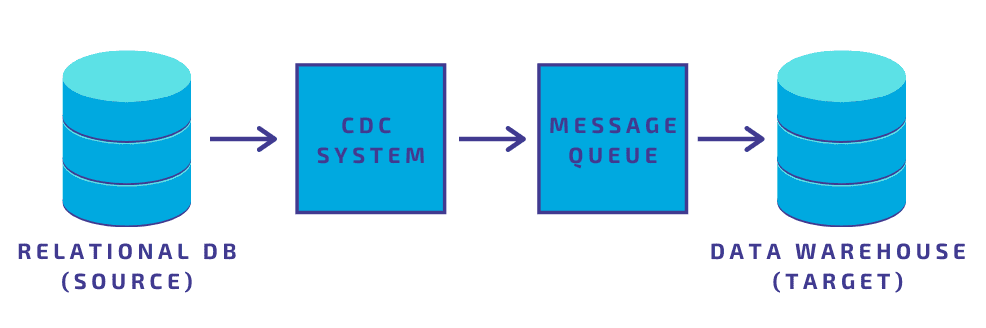

These days, when we talk about “change data capture,” we’re often using it as shorthand for a specific kind of CDC: real-time, event-driven CDC that employs the database logs, a message queue (or an event bus), and some kind of CDC platform or connector.

Essentially, the CDC platform listens for new data change events (inserts, updates, or deletes) in database source tables. These events are written to the message queue and then propagated to a target system. Thus, the target is kept up to date with the source within moments of a change being made.
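To make that flow concrete, here is a minimal Python sketch of the final step: applying a stream of change events to a target. The `ChangeEvent` shape and the in-memory dictionary standing in for the target system are assumptions made for illustration; real CDC platforms define their own event formats.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ChangeEvent:
    op: str                      # "insert", "update", or "delete"
    key: Any                     # primary key of the affected row
    row: Optional[dict] = None   # new row contents; None for deletes


def apply_event(target: dict, event: ChangeEvent) -> None:
    """Apply one change event to an in-memory stand-in for the target."""
    if event.op in ("insert", "update"):
        target[event.key] = event.row
    elif event.op == "delete":
        target.pop(event.key, None)
    else:
        raise ValueError(f"unknown operation: {event.op}")


def replay(events, target=None) -> dict:
    """Apply events in order and return the resulting target state."""
    target = {} if target is None else target
    for event in events:
        apply_event(target, event)
    return target


events = [
    ChangeEvent("insert", 1, {"id": 1, "name": "Ada"}),
    ChangeEvent("insert", 2, {"id": 2, "name": "Grace"}),
    ChangeEvent("update", 1, {"id": 1, "name": "Ada L."}),
    ChangeEvent("delete", 2),
]
target = replay(events)
```

Because events are applied in the order they were captured, the target ends up mirroring the source's current state rather than its history.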

This method became approachable thanks to the open-source pioneer Debezium, founded in 2016. Debezium uses Apache Kafka as an event bus and offers several connectors to popular source databases. It can be used out-of-the-box or as a foundation for a custom CDC implementation.

It was a major breakthrough for CDC technology. Change data capture had been defined as early as the 1990s, but the implementations most organizations could create pre-Debezium were underwhelming.

These implementations were simple SQL-based queries or triggers. Though approachable, they were batch solutions that introduced latency. (Change data capture doesn’t, by definition, need to be real-time.)
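As a rough sketch of the query-based style, the Python example below polls a table for rows modified since the last run. It uses an in-memory SQLite database; the `customers` table and its `updated_at` column are hypothetical, and a real implementation would depend on your schema and database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", 100), (2, "Grace", 105)],
)


def poll_changes(conn, last_seen):
    """Return rows modified after last_seen, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    new_mark = rows[-1][2] if rows else last_seen
    return rows, new_mark


# First poll picks up everything; later polls only see newer timestamps.
first_batch, mark = poll_changes(conn, 0)
conn.execute("UPDATE customers SET name = 'Ada L.', updated_at = 110 WHERE id = 1")
second_batch, mark = poll_changes(conn, mark)
```

A change only becomes visible the next time the poll runs, which is where the latency of this approach comes from.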

Even organizations that didn’t care about real-time data could get stymied by performance. Many of these methods bogged down source databases even at modest scales.

But despite being a dramatic improvement, the current state of event-driven CDC has its shortcomings. The space has not yet diversified, and still relies heavily on a single open-source architecture, Debezium. This means the limitations of Debezium are nearly synonymous with the limitations of CDC.

At the moment, most of the large tech companies seeking an alternative to Debezium’s defaults do one of two things: they customize it heavily, like Shopify; or they build something completely from scratch, like Netflix. Small or mid-sized companies, however, may not have the bandwidth for this sort of work.

Why you should rethink change data capture

If your organization is trying to implement real-time change data capture, but can’t find a solution that will work for you, you’re not alone.

Below are three common change data capture challenges. Understanding these might help you put your finger on what, exactly, is challenging you. More importantly, it’ll highlight what you should look for in a solution in the next few years.

Open-source CDC work is ongoing: both improvements to Debezium and other, newer projects. Startups are offering CDC services. Overall, as the ecosystem becomes richer, you’ll start seeing more solutions to these problems.

To demonstrate that, after each problem, I’ll discuss how we’re approaching it at Estuary.

1- Unavailability of user-friendly change data capture tools

Discussions around hot topics like data mesh and DataOps promise a future in which data is no longer siloed within organizations.

Silos are the classic data paradigm: specialized engineers manage data infrastructure, and analysts consume data to meet business requirements. The sharp divide often leads to misunderstandings and frustration on both sides.

Recently, there’s been a shift in the industry toward teams of diverse skillsets and professional backgrounds collaborating throughout the entire data lifecycle. This is not only better for morale; it also has practical benefits. Data touches the entire business in a way it never did before, so more people should be meaningfully involved.

That’s why we need data tools and platforms that are approachable for the largest possible user group. Tools should always feature developer-focused workflows and a high degree of customization for technical experts. However, it’s also important to have a UI wherever reasonable.

Currently, real-time CDC lies mostly in the domain of highly specialized engineers. But it doesn’t need to be. In many cases, CDC pipelines are simple enough that somewhat less-technical users can create and manage them, too — given the right tools.

In the future, it’s reasonable to expect both developer and non-developer experiences to be excellent in CDC tools. Debezium recently released its UI, and many other UI-forward CDC tools are hitting the market.

What we’re doing at Estuary

Estuary Flow was designed from day one for full data team collaboration: engineers, analysts, business managers, and everyone in between.

We’re committed to full back-end access and ongoing support for developers. Data flows can be created and run through a GitOps workflow. At the same time, we’re prioritizing an intuitive user interface to allow other stakeholders to meaningfully contribute to the same data flow with zero code. The UI also provides an approachable entry point for users who’d like to grow more technical and begin to work more directly with code.

2- Performance issues with CDC at scale

Even real-time, event-based CDC can struggle at scale. This shows up most painfully in backfills of historical data.

In the previous post of this series, I discussed how SQL-based batch CDC affects the performance of the database, even at modest scales. This is fairly intuitive: repeatedly querying a growing table becomes compute-intensive. The same is true when using a trigger function that evaluates every action on the table using the database’s own resources.
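The trigger-based variant can be sketched in the same way. In the hypothetical example below, again using in-memory SQLite from Python, every write to an `orders` table also writes a row to an `orders_changes` table. The table and trigger names are assumptions; the point is that the extra work runs inside the source database on every action.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT);

-- Change table populated by the triggers below.
CREATE TABLE orders_changes (
    change_id INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT,
    order_id INTEGER,
    status TEXT
);

CREATE TRIGGER orders_ins AFTER INSERT ON orders BEGIN
    INSERT INTO orders_changes (op, order_id, status)
    VALUES ('insert', NEW.id, NEW.status);
END;

CREATE TRIGGER orders_upd AFTER UPDATE ON orders BEGIN
    INSERT INTO orders_changes (op, order_id, status)
    VALUES ('update', NEW.id, NEW.status);
END;

CREATE TRIGGER orders_del AFTER DELETE ON orders BEGIN
    INSERT INTO orders_changes (op, order_id, status)
    VALUES ('delete', OLD.id, OLD.status);
END;
""")

# Each of these writes fires a trigger, doubling the work done by the database.
conn.execute("INSERT INTO orders VALUES (1, 'new')")
conn.execute("UPDATE orders SET status = 'shipped' WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 1")

changes = conn.execute(
    "SELECT op, order_id, status FROM orders_changes ORDER BY change_id"
).fetchall()
```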

Event-driven architectures eliminate these problems. Relevant events are tracked in the database log, and immediately generate messages in the queue. These are handled by the CDC platform, and don’t bog down the source database.

However, even event-driven CDC sometimes needs to look at the source table as a whole. This is referred to as a snapshot or a dump. By nature, event logs are incomplete records and are not 100% reliable, so most CDC platforms periodically scan the entire source table.

Taking this a step further, if there’s downtime at any point along the CDC pipeline, records of events can be missed. The system then needs to backfill that data, which, again, usually requires it to re-read the whole table to figure out where it left off. This effect can compound if CDC is feeding multiple target systems.

These snapshots or historical backfills can have a debilitating effect on source tables: they place locks on the tables, sometimes for hours at a time, and prevent new events from being logged.

These issues are what led Netflix to develop its own CDC framework, DBLog, completely in-house. DBLog allows more control over when dumps occur, prevents locking, and allows dumps and logs to share resources.

Netflix plans to open-source DBLog, which makes it an excellent example of how open-source offerings diversify to meet different use cases.

What we’re doing at Estuary

When we designed Flow, we prioritized easy backfills by:

- Storing fault-tolerant records of source data within the runtime, backed by a data lake. These are known as collections.

- Supporting incremental backfills by durably storing transactions.

Flow uses the source database as durable storage. Taking advantage of the transactional capabilities of the database, Flow keeps a record of everything it’s read from the source table in the table itself as a checkpoint. That is, it reads from and writes to the source, making it distinct from other systems, which only read.

This allows Flow to avoid race conditions that might otherwise occur, which could cause data duplication or other issues during backfills. It also allows Flow to parallelize the backfill task, giving it speed without placing unnecessary strain on the database.

The advantages of this approach compound when the same source data is connected to multiple targets — Flow doesn’t have to repeat the same backfill.
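The general idea of a checkpointed, incremental backfill can be sketched as follows. This is not Flow's implementation: it is a simplified Python illustration using in-memory SQLite, and the `items` and `backfill_checkpoint` tables are hypothetical. It shows only the shape of the technique, in which a chunk is read by primary key and the checkpoint is advanced in the same database transaction.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE items (id INTEGER PRIMARY KEY, payload TEXT);
-- Hypothetical checkpoint table stored alongside the source data.
CREATE TABLE backfill_checkpoint (table_name TEXT PRIMARY KEY, last_id INTEGER);
INSERT INTO backfill_checkpoint VALUES ('items', 0);
""")
conn.executemany(
    "INSERT INTO items VALUES (?, ?)", [(i, f"row-{i}") for i in range(1, 8)]
)
conn.commit()


def backfill_chunk(conn, chunk_size=3):
    """Read the next chunk by primary key and advance the checkpoint."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute("BEGIN")  # make the reads and the update one transaction
        (last_id,) = conn.execute(
            "SELECT last_id FROM backfill_checkpoint WHERE table_name = 'items'"
        ).fetchone()
        rows = conn.execute(
            "SELECT id, payload FROM items WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, chunk_size),
        ).fetchall()
        if rows:
            conn.execute(
                "UPDATE backfill_checkpoint SET last_id = ? "
                "WHERE table_name = 'items'",
                (rows[-1][0],),
            )
    return rows


# Each call resumes from the stored checkpoint instead of re-reading the table.
chunks = []
while True:
    chunk = backfill_chunk(conn)
    if not chunk:
        break
    chunks.append([row_id for row_id, _ in chunk])
```

Because progress is stored durably, a restart after downtime can pick up at the last recorded key rather than starting the scan over.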

3- Custom architecture and transformation are challenging

The base architecture of a CDC pipeline is simple: transferring data change events from a source to a target. But in practice, there are endless variations:

- What if you have multiple sources or multiple targets?

- What if the data in the source (or target) is distributed into multiple tables or other resources?

- What if you want to add a real-time data transformation along the way?

The majority of these challenges come down to the message bus on which your CDC is built. Usually, that’s Apache Kafka. Kafka is an extremely powerful platform, but it requires a high level of expertise to use effectively.

In practice, this means that if you don’t have an engineering team to devote to the task, deviations from basic architecture can become CDC showstoppers.

A great example of architectural complexity is Shopify. Shopify’s source data in MySQL was divided across 100+ instances, but they couldn’t support 100+ Kafka topics in their CDC implementation. Instead, they wanted to merge topics based on common tables. As seasoned Kafka users, they were able to accomplish this, but it wasn’t simple, and required the team to develop additional expertise with Kafka Streams.
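Stripped of the Kafka specifics, the merge itself looks something like the Python sketch below. This is not Shopify's code and does not use Kafka Streams; the event shape, shard names, and `merge_by_table` function are assumptions made for illustration.

```python
from collections import defaultdict


def merge_by_table(shard_events):
    """Group events from many shards into one stream per logical table.

    shard_events maps a shard name to that shard's ordered list of events.
    """
    merged = defaultdict(list)
    for shard, events in shard_events.items():
        for event in events:
            # Keep the shard of origin in the key so that rows with the same
            # id on different shards stay distinct after merging.
            merged[event["table"]].append({**event, "key": (shard, event["id"])})
    return dict(merged)


shard_events = {
    "shard-1": [{"table": "orders", "id": 1}, {"table": "customers", "id": 1}],
    "shard-2": [{"table": "orders", "id": 1}],
}
merged = merge_by_table(shard_events)
order_keys = [event["key"] for event in merged["orders"]]
```

The sketch hides the hard parts, such as ordering guarantees and throughput across more than a hundred instances, which is where the real engineering effort goes.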

Operational data transforms are challenging to add with Kafka alone. Kafka’s consumer framework does make this possible, but more often than not you’ll end up integrating a separate processing engine or hiring a service to help.

What we’re doing at Estuary

Flow is designed for architectural flexibility and transformation. It allows you to:

- Add multiple data sources and destinations for each pipeline

- Configure multiple bindings for a given source or destination

- Perform operational transforms with derivations

Flow is built on the assumption that a given data pipeline — CDC or otherwise — is likely to be complex. You can incorporate as many external sources and destinations as you need in your pipeline using our growing ecosystem of connectors.

When you define each connection, you have additional customization options. You can specify multiple bindings to connect to multiple resources, like tables, within the system. In a case like Shopify’s, each MySQL instance would have its own binding, and all data would automatically be combined based on a key.

Flow also makes transformations accessible with derivations: aggregations, joins, and stateful stream transformations applied directly to data collections.

All of this is available directly within your central Flow environment of choice — both the CLI and the UI — with no special configuration required.
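As a rough illustration of the kind of stateful stream transformation mentioned above, the Python sketch below keeps a per-customer order total up to date as change events arrive. It is not Flow's derivation syntax; the `RunningTotals` class and the event fields are hypothetical.

```python
from collections import defaultdict


class RunningTotals:
    """Maintains a per-customer order total from a stream of change events."""

    def __init__(self):
        self.order_state = {}                     # order id -> (customer, amount)
        self.total_by_customer = defaultdict(int)

    def apply(self, event):
        # Retract the order's previous contribution, if it had one.
        previous = self.order_state.pop(event["order_id"], None)
        if previous is not None:
            customer, amount = previous
            self.total_by_customer[customer] -= amount
        # Inserts and updates add the order's current contribution.
        if event["op"] != "delete":
            self.order_state[event["order_id"]] = (event["customer"], event["amount"])
            self.total_by_customer[event["customer"]] += event["amount"]


totals = RunningTotals()
for event in [
    {"op": "insert", "order_id": 1, "customer": "a", "amount": 10},
    {"op": "insert", "order_id": 2, "customer": "a", "amount": 5},
    {"op": "update", "order_id": 1, "customer": "a", "amount": 20},
    {"op": "delete", "order_id": 2},
    {"op": "insert", "order_id": 3, "customer": "b", "amount": 7},
]:
    totals.apply(event)
```

The state kept between events is what makes the transformation "stateful": updates and deletes can only be handled correctly if the previous value of each order is remembered.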

What’s next?

There’s lots of room for growth in change data capture, and more accessible tools are on the horizon.

Estuary Flow is one of them. You can try it free here.
